AI: Persona stopped 75+ million AI Face Spoofs in 2024 alone

Generative AI Drives $20B in Fraud with Synthetic IDs

Hi Fintech Futurists —

Today’s agenda below.

Summary: We explore the rapidly escalating threat of generative AI–driven fraud in financial services, particularly through synthetic identities and deepfakes. Criminals are now using AI to impersonate executives, forge identity documents, and bypass KYC protocols with lifelike spoofed videos, leading to billions in fraud losses. Persona, a digital identity platform, has detected over 75 million AI-based spoof attempts in 2024 and built 25 micromodels to detect fraud in ID and selfie verifications. The rise of “deepfake-as-a-service” and fraud marketplaces shows how industrialized this threat has become. While Web3 identity and blockchain verification offer promise, most companies must rely on multilayered, real-time detection strategies to stay ahead in this arms race.

This article was written in partnership with Persona. To support the Blueprint, make sure to check them out below — your engagement helps us immensely.

Digital Investment & Banking Short Take

Persona stopped 75+ million AI Face Spoofs in 2024 alone

Generative AI is letting us all create digital avatars, repurpose our voices and faces, and automate those TikTok reels. That’s a clear consumer benefit. Unfortunately for financial services, there is also an emerging downside.

Since the rise of deepfakes, we have talked about AI’s potential to facilitate fraud and scams, including bypassing KYC and executing other types of attacks. The better AI gets at imitating real people, the better it gets at fabricating convincing fake ones and using them to break into systems.

Generative AI can create synthetic humans at scale who seem real but aren't. They can carry out sophisticated social engineering attacks: in one case, fraudsters impersonated the CFO and other executives of a Hong Kong company during a video conference call and convinced an employee to transfer $25MM of company money. Visual and auditory authentication methods are increasingly vulnerable.

Criminals can use generative AI to create fake driver's licenses and other identity documents, with quality ranging from crude online creations to crime-ring-level reproductions. Because KYC processes often rely on visual verification of identity documents, and sometimes on video calls, the advancement of deepfakes poses a direct threat to their effectiveness.

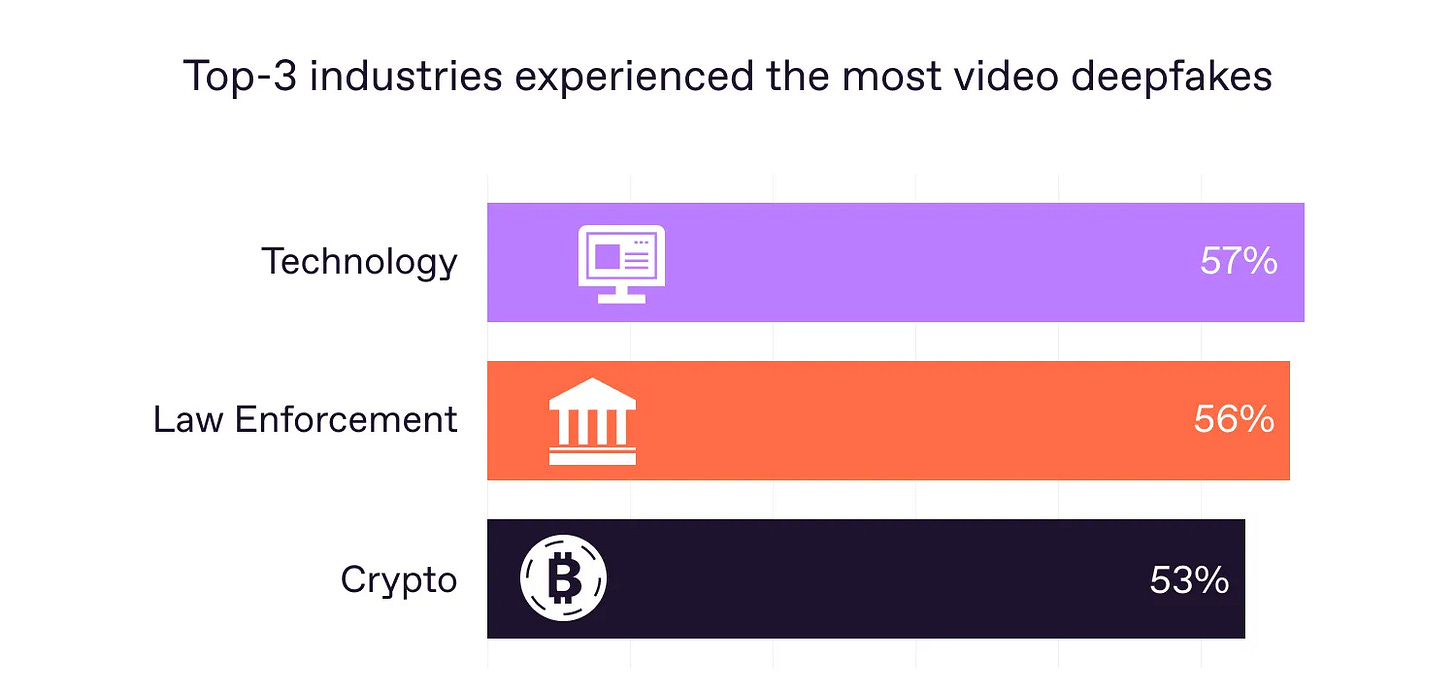

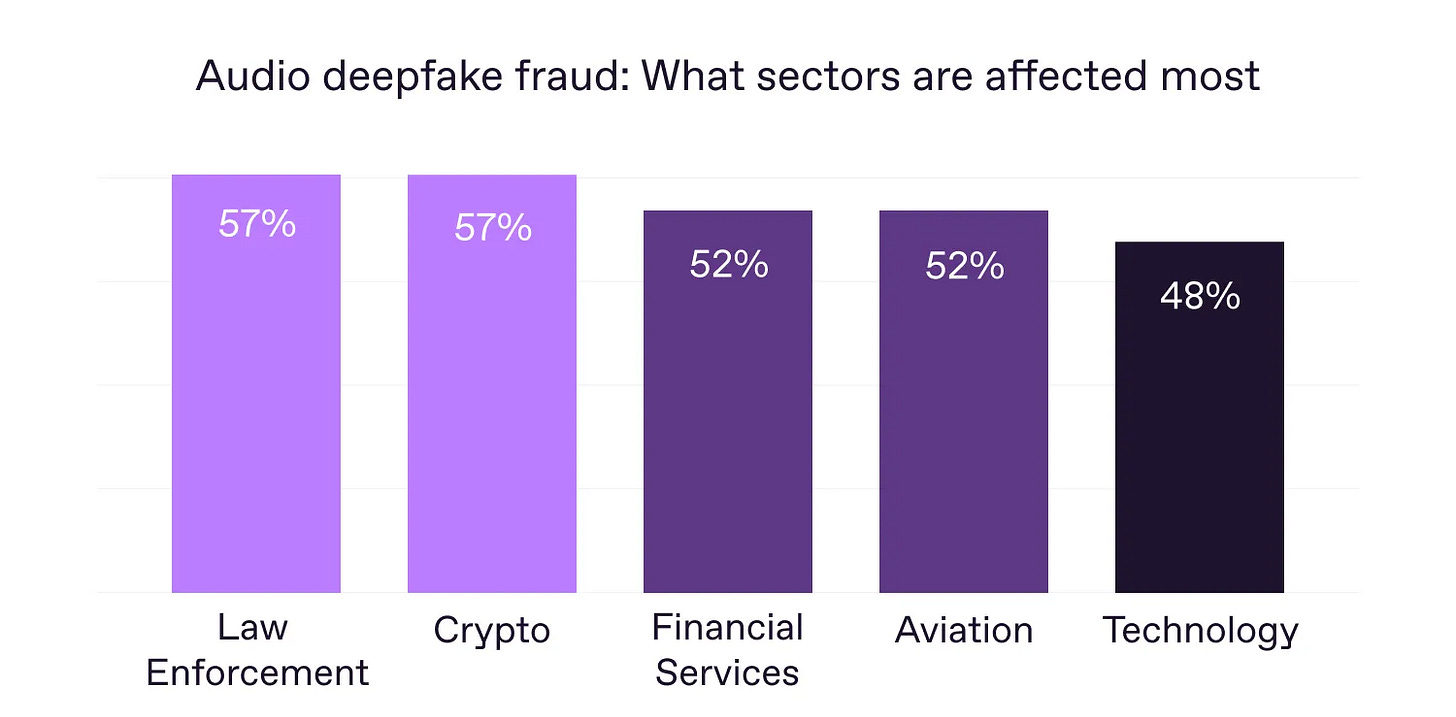

In the U.S., losses from synthetic ID fraud were around $20B in 2020, a sharp rise from $6B in 2016. Europe has also seen a spike: Experian reported that “synthetic fraud” cases in the UK jumped 60% in 2024 compared with 2023 and now make up nearly one-third of all identity fraud there. These fake identities result in unpaid loans, credit card charge-offs, and even abuse of government programs, directly hitting lenders’ balance sheets. Deloitte estimates that GenAI could drive up to $40B in fraud losses towards the end of the decade, and surveys suggest that finance and crypto are the juiciest targets.
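As a back-of-the-envelope check on the U.S. figures, growth from $6B in 2016 to $20B in 2020 implies a compound annual growth rate of roughly 35%. The minimal sketch below just computes that rate from the two endpoints; it assumes smooth compounding between the years, which the underlying data does not claim, and the `cagr` helper is a hypothetical name for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart.

    Assumes smooth year-over-year compounding, which real loss data
    rarely follows; treat the result as an average, not a trend line.
    """
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# U.S. synthetic ID fraud losses in billions of USD, as quoted in the text
rate = cagr(start_value=6.0, end_value=20.0, years=2020 - 2016)
print(f"Implied annual growth: {rate:.1%}")  # prints "Implied annual growth: 35.1%"
```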

As companies build capabilities to combat these attack vectors, the attacks themselves are getting more sophisticated. While deepfakes may be the most notorious type of AI-based face spoof, other variations have emerged. Persona, a leading digital identity platform that works with fintechs like Brex, AngelList, and Branch, has identified over 50 distinct classes of AI-based face spoofs, including face swaps, 3D avatars, and face morphs. These spoofs have not only become more realistic but can also perform more lifelike actions: what used to be static images are now live videos that can get past real-time verification systems.

As a result, it has become challenging, if not impossible, for humans to tell reality from a spoof. Using a variety of AI models, criminals generate several types of face spoofs so they have fallbacks if the first ones get flagged. They also generate images of realistic IDs featuring information stolen from the dark web or taken from online profiles. This is no longer the work of individual bad actors but an industry, with a value chain of tool developers and their customers.

Online communities and hackers build apps that others can use to attack companies, including financial institutions. The study below shows several such communities, each with thousands of users, centered on these capabilities.

Experienced forgers now offer “deepfake-as-a-service” on dark web forums, selling custom-made fake videos or documents to less tech-savvy criminals. Prices range from just $20 for basic deepfake tools up to a few thousand dollars for more sophisticated, turnkey fraud kits. There is even evidence of long-term “sleeper” fraud strategies, where fraudsters cultivate synthetic identities over months or years (building credit scores, etc.) before cashing out.

What can be done in response to these threats? Clearly there is an ongoing arms race between the capabilities of generative AI for fraudulent activities and the development of more sophisticated security measures.

We have talked in the past about the combination of Web3 / blockchain with AI, where blockchain can provide a layer of trust and verification for digital identities and transactions. Concepts like verifiable credentials and proof of humanity are also being explored as ways to distinguish between genuine users and AI-generated entities. Unfortunately, decentralized identity is still in its early innings and has not been widely adopted.

A systematic approach to identifying and fighting fraud can be useful.

Persona suggests the above strategic framework when working with customers. The more data you can collect, augment, and view in aggregate (e.g., with a link analysis tool), the better your chance of understanding the situation and figuring out how to defend your business. In practice, this means collecting diverse visual and non-visual signals and extracting insights from them to build a more holistic picture of the attempted fraud types. Speed also matters: in just two months, Persona integrated 25 fraud detection micromodels into its government ID and selfie verifications.
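To illustrate what viewing data “in aggregate” with link analysis can mean, here is a minimal sketch, not Persona's implementation: verification attempts that share a non-visual signal are merged into clusters with a union-find structure, so a ring of synthetic identities reusing one device or one stolen ID number surfaces as a single group. The `Attempt` schema, its fields (`device_id`, `ip_address`, `id_number`), the sample data, and the `min_size` threshold are all hypothetical assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Attempt:
    """One verification attempt (hypothetical schema for illustration)."""
    attempt_id: str
    device_id: str
    ip_address: str
    id_number: str


class UnionFind:
    """Disjoint-set structure used to merge linked attempts."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        self.parent[self.find(a)] = self.find(b)


def link_clusters(attempts: list[Attempt]) -> list[set[str]]:
    """Group attempts connected through any shared signal value."""
    uf = UnionFind()
    first_seen: dict[tuple[str, str], str] = {}
    for a in attempts:
        uf.find(a.attempt_id)  # register attempts that link to nothing
        for field in ("device_id", "ip_address", "id_number"):
            key = (field, getattr(a, field))
            if key in first_seen:
                uf.union(a.attempt_id, first_seen[key])
            else:
                first_seen[key] = a.attempt_id
    groups: dict[str, set[str]] = defaultdict(set)
    for a in attempts:
        groups[uf.find(a.attempt_id)].add(a.attempt_id)
    return list(groups.values())


def suspicious_clusters(attempts: list[Attempt], min_size: int = 3) -> list[set[str]]:
    """Return clusters with at least `min_size` attempts (assumed threshold)."""
    return [c for c in link_clusters(attempts) if len(c) >= min_size]


sample = [
    Attempt("a1", "dev-1", "10.0.0.1", "ID-100"),
    Attempt("a2", "dev-1", "10.0.0.2", "ID-101"),  # shares a device with a1
    Attempt("a3", "dev-2", "10.0.0.2", "ID-102"),  # shares an IP with a2
    Attempt("a4", "dev-3", "10.0.0.9", "ID-103"),  # linked to nothing
]
print(suspicious_clusters(sample))  # one cluster containing a1, a2, a3
```

Note that a1 and a3 share no signal directly but still land in the same cluster through a2; catching such indirect links is the point of link analysis. A production system would likely also weight links by how rare the shared value is, since a shared office IP address says much less than a shared ID number.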

Companies battling the generative AI fraud onslaught operate in a fast-growing market, estimated to expand from about $1B in revenue to $5B by 2030. Companies like Persona are building platforms for ongoing identity management, aiming to maximize customer onboarding while minimizing fraud. In 2024 alone, Persona caught over 75 million fraud attempts that used AI-based face spoofs.

While we are fans of the machine economy, it has to leave some space for us humans as well.


🚀 Level Up

Join our Premium community and receive all the Fintech and Web3 intelligence you need to level up your career. Get access to Long Takes, archives, and special reports.

Sponsor the Fintech Blueprint and reach over 200,000 professionals.

👉 Reach out here.

Check out our AI newsletter, the Future Blueprint, 👉 here.

Read our Disclaimer here — this newsletter does not provide investment advice

At some point, do you think face recognition is just going to stop working and we're going to have to move to biometrics and stuff like Worldcoin?