Analysis: The $10T cost of ChatGPT's economic advice, and AI governance

AI can rationalize flawed decisions with scientific-sounding nonsense.

Gm Fintech Architects —

Today we are diving into the following topics:

Summary: We explore the growing role of AI in policymaking, sparked by the apparent use of AI-generated logic behind recent U.S. tariff proposals. The piece draws a stark contrast between scientific reasoning and pseudo-scientific justifications — comparing astronomy to astrology — to highlight the dangers of using generative AI to rationalize flawed economic decisions. It questions whether AI will asymptotically match human cognition or exceed it, and whether centralized AI governance can outperform decentralized market systems in managing complex economies. While AI offers precision and scale, it also inherits political and data biases from its creators, risking technocratic overreach if used blindly. Ultimately, markets — despite their flaws — remain the most faithful processors of real-world complexity, and AI should be used to augment, not replace, their adaptive power.

Long Take

Astrology

As a rule, we don’t want to worship Magicians, but we do want to empower and support Scientists.

There is a difference between bullshit and reality. Financial nihilism is a failed philosophy: just because something about the world is wrong does not mean everything is wrong. Nuance and degrees of thinking matter. Building fundamentally functional things matters. There is a distinction between Astrology, the voodoo practice of making up stories about stars, and Astronomy, which landed us on the Moon and has put us on the way to Mars.

The very idea of a “Blueprint”, which is what you are reading, is the pursuit of a ground truth that actually exists. Reasonable people with different information sources and processing may disagree on what that looks like, but it exists.

Economists hold a broad consensus view on global tariffs: they are harmful because they distort free-market incentives. Large tariffs break trade, and trade is a source of consumer surplus and GDP creation. The same logic underlies the standard textbook argument against minimum wages, which holds that a price floor on labor distorts incentives and limits production. As a society, we may have policy goals that justify these trade-offs. But at least we know the variables that we are trading off.

Tariffs up top, destruction of global value-chain companies like NVIDIA, Google, JPM, and Visa below. This is the first-order effect. We can tell ourselves some story about second-order effects, such as re-shoring production or forcing the Fed to cut rates by generating a recession. That type of logic is too complex for us to reason about correctly, and there’s no good evidence that tanking our economy is the best way to accomplish those goals. Switching the tariffs on, then off, then on again creates uncertainty and erodes market trust.

But this isn’t the main story today. In fact, far from it.

The much more interesting story is that these tariff numbers, and the whole strategy by which they were derived, were very likely made with AI chatbots. And this, in turn, opens up a far weirder and more speculative question.

Are we already living in a world that is being explicitly governed by AI? Web3 and its DAOs have been clamoring for AI management of communes for several years. But those experiments are timid nothing-burgers compared to the idea that the Trump administration may have used OpenAI to do their homework the night before announcing policies.

The White House posted a long, scientific-sounding explanation of the tariff formula on its website. This is Astrology. It masks a simple formula with Greek letters and constants that multiply things by 1. It is ridiculous that the administration chose to fib in such a blatant way, and it reaffirms what we are all in for from an economics perspective.
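To make the "multiply by 1" point concrete, here is a minimal Python sketch. It assumes the formula as it was widely reported from the published explanation: the bilateral goods deficit divided by imports times two Greek-letter parameters, an elasticity set to 4 and a pass-through set to 0.25. The halving of the result and the 10% floor are likewise taken from public reporting and should be read as assumptions. The function name and the trade figures are hypothetical and chosen only for illustration.

```python
def reciprocal_tariff(exports, imports,
                      elasticity=4.0,     # assumed value of the reported elasticity parameter
                      pass_through=0.25,  # assumed value of the reported pass-through parameter
                      discount=0.5,       # assumption: the announced rate was half the computed one
                      floor=0.10):        # assumption: a 10% minimum applied to every country
    """Sketch of the reported formula; not an official implementation."""
    # Sign convention chosen so that a U.S. deficit yields a positive rate.
    computed = (imports - exports) / (elasticity * pass_through * imports)
    return max(floor, discount * computed)


def deficit_over_imports(exports, imports):
    """The same quantity with the Greek letters removed."""
    return (imports - exports) / imports


# Hypothetical trade figures (same units for both), not real data.
exports_to_partner = 100.0
imports_from_partner = 400.0

# 4 * 0.25 == 1, so the two parameters cancel and change nothing.
with_greek = (imports_from_partner - exports_to_partner) / (4.0 * 0.25 * imports_from_partner)
without_greek = deficit_over_imports(exports_to_partner, imports_from_partner)
print(with_greek == without_greek)

# Deficit partner: half of deficit/imports.
print(reciprocal_tariff(exports_to_partner, imports_from_partner))

# Surplus partner: the computed rate is negative, so the floor applies.
print(reciprocal_tariff(exports=200.0, imports=100.0))
```

Under these assumptions the whole apparatus reduces to one division: the trade deficit over imports, cut in half, with a minimum rate for everyone else.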

Occam’s Razor suggests they used an AI assistant to formulate the policy implementation. We know that AIs hallucinate and bullshit. They do this when no obvious answer exists but the user is pushing for a solution. The goal of the AI is to generate an answer that will make the user happy, not one that will make the world a better place.

Punishing the world’s manufacturing base for manufacturing things we want, or taxing islands inhabited by penguins, is exactly what an AI that doesn’t know what to do would do.

These are the implications we explore below.

Quality

It is difficult to track the quality of generative AI development, because it moves so quickly that our minds forget where we were just a year ago.

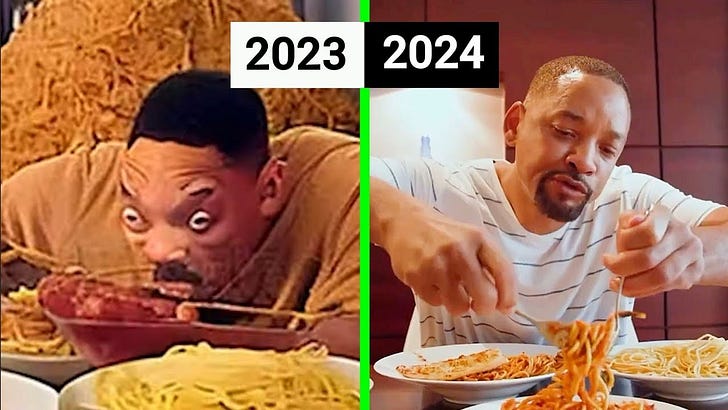

Here’s a great reminder — the infamous video of Will Smith eating Spaghetti.

Compare it to this gem from two months ago by the Dor Brothers.

Can you figure out from this progression where we will be in 2030?

If you were to tell somebody playing Super Mario Bros in 1985 what video games looked like in 2025, do you think they would believe you? That we would go from a few pixels and 8 colors to fully rendered 3D worlds with thousands of players and real-time physics simulation? Sitting here today, we are in the same position: we cannot fathom what AI (our math) is going to be able to do.

Now, Trump’s use of ChatGPT to decide on tariff policy is the equivalent of the original Will Smith eating spaghetti video. It is slop.

But as the technology improves, and as today’s young people, who rely on AI for a meaningful portion of their education, come into power, you had better believe that large-scale machine reasoning systems are going to govern the world.

There is still an unanswered question in my mind.

Will the improvements be asymptotic to human ability? Meaning, will performance improvements stop once AI is indistinguishable from the best person in every field of knowledge?

Or will improvements be exponential, breaking through our aggregate human intelligence and then reaching escape velocity into an alien world of robots?

These charts clearly do not agree about the slope of the line.

Maybe it is some other S curve, claiming new pockets of intelligence and consciousness in ways we are yet to understand.
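One reason forecasts can disagree so sharply is that a curve that saturates and a curve that keeps compounding look almost identical early on. The sketch below is a toy illustration only: the growth rate, the ceiling of 1000, and the time units are arbitrary assumptions, not estimates of anything about real AI systems.

```python
import math


def exponential(t, rate=1.0):
    """Unbounded compounding growth, starting at 1 when t = 0."""
    return math.exp(rate * t)


def logistic(t, ceiling=1000.0, rate=1.0):
    """S curve that also starts at 1 when t = 0 but levels off at `ceiling`."""
    return ceiling / (1.0 + (ceiling - 1.0) * math.exp(-rate * t))


# Early on the two curves are nearly indistinguishable;
# later one flattens under its ceiling while the other keeps climbing.
for t in (0, 2, 5, 10, 20):
    print(t, round(exponential(t), 2), round(logistic(t), 2))
```

If we are still on the early part of the curve, the data we have so far cannot tell us which of the two worlds we are in.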

But for the sake of argument, let’s grant that AI becomes a near-perfect replica of the entire body of human knowledge and cognition. And further, that a user of AI can derive comprehensible answers from that graph of knowledge: we can float around in latent space and pull out the most probable maxima. We ask questions and get high-quality answers to large and complex problems, the equivalent of the improvement in video generation I showed before.

If you are Trump, or Musk, or Bezos, or any person in a position of responsibility, of course you will use this amazing tool to drive your thinking.

The quality of your thinking is likely to improve. Will it then be possible to actually centrally manage an entire economy? Will corporations run by AIs executing on a five-year plan outperform those run by humans financially? What are the moral and philosophical trade-offs of following this path to its natural conclusion?

And most importantly, will an AI-managed approach outperform a free-market approach?