Long Take: Combining AI agents, like AutoGPT, with Web3 chains will create self-driving money

Let's predict the future together

Gm Fintech Architects —

Today we are diving into the following topics:

Summary: Prediction is a hard game, but we can learn by articulating our hypotheses and figuring out the real constraints. We cover how large language models, i.e., LLMs, have evolved recently and their assembly into artificial intelligence agents that can start to do human tasks independently. Robotic process automation is an important industry theme, and LLMs add intelligence into those workflows. However, we dive deeper to think about how self-driving financial agents could be generated on blockchains, and why digital ownership of those software robots is an important destination.

Topics: artificial intelligence, big tech, blockchain, NFTs, wallets and payments

Tags: AutoGPT, BabyAGI, Zapier, UI Path, Wealthfront, Otomo, OpenAI, AIGC Chain, Prompt Sea

If you got value from this article, let us know your thoughts! And to recommend new topics and companies for coverage, leave a note below.

Long Take

Futurecasting

There is nothing to make one feel more foolish than predicting future industry trends and getting them super, duper wrong.

Back in the 1920s, the future was supposed to have metropolitan roof terraces with interconnected train tunnels, flying helicopter cars, and fancy fashion hats. When we look around in 2023, there are drones and green roofs, but very few fancy hats. There are no flying cars or utopian robot societies.

But you can tell that some elements of the predictions are correct! This is true for things that align to repeatable human behavior: shopping, markets, transport, society. So we can recognize the elements of the predictions that are useful, even if the predictions as a whole are wrong. Can you see the hundred-year-old helicar concept somewhere in this drone swarm?

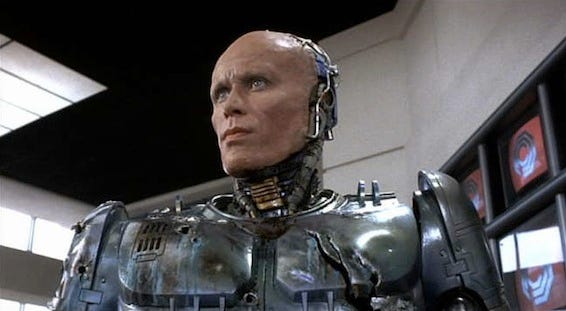

Similarly, the 1980s saw many definitive stories about cyborgs and artificial intelligences. In order to augment human capacity, people would drill computers into their heads and have silicon brains interfacing directly with their meatspace bodies. We all thought that the industry of computing was physical, hardware spliced into DNA. Forty years ago, we did not have concepts for server farms and large cloud infrastructure providers.

We did not have a mobile Internet that could be carried around on the whispers of the wind. To have a computer, it had to be welded into your literal mind.

The prediction wasn’t wrong. We are all cyborgs, walking around with massively powerful computing devices, octopus-sucked into a global technological hivemind. All knowledge is instantly available at our fingertips, and the mechanical processing of a tiny computer feels like a part of our brain. When we don’t have our phones, we feel chemically depleted, depressed, like an addict in withdrawal.

Our para-symbiotic relationship with technology did come to fruition, but it is a lot less grisly than imagined.

Just sadder.

All this to say, prediction is as much about the current moment as it is about the future. We can only extrapolate those things that we see, and guess at the things that we do not.

The more unknowns we incorporate into our predictions, the more incorrect they become, because we are multiplying unlikely probabilities together to get very unlikely ones, and then picking a single point among many possible future states.
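To make the compounding concrete, here is a minimal Python sketch. It assumes the guesses are independent of each other, and both the 70% figure and the `joint_probability` helper are made up for illustration rather than drawn from any data.

```python
from math import prod


def joint_probability(assumption_probs):
    """Chance that every assumption holds, assuming they are independent."""
    return prod(assumption_probs)


# Hypothetical numbers: each guess about the future is 70% likely to be right.
one_guess = joint_probability([0.7])
five_guesses = joint_probability([0.7] * 5)

print(f"one guess:    {one_guess:.2f}")     # 0.70
print(f"five guesses: {five_guesses:.2f}")  # 0.17
```

Five individually reasonable guesses stacked together leave a scenario that is right less than one time in five, which is why a detailed picture of the future so often misses.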

On the other hand, some predictions can accurately answer problems by pulling in the right social, economic, and financial constraints. If, for example, we know that financial inclusion is a problem, or that proximity payments are growing, or that big tech tends to concentrate rather than decentralize, then our predictions actually become more accurate as we add detail. The question becomes: does the detail remove unlikely possibilities and replace them with likely ones, or does it introduce unknown-unknowns and break our mental model?

So let’s play the game.

Autonomous Agents

In 2016, we put together this view of the tech stack in 2026.

Embarrassing stock photography aside, the key here is the separation of the (1) user on the left, and (2) distribution channel on the right. The user, or investor, is generating data exhaust at scale: search intent, social network activity, wearable bio data, and so on. This data gets aggregated into preference graphs, or marketing profiles of the user in Web 2.0 adtech, or into artificial intelligence models creating digital twins of the user.