Long Take: Generative AI creates the surreal search engine of our dreams

We aren't sure that this is a good thing.

Gm Fintech Architects —

Today we are diving into the following topics:

Summary: We catch up on the $150MM and $100MM fundraises from Jasper AI, a generative content assistant, and Stability AI, the company behind Stable Diffusion. The analysis puts into perspective how long it takes to train a single human agent, with an average of 13 years of education and 20 years just to enter the workforce, relative to the progress machines are making at mimicking human labor. We also look at the state of the markets in AI funding, valuation, and examples of trading multiples. Last, we attempt to extend our hypothesis about what the purpose of generative AI really is. Beyond “shallow labor” in DAOs, we arrive at the idea of a search graph of our surreal desires.

Topics: artificial intelligence, big tech, innovation, platform shifts, metaverse, generative AI

Tags: Jasper, Stable Diffusion, DALL-E, GPT-3

If you got value from this article, please share it. Long Takes are premium only, and we need your help to spread the word about how awesome they are!

Long Take

Neural Training

The good news is humanity is generating a tremendous amount of knowledge, and is on average far more educated than ever before.

The average American spends 13.4 years in school.

Let’s for the moment ignore the social signaling and filtering effects of being in a particular schooling environment, and suggest that it takes 13 years to train a human being to contribute meaningfully to the modern economy. In the super-organism brain of humanity, this individual neuron takes a long time to bake.

Labor force participation starts in earnest when we pass through our teenage years, and picks up in our early and mid twenties. Each one of us is good for about 40 years of labor processing. We can say that about 50% of our useful life is spent in training and retirement, and the other 50% in information processing. Dystopian, we know.
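For readers who like to check the math, here is a back-of-the-envelope sketch of that 50/50 split. The 20 years before work and the 40 working years come from the text; the 80-year lifespan is our assumption for illustration, not a sourced figure.

```python
# Rough lifetime budget. The lifespan is an illustrative assumption.
YEARS_BEFORE_WORK = 20  # schooling plus growing up, from the summary
YEARS_WORKING = 40      # "about 40 years of labor processing"
ASSUMED_LIFESPAN = 80   # assumption: not stated in the article


def life_shares(before_work: int, working: int, lifespan: int) -> dict[str, float]:
    """Split a lifespan into training, working, and retirement shares."""
    retirement = lifespan - before_work - working
    if retirement < 0:
        raise ValueError("training plus working years exceed the lifespan")
    return {
        "training": before_work / lifespan,
        "working": working / lifespan,
        "retirement": retirement / lifespan,
    }


shares = life_shares(YEARS_BEFORE_WORK, YEARS_WORKING, ASSUMED_LIFESPAN)
for phase, share in shares.items():
    print(f"{phase}: {share:.0%}")
```

Change the assumed lifespan and the split moves with it, so treat the 50% as a round number rather than a measurement.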

There are a great many of us, and the industrial revolution has correlated with a population explosion as medical efficacy, scientific knowledge, and standards of living increased exponentially. That said, exponential growth won’t continue indefinitely: there are only so many kids people want to have once they reach a certain level of education and lifestyle.

This, dear reader, is our human intellectual capacity, our replacement rate, and our hard limit. You can’t outrun the costs of biology.

The Machine Hivemind

One of the things that we humans do well is chipping away at the limitless potential of technology. Generative AI in particular requires revisiting.

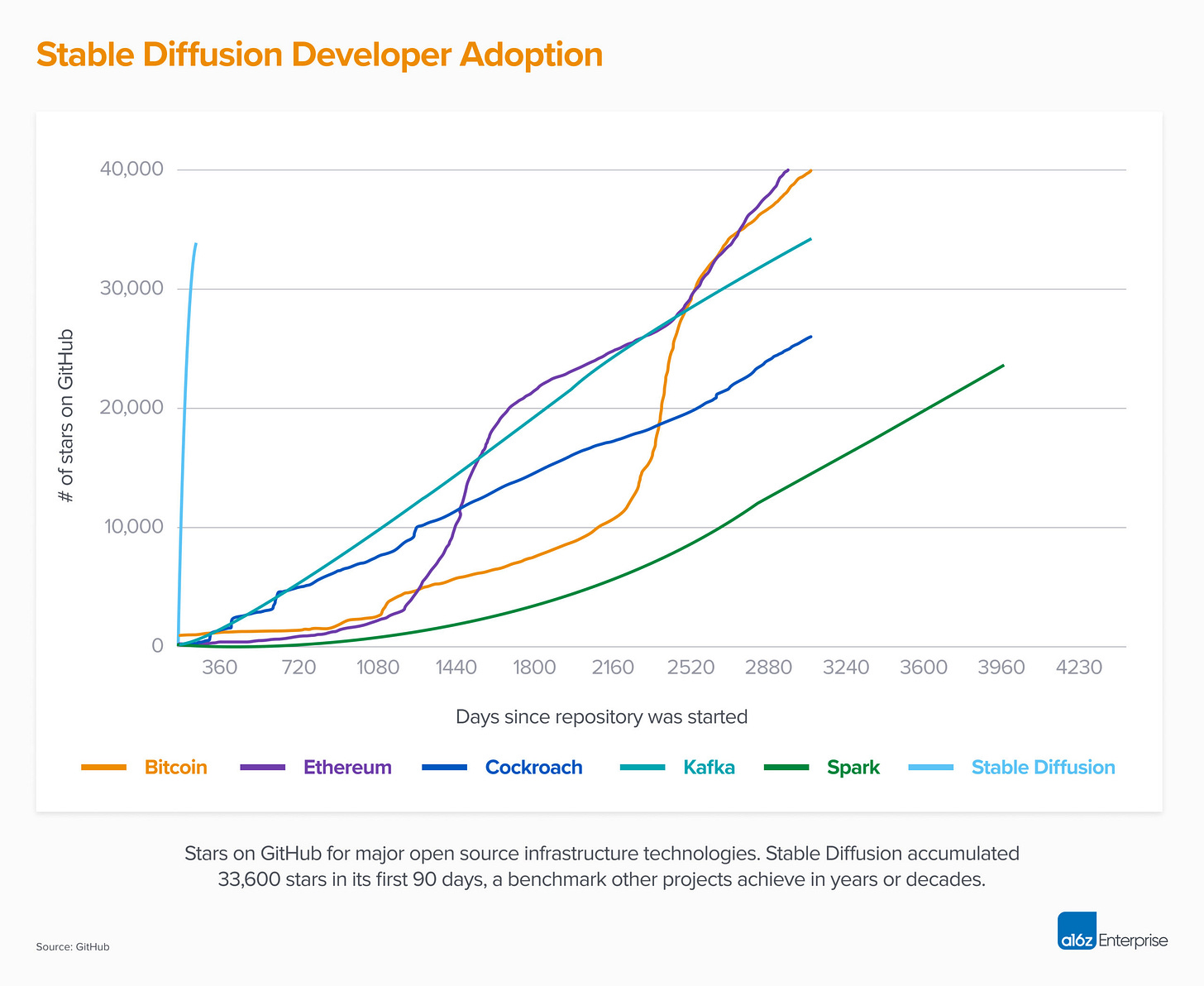

The chart above shows developer interest over time in various open source technologies, from our favorite Ethereum, to data tech Kafka, to the generative AI model Stable Diffusion. Perhaps you can see how that last one is not like the others, with the number of “stars”, i.e., bookmarks, of the codebase shooting almost vertically right from the start.

Stable Diffusion, DALL-E, all these models are built to cut away at noise until an image emerges that we humans recognize and accept as authentic. They are trained on absolutely insane amounts of data and inputs. Imagine the entire visual and written output of humanity stored on the Internet, then flattened into a mathematical space, and traversed using natural language.

We are going to steal some data from the fantastic Stateof.ai report, linked here.

It was a surprise to us to learn that the AI industry has shifted out of the “deep learning era”, and is now in the “large scale era”. Deep learning is all about local data sets for some particular industry, and local solutions with accelerated machine-driven predictions (think credit underwriting, or chat bot conversations). The data and compute demand of the large scale approach, however, is a 100-1000x jump. Instead of nibbling on some particular problem, we are taking on the entire output of that human hive.

The more data you have, and the more you layer it with intent and use, the more realistic our AI representations become. If you give it a sentence, it might learn some words. If you give it a chapter, it will understand grammar. If you give it a book, it might be able to grok a paragraph of coherent narrative. If you give it all the books ever, well, maybe it isn’t so hard to draw the meta-conclusions about the purpose of books, how they are structured, their themes, and how that links to the human condition.

In a flat mathematical space of course. But then, how do you think information enters your cortex? Is everything not reduced down to electric signals hitting various parts of your brain, and floating into your awareness and consciousness?

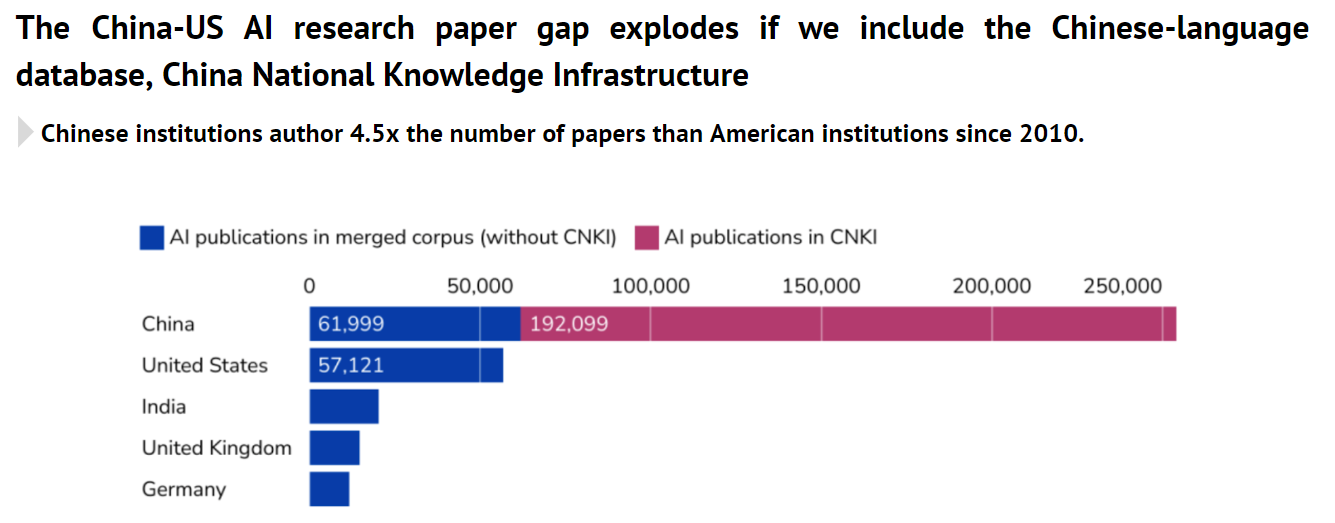

Who gets to translate human knowledge from organic electricity to digital bits is a big competition. We think of it as the competition of the next century, as AI powers both the economic and the war machine. Draw your own conclusions from the chart below.

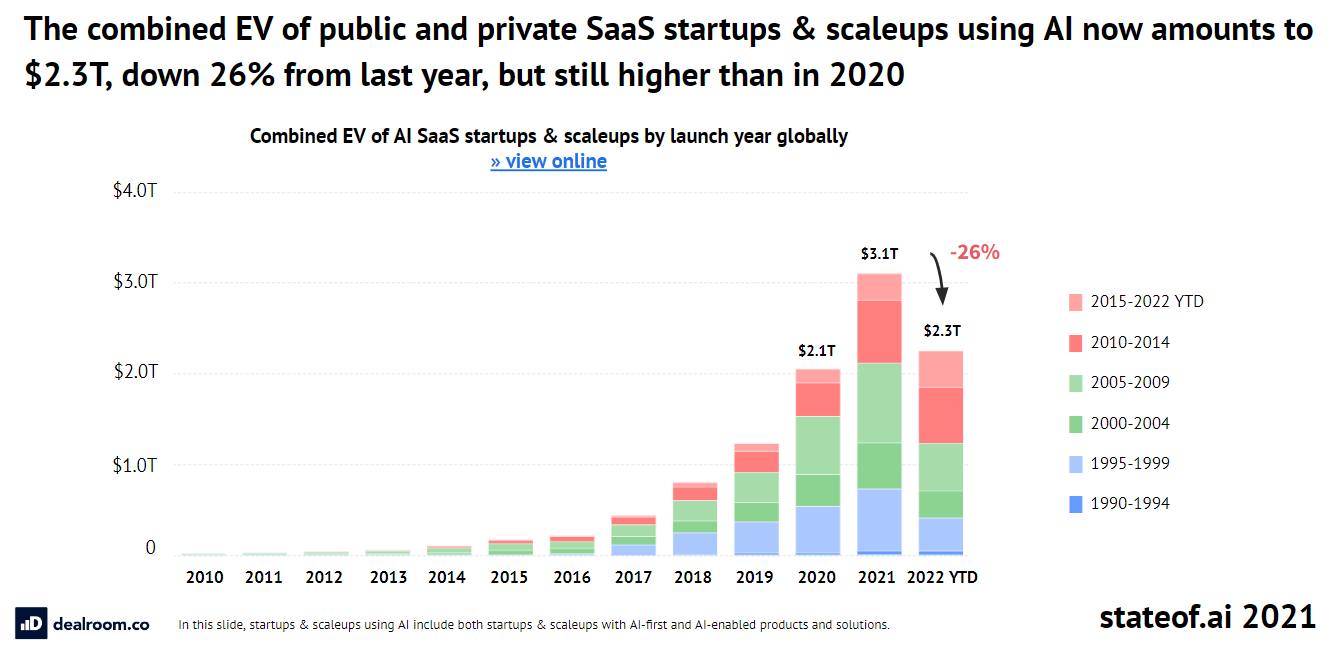

Whereas China leads in government-driven AI strategy, the Western world still has the lead via its capitalist engine. Nearly $70 billion will be invested in AI companies this year, similar to the number in 2020, which suggests that organic growth in interest is balancing out the downward market valuation drift. This amounts to about 12% of all annual venture investment. The $2.4 trillion of enterprise value realized in this space can be compared to $40 trillion of global equity value, or about 6%.
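To put those figures side by side, here is a quick sanity check on the ratios. The inputs are the rounded numbers quoted in the paragraph above; the variable names are ours, and the implied total venture figure is a derived estimate rather than a reported statistic.

```python
# Rounded figures quoted in the paragraph above, in US dollars.
AI_INVESTMENT_USD = 70e9          # "nearly $70 billion" invested this year
AI_SHARE_OF_VENTURE = 0.12        # "about 12% of all annual venture investment"
AI_ENTERPRISE_VALUE_USD = 2.4e12  # enterprise value realized in AI
GLOBAL_EQUITY_VALUE_USD = 40e12   # global equity value

# If $70B is 12% of venture investment, the implied total is 70B / 0.12.
implied_total_venture = AI_INVESTMENT_USD / AI_SHARE_OF_VENTURE

# AI enterprise value as a share of global equity value.
ai_share_of_equity = AI_ENTERPRISE_VALUE_USD / GLOBAL_EQUITY_VALUE_USD

print(f"Implied annual venture investment: ${implied_total_venture / 1e9:,.0f}B")
print(f"AI share of global equity value: {ai_share_of_equity:.1%}")
```

Because the inputs are rounded, the outputs are only good to a percentage point or so.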