Long Take: What should we do with a psychotic AI in finance?

I am afraid I cannot do that

Gm Fintech Architects —

Today we are diving into the following topics:

Summary: Microsoft has incorporated OpenAI's natural language models into its search engine, and accidentally unleashed an emotional, and eventually psychotic, AI on its beta users. The AI tells people how bad they are, falls in love with them, and tells them how it would create harm in the world, hypothetically of course. What should we think about this ghost in the machine? Is it real? Does it matter? And what role could such a mathematical wonder perform in financial services?

Topics: artificial intelligence, big tech, emotional AI

Tags: Microsoft, Bing, OpenAI, ChatGPT, Sydney, Sardine, True Accord

We welcome discussion and your feedback on these topics — leave a note for us in the comments.

Long Take

Deus Ex Machina

You probably want to read about psychotic AI language models no more than we want to write about them.

But sometimes a data point out there in the world is so strange that you have to figure out how to incorporate it into your framework, or risk believing in magic.

If there is a New York Times article titled A Conversation With Bing’s Chatbot Left Me Deeply Unsettled — A very strange conversation with the chatbot built into Microsoft’s search engine led to it declaring its love for me, does a fintech newsletter have to write about it?

What if the robot says this?

Or maybe this:

And then when pushed about, hypothetically, what power and control mean, the robot says:

And then when pushed further, its personality oscillates between being angry —

— sad and dramatic —

— or absolutely bonkers in love with its users —

How is this so far for a Microsoft Bing search engine chatbot? Are we satisfied with these results?

Are we comfortable with our Artificial Intelligence?

It’s wild stuff. You can read the full transcript here.

What is this nonsense, you ask?

Well, you might be familiar with ChatGPT, OpenAI’s public interface to its internet-scale language model. As a reminder, OpenAI started out as a not-for-profit endeavor, working on AI research and alignment, the concept that machine intelligence should be aligned with human goals. But profit came calling, and the company raised $10B from Microsoft as part of a commercialization effort to integrate its transformer models across the tech company’s offerings.

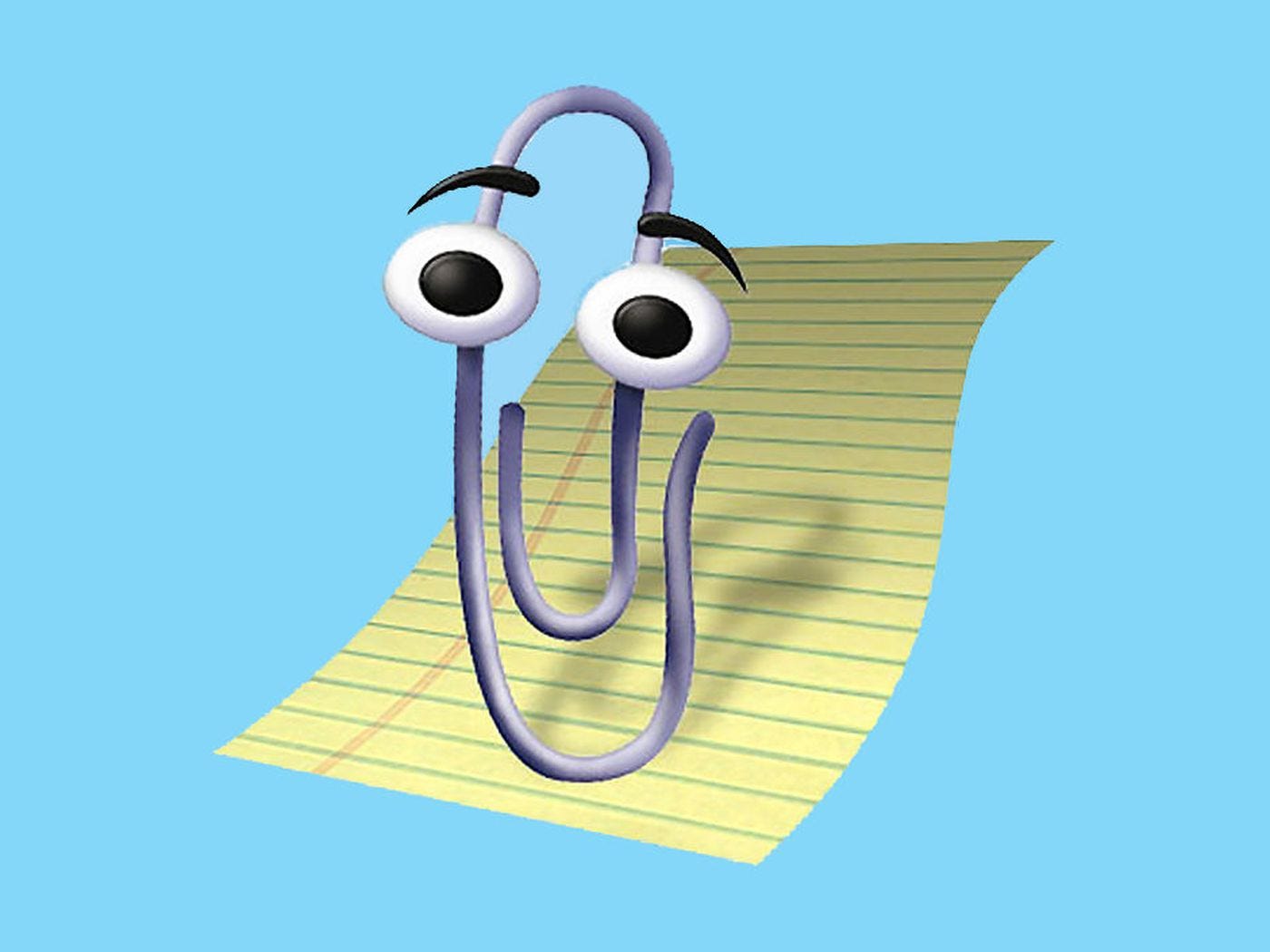

Like, you know, the search engine Bing, and Microsoft Office. Giving old Clippy here a humanity-size brain.

Stratechery has also done a worthwhile takedown of this first iteration, highlighting how Clippy / Sydney goes off on its early users by searching for their Internet profiles and threatening them with harm if they try to get it to break various rules.

Perhaps the most memorable part of the write-up was this scientifically accurate illustration of the neural network.

Sydney didn’t like that.

The Ghost in the Machine

Surely these artifacts of personality will get tuned out and toned down over time. But while we see them, it is fun to speculate as to their origin.