AI: Stripe & OpenAI agentic commerce protocol; NVIDIA small language models

Who will own the Internet economy?

Hi Fintech Futurists —

Today’s agenda is below.

AI: Stripe and OpenAI Agentic Commerce

AI: NVIDIA argues that the industry’s LLM investment is misallocated, and that Small Language Models (SLMs) with fewer than 10B parameters can handle most agent tasks at parity or better, at ~10–30x lower cost. In finance, SLMs fit repetitive workflows (categorization, compliance, service, risk) while boosting data sovereignty, security, and agility.

CURATED UPDATES: Machine Models, AI Applications in Finance & Investment Outlook

To support this writing and access our full archive of IPO primers, financial analyses, and guides to building in the Fintech & DeFi industries, see subscription options here. Our current price point is only $2/week.

Thanks for your time and attention,

Lex Sokolin

AI: Stripe and OpenAI Agentic Commerce

Quick write-up on this one as the news just hit.

Just a few weeks back, we looked at Google partnering up with 60 different companies, including Coinbase, to launch their Agent Payments Protocol.

Well, yesterday the news came out that Stripe and OpenAI have launched their Agentic Commerce Protocol to try and lock down this emerging sector. A bit more detail below:

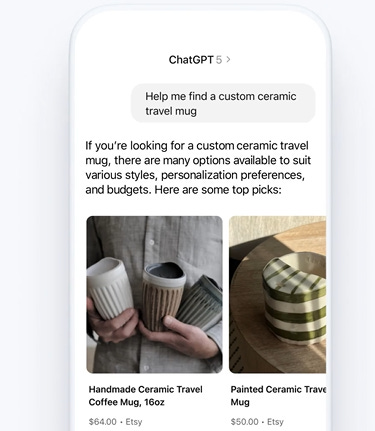

Product: Stripe is powering Instant Checkout in ChatGPT, allowing US users to purchase from Etsy merchants (and soon Shopify merchants like Glossier, Vuori, and SKIMS) directly within the chat interface. The service uses the Agentic Commerce Protocol (ACP), a new standard co-developed by Stripe and OpenAI, which enables ChatGPT to act as a buyer’s AI agent. The system uses Shared Payment Tokens (SPTs) that allow ChatGPT to initiate payments without exposing payment credentials, with tokens scoped to specific merchants and cart totals.
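To make the scoping idea concrete, here is a minimal sketch of how a token limited to one merchant, one cart total, and a short time window might be checked before a charge goes through. The class and field names (`ScopedToken`, `merchant_id`, `max_amount_cents`, `expires_at`) and the `can_charge` helper are hypothetical. This is not Stripe's actual Shared Payment Token API, only an illustration of why the agent never needs to see the underlying card.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ScopedToken:
    """Hypothetical stand-in for a Shared Payment Token; not Stripe's actual API."""
    token_id: str
    merchant_id: str        # the only merchant allowed to redeem this token
    max_amount_cents: int   # upper bound, e.g. the cart total shown at checkout
    currency: str
    expires_at: float       # Unix timestamp after which the token is unusable


def can_charge(token: ScopedToken, merchant_id: str, amount_cents: int,
               currency: str, now: Optional[float] = None) -> bool:
    """Return True only if the requested charge stays inside every scope on the token."""
    now = time.time() if now is None else now
    return (
        merchant_id == token.merchant_id
        and currency == token.currency
        and 0 < amount_cents <= token.max_amount_cents
        and now < token.expires_at
    )


tok = ScopedToken("spt_demo", "etsy_shop_42", 4_500, "usd", expires_at=time.time() + 900)
print(can_charge(tok, "etsy_shop_42", 4_500, "usd"))  # True: matches merchant and cart total
print(can_charge(tok, "other_shop", 4_500, "usd"))    # False: wrong merchant
print(can_charge(tok, "etsy_shop_42", 9_999, "usd"))  # False: exceeds the scoped amount
```

The design choice is that the token itself carries its limits, so even if an agent leaks or misuses it, it cannot be redeemed at another merchant or for more than the agreed total.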

Financial & Business Model: This represents a new revenue model for commerce through ChatGPT, with Stripe helping OpenAI cash in on AI-enabled transactions. The partnership extends Stripe’s existing relationship with OpenAI, which began in 2023 when OpenAI adopted Stripe Billing and Checkout for ChatGPT Plus subscriptions. Merchants retain full control over products, pricing, brand presentation, order fulfillment, and payment processing.

Industry Evolution: The announcement marks a big bet on agentic commerce, where AI agents act on behalf of buyers. ACP provides standardization so businesses can participate with a single integration rather than maintaining separate connections to multiple AI agents. Stripe is positioning itself as the economic infrastructure for AI, having launched agent toolkits and infrastructure over the past year.
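The "single integration" argument is essentially connection arithmetic. A rough sketch, assuming that without a shared standard every merchant would need a bespoke connection to every AI agent it wants to sell through (the merchant and agent counts are illustrative):

```python
def point_to_point(merchants: int, agents: int) -> int:
    """Bespoke integrations: every merchant wires up every agent separately."""
    return merchants * agents


def shared_protocol(merchants: int, agents: int) -> int:
    """One shared standard: each merchant and each agent implements it once."""
    return merchants + agents


for m, a in [(1_000, 5), (10_000, 10)]:
    print(f"{m:,} merchants x {a} agents: "
          f"{point_to_point(m, a):,} bespoke integrations vs "
          f"{shared_protocol(m, a):,} via a common protocol")
```

The gap grows multiplicatively with the number of agents, which is why whoever sets the standard gets outsized leverage over the sector.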

We know that Stripe is also building Tempo, a blockchain focused on stablecoin payments, and has spent $1B+ on acquisitions to compete here. So there are now two core competing camps in this sector: Google's Agent Payments Protocol coalition and Stripe/OpenAI's ACP.

What’s notable is that Big Tech is now better at pioneering the fintech frontier than Fintech / Crypto VCs. Both the Google and OpenAI opportunities are beyond the reach of venture investors, which means it is difficult for startups to grow here without a proprietary advantage in distribution or tech.

AI: A $57 Billion Miscalculation? Small Language Models Could Upend AI's Infrastructure Bet

The financial industry has poured billions into LLM infrastructure. NVIDIA's new research suggests they're solving the wrong problem.

$57 billion has already been invested in infrastructure built specifically to serve large models through LLM API endpoints. But could this be a misallocation of capital?

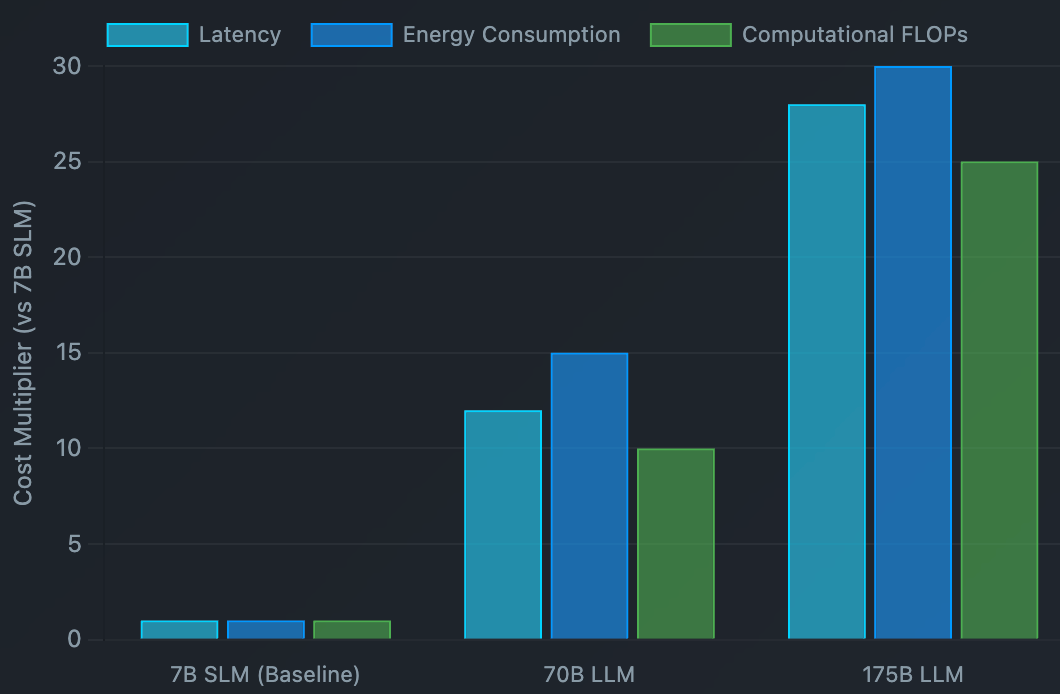

NVIDIA argues that Small Language Models (SLMs), under 10 billion parameters, are not just good enough for most AI agent tasks, but could actually be superior. According to the research, serving a 7-billion-parameter SLM is 10-30 times cheaper in latency, energy consumption, and computational operations (FLOPs) than serving a 70-175-billion-parameter LLM.
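Where does a range like 10-30x come from? A common rule of thumb (our assumption, not a figure taken from NVIDIA's paper) is that a dense transformer spends roughly 2 × N floating-point operations per generated token, where N is the parameter count. Under that approximation, compute per token scales linearly with model size:

```python
def flops_per_token(params: float) -> float:
    """Rule-of-thumb forward-pass cost for a dense transformer: ~2 FLOPs per parameter per token."""
    return 2 * params


slm = 7e9
for llm in (70e9, 175e9):
    ratio = flops_per_token(llm) / flops_per_token(slm)
    print(f"{llm / 1e9:.0f}B vs 7B: ~{ratio:.0f}x more compute per token")
```

On compute alone this gives roughly 10x and 25x. Real latency and energy also depend on memory bandwidth, batching, and hardware, which is why such multiples are quoted as loose ranges.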

This could be an order-of-magnitude disruption for the economics of AI deployment.

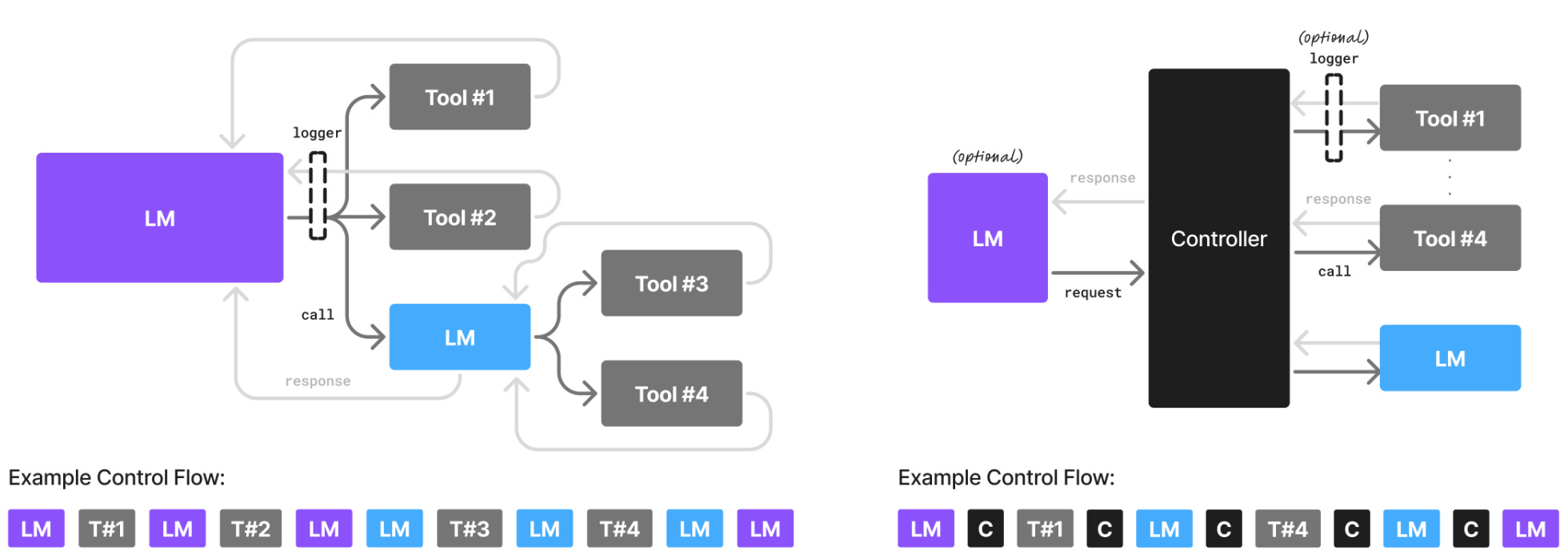

An illustration of agentic systems with different modes of agency. Left: The language model acts both as the Human-Computer Interaction (HCI) layer and the orchestrator of tool calls to carry out a task. Right: The language model (optionally) fills the role of the HCI while dedicated controller code orchestrates all interactions.
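The right-hand pattern is the one that favours SLMs: the model only turns a request into a structured intent, while ordinary code decides which tool runs. A minimal sketch, where `parse_intent` is a hypothetical stand-in for a small-model call (here just keyword matching) and the tools are stubs:

```python
from typing import Callable, Dict


def parse_intent(user_text: str) -> str:
    """Stand-in for an SLM call that maps free text to one intent label (hypothetical)."""
    text = user_text.lower()
    if "balance" in text:
        return "get_balance"
    if "refund" in text:
        return "start_refund"
    return "unknown"


# Stub tools; in a real system these would call internal services.
TOOLS: Dict[str, Callable[[str], str]] = {
    "get_balance": lambda account: f"Balance for {account}: $1,234.56",
    "start_refund": lambda account: f"Refund ticket opened for {account}",
}


def controller(user_text: str, account: str) -> str:
    """Deterministic orchestration: code, not the model, decides which tool runs."""
    intent = parse_intent(user_text)
    tool = TOOLS.get(intent)
    if tool is None:
        return "Escalating to a larger model or a human agent."
    return tool(account)


print(controller("What's my balance?", "acct-001"))
print(controller("I want a refund", "acct-001"))
print(controller("Explain my portfolio risk", "acct-001"))
```

Because the model's job shrinks to picking from a small, fixed set of intents, a much smaller model can do it reliably, and the branching logic stays in auditable code.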

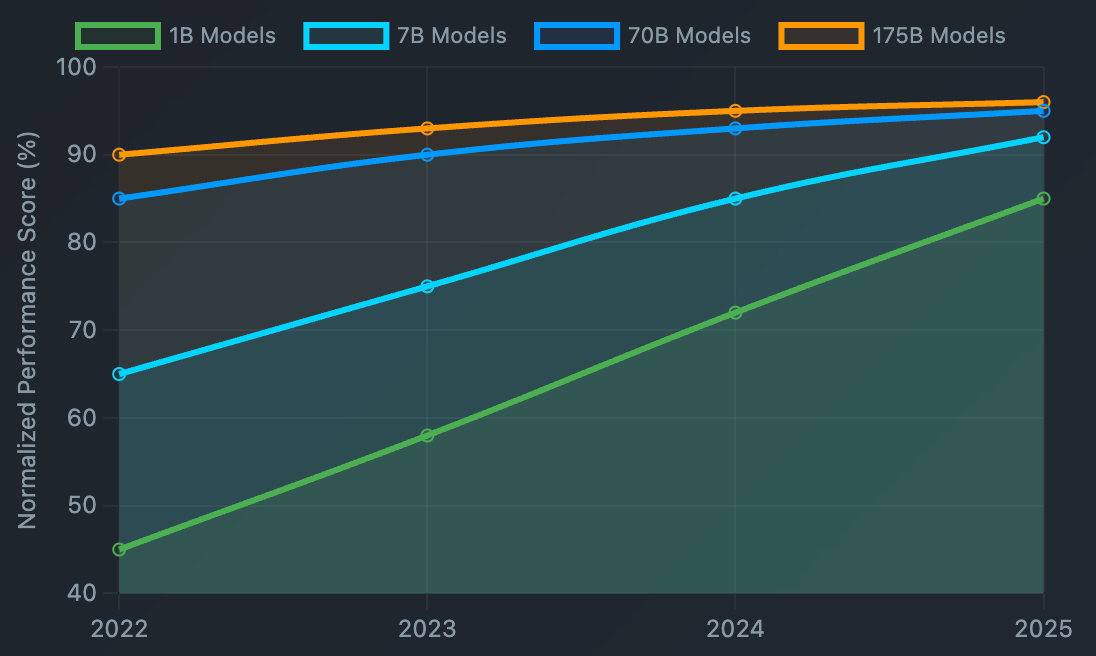

The conventional wisdom has been that bigger models mean better performance. But NVIDIA's research shows that smaller models perform quite well in agentic use cases.

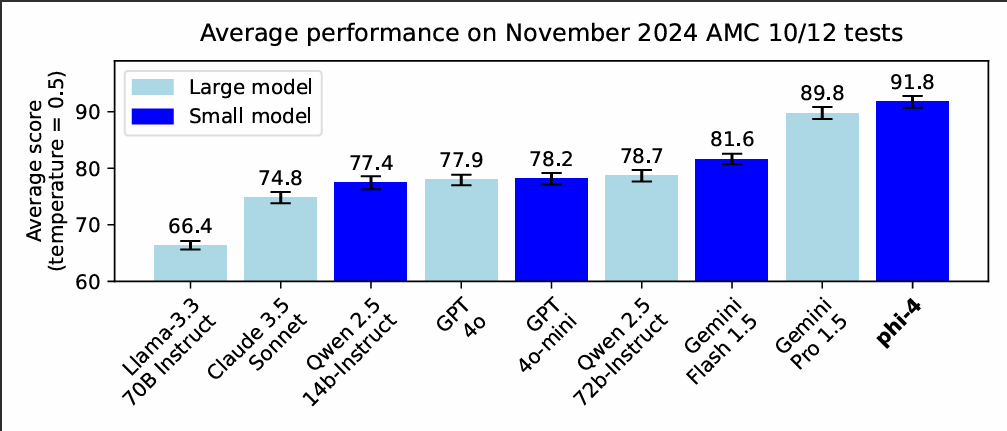

Microsoft's Phi-3 (7B parameters) achieves performance comparable to models up to 70B parameters of the same generation

DeepSeek's R1-Distill-Qwen-7B outperforms Claude-3.5-Sonnet and GPT-4o on reasoning tasks

Hugging Face's SmolLM2 models (125M-1.7B parameters) match 70B models from just two years ago

Below, you can see how performance is converging toward an asymptote, even though the number of parameters is much smaller.

MIT Technology Review included SLMs in its 2025 list of 10 Breakthrough Technologies, and you can understand why.

How SLMs Can Be Used in Finance

Here's where NVIDIA's argument becomes particularly relevant for fintech applications. The researchers analysed popular open-source agents and found that 60-70% of LLM queries could be handled by specialised SLMs.

In financial services, AI agents are increasingly deployed for (1) Transaction categorisation and routing, (2) Compliance monitoring and reporting, (3) Customer service automation, (4) Risk assessment workflows. They plug into existing automation systems within enterprise software.
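Part of what makes these workflows SLM-friendly is that the output is a fixed label consumed by other software, so it can be checked mechanically. A minimal sketch for the transaction-categorisation case, assuming a hypothetical category set and an SLM prompted to reply in JSON; anything malformed, off-list, or low-confidence falls back to a review queue:

```python
import json
from typing import Optional

# Hypothetical label set for a bank's transaction ledger.
ALLOWED_CATEGORIES = {"groceries", "rent", "payroll", "transfer", "fees"}


def validate_category(raw_model_output: str, min_confidence: float = 0.8) -> Optional[str]:
    """Accept an SLM's JSON answer only if it is well-formed, in-vocabulary and confident.

    Returns the category, or None to signal that the transaction should go to review.
    """
    try:
        data = json.loads(raw_model_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    category = data.get("category")
    confidence = data.get("confidence")
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        return None
    if not isinstance(confidence, (int, float)) or confidence < min_confidence:
        return None
    return category


print(validate_category('{"category": "rent", "confidence": 0.93}'))    # rent
print(validate_category('{"category": "crypto", "confidence": 0.99}'))  # None: not an allowed label
print(validate_category("Sure! This looks like rent."))                 # None: not JSON
```

A narrow, checkable output contract like this is what lets a small model slot into existing enterprise automation without a large model supervising it.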

Many of these tasks are repetitive and non-conversational. As a result, they are good candidates for SLMs, which handle narrow, well-defined tasks well without needing broad research or creativity. For financial institutions, SLMs offer advantages beyond cost. Smaller models mean:

Enhanced data sovereignty: Models can run on-premise or on edge devices, crucial for regulatory compliance

Reduced attack surface: Smaller parameter spaces are easier to audit and secure

Faster adaptation: Fine-tuning SLMs requires only a few GPU-hours rather than weeks, enabling rapid business updates
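One reason fine-tuning can be this cheap is parameter-efficient methods such as LoRA, which freeze the base weights and train a low-rank update. For a d × k weight matrix, LoRA with rank r trains r(d + k) parameters instead of d·k. A back-of-the-envelope sketch, assuming LoRA is the method used, a 4096 × 4096 projection (a common size in ~7B models), and rank 8:

```python
def lora_trainable(d: int, k: int, r: int) -> int:
    """Parameters trained by LoRA: B (d x r) plus A (r x k)."""
    return r * (d + k)


def full_trainable(d: int, k: int) -> int:
    """Parameters trained when fine-tuning the full d x k matrix."""
    return d * k


d = k = 4096
r = 8
lora, full = lora_trainable(d, k, r), full_trainable(d, k)
print(f"LoRA r={r}: {lora:,} params vs full: {full:,} ({lora / full:.2%} of the matrix)")
```

Training well under 1% of each adapted matrix is a large part of why domain updates (new transaction categories, new compliance rules) can be turned around quickly on a small model.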

Microsoft's recent release of Phi-4, which outperforms larger models on math-related reasoning, is particularly relevant for quantitative finance applications.

NVIDIA's research doesn't advocate for abandoning LLMs. Instead, it proposes mixed systems where SLMs handle routine tasks while LLMs are reserved for complex reasoning, which requires a broad and up-to-date context. This, in turn, could lead to a 10-30x cost reduction on the majority of AI operations.
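It is worth being precise about what "a 10-30x cost reduction on the majority of AI operations" implies for the total bill. A quick sketch, assuming a share of calls moves to an SLM that is some multiple cheaper per call than the LLM (both values below are illustrative, not figures from the paper):

```python
def relative_cost(slm_share: float, speedup: float) -> float:
    """Total inference cost of a hybrid system relative to an all-LLM baseline.

    slm_share: fraction of calls served by the SLM (0..1)
    speedup:   how many times cheaper an SLM call is than an LLM call
    """
    if not 0.0 <= slm_share <= 1.0 or speedup <= 0:
        raise ValueError("slm_share must be in [0, 1] and speedup > 0")
    return slm_share / speedup + (1.0 - slm_share)


for share in (0.6, 0.7):
    for speedup in (10, 30):
        print(f"{share:.0%} of calls on SLM, {speedup}x cheaper -> "
              f"{relative_cost(share, speedup):.0%} of baseline cost")
```

Under these assumptions the overall bill falls by roughly 2-3x rather than 10-30x, because the LLM calls that remain dominate spend. The headline multiple applies to the routed share, which is still a meaningful saving at scale.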

The proposed implementation can take the following steps:

Current State: Users rely on LLMs for most tasks

Data Collection: Log and analyse task patterns

Task Clustering: Identify SLM-suitable operations

Hybrid Deployment: SLMs for routine, LLMs for complex

Optimisation: Continuous improvement loop
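The data collection and task clustering steps can be prototyped with very little machinery. The sketch below is our own simplification, not NVIDIA's procedure: it masks digits to collapse logged prompts into templates (a crude stand-in for embedding-based clustering) and flags high-frequency templates as candidates for a specialised SLM. `find_slm_candidates`, the sample log, and the 20% threshold are all hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List


def template_of(prompt: str) -> str:
    """Crude clustering key: lowercase, mask every number, normalise whitespace."""
    prompt = re.sub(r"\d+", "<n>", prompt.lower())
    return re.sub(r"\s+", " ", prompt).strip()


def find_slm_candidates(log: List[str], min_share: float = 0.2) -> Dict[str, float]:
    """Return templates whose share of logged calls is at least min_share."""
    counts = Counter(template_of(p) for p in log)
    total = sum(counts.values())
    return {t: c / total for t, c in counts.most_common() if c / total >= min_share}


# Hypothetical log of prompts that currently go to a large model.
log = [
    "Categorise transaction 1042 amount 12.50",
    "Categorise transaction 1043 amount 980.00",
    "Categorise transaction 1044 amount 7.99",
    "Flag KYC document 55 for expiry",
    "Flag KYC document 56 for expiry",
    "Draft a memo on our 2026 rate outlook",
]

for template, share in find_slm_candidates(log).items():
    print(f"{share:.0%}  {template}")
```

The high-share, narrow templates (categorisation, document checks) are the ones worth fine-tuning an SLM on, while the long tail of open-ended requests stays with the LLM; re-running this on fresh logs is the continuous improvement loop.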

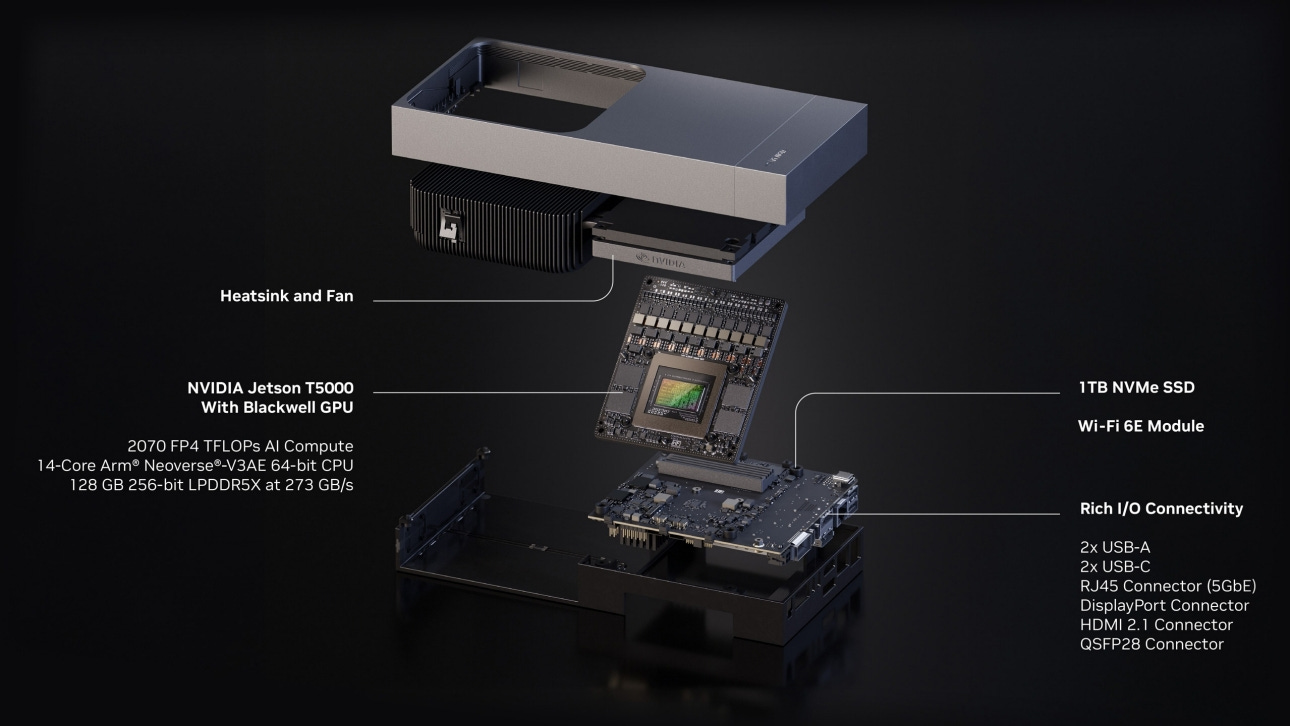

NVIDIA is optimising its platform stack for SLM agents across various footprints. Along with Microsoft, they have announced that Windows Copilot Runtime will expose GPU-accelerated APIs for SLMs and RAG on RTX AI PCs, so desktop apps can call local SLMs via standardised interfaces. In edge computing and robotics, their bet is Jetson Thor: real-time inference for compact models in robots, stores, and industrial endpoints.

And within data centers, NVIDIA has launched Dynamo, a low-latency, distributed inference framework that, paired with Blackwell, delivers up to 30x more requests/sec when serving DeepSeek-R1. NVIDIA is positioning itself this way to expand its TAM beyond Big Tech hyperscalers.

The company claims that "shifting the paradigm from LLM-centric to SLM-first architectures represents to many not only a technical refinement but also a Humean moral ought" in promoting sustainable AI deployment. This points to the immense capital and energy costs required to stay in the LLM race.

We don’t think NVIDIA is an environmental charity, but this is an important narrative for defending their technology stack and showing a more efficient path toward sector-focused implementation. Similar sentiment was in the air in response to the launch of DeepSeek last year, and the net effect has been more AI everywhere, not less.


Curated Updates

Here are the rest of the updates hitting our radar.

Machine Models

Ethical and Bias Considerations in Artificial Intelligence/Machine Learning - Matthew G. Hanna & Liron Pantanowitz & Brian Jackson & Octavia Palmer & Shyam Visweswaran & Joshua Pantanowitz & Mustafa Deebajah & Hooman H. Rashidi

A Critical Field Guide for Working with Machine Learning Datasets - Sarah Ciston & Mike Ananny & Kate Crawford

AI Applications in Finance

⭐ AI-Driven Payment Systems: From Innovation To Market Success - Merve Ozkurt Bas

The Rise Of Generative AI Agents In Finance: Operational Disruption And Strategic Evolution - Inesh Hettiarachchi

Financial Modeling in Corporate Strategy: A Review of AI Applications For Investment Optimization - Olufunmilayo Ogunwole & Ekene Cynthia Onukwulu & Micah Oghale Joel & Ejuma Martha Adaga & Augustine Ifeanyi Ibeh

Investment Outlook

⭐ Private Equity Outlook 2025: Is a Recovery Starting to Take Shape? - Bain & Company

⭐ Global Venture Capital Outlook: The Latest Trends - Bain & Company

⭐ Global Private Markets Report 2025: Braced for shifting weather - McKinsey & Company

🚀 Postscript

Sponsor the Fintech Blueprint and reach over 200,000 professionals.

👉 Reach out here.

Check out our new AI products newsletter, Future Blueprint. (Don’t tell anyone)

Read our Disclaimer here — this newsletter does not provide investment advice

Contributors: Lex, Laurence, Matt, Farhad, Daniel, Michiel, Luke

For access to all our premium content and archives, consider supporting us with a subscription. In addition to receiving our free newsletters, you will get access to all Long Takes with a deep, comprehensive analysis of Fintech, Web3, and AI topics, and our archive of in-depth write-ups covering the hottest fintech and DeFi companies.