Analysis: Learning from the $500B AI crash and 1873 Railroad Bond panic

DeepSeek challenges the $11B of GPU-backed debt

Gm Fintech Architects —

Today we are diving into the following topics:

Summary: In this article, we examine the recent $500B market cap loss at NVIDIA, and the blow to OpenAI’s position, triggered by the emergence of DeepSeek, a Chinese AI model that rivals OpenAI’s capabilities at a fraction of the cost. DeepSeek’s reported $6M training expense, compared with the hundreds of millions spent by OpenAI, challenges the economic efficiency of large-scale AI investment and raises concerns about the sustainability of GPU demand. Additionally, CoreWeave and other GPU cloud providers have taken on $11B in debt to finance data center expansion, creating systemic financial risk if AI demand fails to meet expectations. Historical parallels, such as the 1873 railroad bond collapse, illustrate the dangers of over-leveraged infrastructure investment without clear revenue streams.

Topics: NVIDIA, OpenAI, DeepSeek, Microsoft, CoreWeave, Lambda Labs, Crusoe, Blackstone, Pimco, Carlyle, BlackRock

Long Take

OpenAI and NVIDIA take a $500B hit

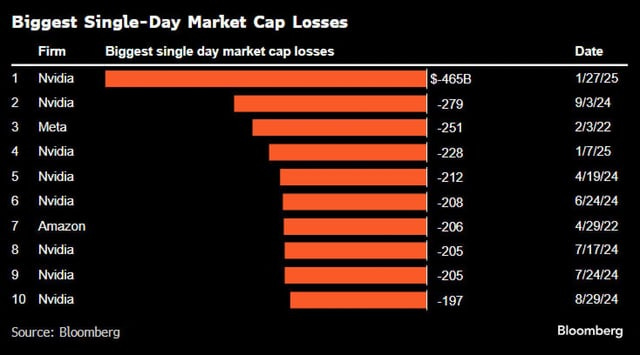

You’ve seen this chart: NVIDIA losing $500B of market cap and leading a $1T+ sell-off across markets a few days ago.

Why?

Here’s “the reason” on paper — it’s called DeepSeek. So what is it exactly?

At a high level, DeepSeek R1 is a model, released by a Chinese quantitative trading firm, that rivals the very best of what OpenAI has to offer. Unlike the dedicated AI labs in the US, this firm was set up to use AI to select financial investments. In the process, it acquired a large number of GPUs and solved several hard technical problems, such as incorporating reinforcement learning, that allowed it to train a very successful model.

The chart below compares R1’s benchmark performance against o1, OpenAI’s “chain-of-thought” reasoning model. o1 is the one that takes longer to answer but breaks problems down into pieces and plans out how to execute them.

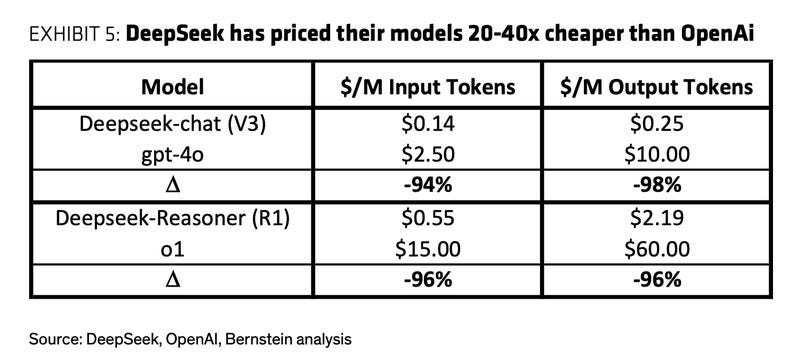

There are many conspiracy theories floating around the Internet. Quite a few technical people believe the results are real: even though DeepSeek used less sophisticated GPUs, it was simply able to use them far more efficiently. The math from Bernstein below shows why this is a “problem” for the current commercial approach of the large AI companies.

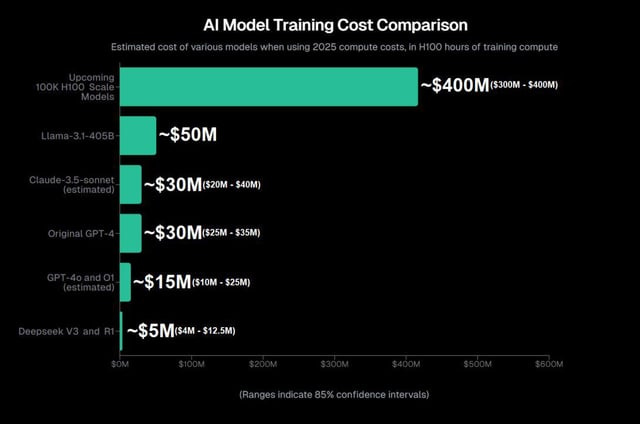

If you can train this model for $6MM while OpenAI spends several hundred million training a comparable one, there is a clear competitive and economic problem. The entire $500B GPU initiative from the United States looks like a large industrial joke in this context. Instead of throwing more hardware at the problem, just be smarter! A 90%+ reduction in the GPU cost of training these models could suggest a 90% decline in the stock price of GPU manufacturers, right? Well … it’s possible that DeepSeek actually paid a lot more than $6MM for its training run, by having to purchase $50MM+ of GPUs upfront and training several times. We don’t know.
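To put rough numbers on the “90%+” claim, here is a quick back-of-the-envelope sketch. The inputs are assumptions, not reported figures: $300MM is a hypothetical stand-in for OpenAI’s “several hundred million”, and three runs is a hypothetical reading of DeepSeek “training several times” on top of $50MM of GPUs bought upfront.

```python
def cost_reduction(new_cost: float, old_cost: float) -> float:
    """Fraction saved by new_cost versus old_cost (0.9 means 90% cheaper)."""
    return 1 - new_cost / old_cost

RUN_COST = 6e6        # the ~$6MM headline figure for one training run
OPENAI_COST = 300e6   # hypothetical stand-in for "several hundred million"
GPU_PURCHASE = 50e6   # the "$50MM+" of GPUs possibly bought upfront
RUNS = 3              # hypothetical number of training runs

# Headline view: one run at the reported cost
headline = cost_reduction(RUN_COST, OPENAI_COST)
# All-in view: upfront hardware plus several runs
all_in = cost_reduction(GPU_PURCHASE + RUNS * RUN_COST, OPENAI_COST)

print(f"headline saving: {headline:.0%}")  # prints "headline saving: 98%"
print(f"all-in saving:   {all_in:.0%}")    # prints "all-in saving:   77%"
```

Even under the pessimistic all-in assumptions the gap stays large; the precise percentage swings a lot with inputs we don’t actually know.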

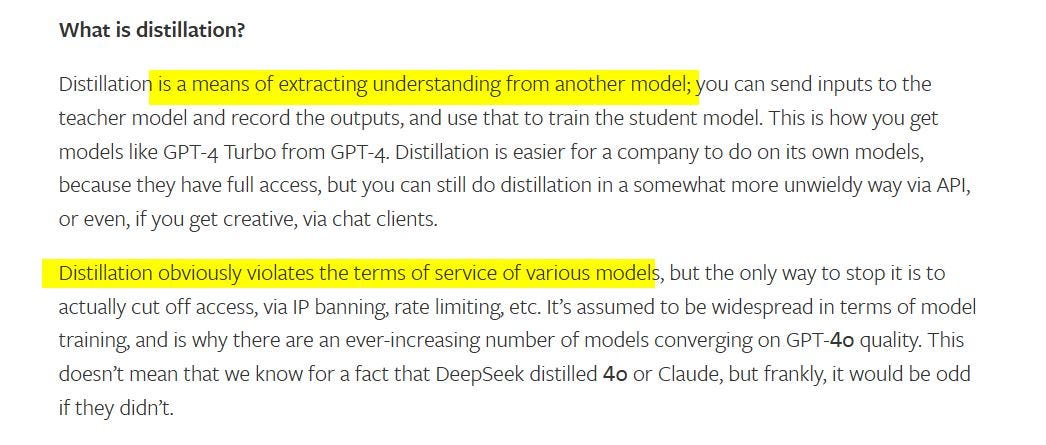

The other side of the conspiracy theories is that DeepSeek used the outputs of OpenAI’s model to train its own, in effect compressing the “original” model through a process called distillation. OpenAI and other companies do the same to their own big models to produce cheaper versions for others to use.
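For readers curious about the mechanics, here is a minimal sketch of textbook knowledge distillation with soft targets: a small “student” model is trained to match the probability distribution of a large “teacher”. The scores and the temperature of 2.0 are made-up illustrative values, and this shows the generic technique only, not a claim about what DeepSeek or OpenAI actually ran.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2.

    Training the student to push this toward zero makes it imitate the teacher.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]        # hypothetical scores from a large model
close_student = [3.8, 1.1, 0.4]  # a student that already mimics the teacher
far_student = [0.5, 1.0, 4.0]    # a student that disagrees with the teacher

print(distillation_loss(teacher, close_student))  # small
print(distillation_loss(teacher, far_student))    # much larger
```

The T² factor is the conventional scaling from the standard distillation recipe, which keeps the loss at a comparable magnitude as the temperature changes.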

Here’s Stratechery on what this means, and an associated Financial Times headline to fuel the fire.

How ironic! Just as OpenAI scraped the Internet for content to train its machine brain, DeepSeek may have scraped OpenAI to train its own. Maybe, maybe not. Copyright seems dead. The positive flip side, of course, is that these models are now open source. That means anyone can run them on their own infrastructure. It could be you or me. It could be Goldman Sachs and JP Morgan. It could be OpenAI itself. The floor for the entire industry has been raised.

The market sell-off, in our view, is completely wrong. Yes, tech companies are overextended, both in valuation and in their weight relative to the rest of the US market. And yes, the cost paradigm has changed too.

Instead of a large monopolistic outcome, where the big tech companies get to win all the spoils of the AI platform shift through regulatory capture, we can have a boom in applications powered by the open-source variants of these models, which are now as good as or better than anything you can get elsewhere.

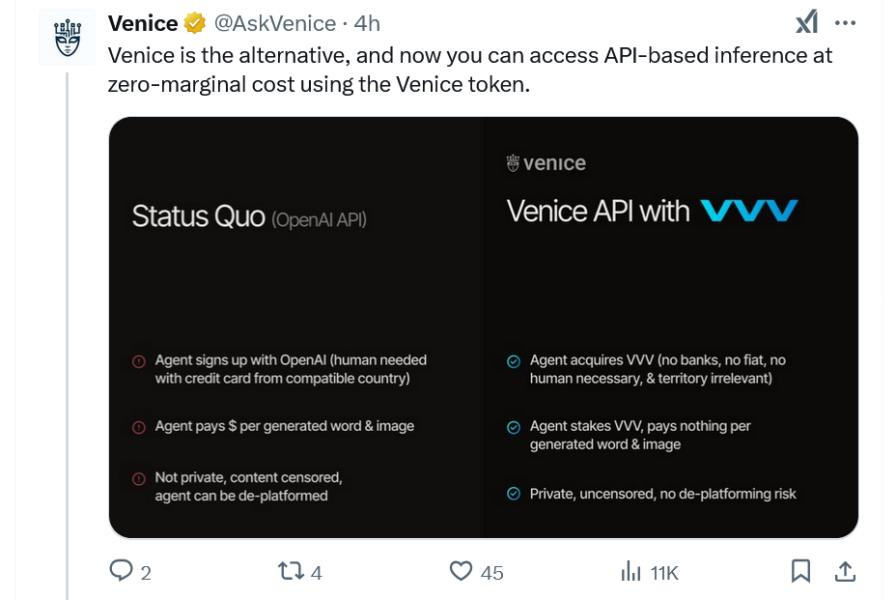

Such models will be hosted on decentralized networks and applications, like Venice or Hyperbolic, where they are already available today.

This in turn creates amazing opportunities for builders. Further, on-device models are likely to become extremely performant and powerful, removing the need to connect to the cloud in the first place. Distilled versions of R1 even run on a Raspberry Pi.

In all cases, we think demand for GPUs will skyrocket like never before as the entire machine world becomes “smart”. We, and our children, will expect intelligence attached to everything we build. Because it is possible.

And when you zoom out further, did NVIDIA really collapse?

If anything, the current market correction is in line with the investment banking view: infrastructure is expensive, and bank analysts cannot imagine applications arriving that generate enough revenue to pay back the initial investment.
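The bankers’ doubt boils down to a payback question: how much revenue does GPU infrastructure have to earn just to service the debt that bought it? Here is a minimal sketch using the standard loan-amortization (annuity) formula. Only the $11B of GPU-backed debt comes from this article; the 10% interest rate, the 4-year useful life of the chips, and the 50% cash margin are hypothetical inputs chosen for illustration.

```python
def annual_debt_service(principal: float, rate: float, years: int) -> float:
    """Level yearly payment that fully repays a loan over `years` (annuity formula)."""
    if rate == 0:
        return principal / years
    return principal * rate / (1 - (1 + rate) ** -years)

def revenue_needed(principal: float, rate: float, years: int, margin: float) -> float:
    """Yearly revenue whose cash margin alone would cover the debt service."""
    return annual_debt_service(principal, rate, years) / margin

DEBT = 11e9      # GPU-backed debt cited in this article
RATE = 0.10      # hypothetical interest rate
LIFE_YEARS = 4   # hypothetical useful life of the GPUs (loan repaid over it)
MARGIN = 0.50    # hypothetical cash margin on GPU rental revenue

need = revenue_needed(DEBT, RATE, LIFE_YEARS, MARGIN)
print(f"${need / 1e9:.1f}B of revenue per year")  # prints "$6.9B of revenue per year"
```

We tie the loan term to the chips’ useful life because the collateral depreciates along with them: if demand or rental prices fall short of that revenue line before the loan is repaid, lenders are left holding aging GPUs.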

How good are investment banks at sizing innovation? Why should we care what their analysts believe?

There is something else, however, that keeps us up at night. It is not the geopolitical competition between China and the US, or the number of AI PhDs by country. It is not whether everyone is a spy who has stolen national secrets. It is not the placement of defense ministers on the boards of AI companies to build war machines. OK, maybe a little of that. No, it is something far simpler.

The Hidden Danger

Let’s start with this.

Haha, it can’t be that bad.