AI: Who is responsible if your AI hallucinates financial mistakes? Court rules against bot.

How should companies and regulators protect consumer rights in the incipient age of AI?

Hi Fintech Futurists —

Today we highlight the following:

AI: AI Chatbots, Hallucinations, Consumer Safety and Accountability

CURATED UPDATES: Machine Models, AI Applications in Finance, Infrastructure & Middleware

To support this writing and access our full archive of newsletters, analyses, and guides to building in the Fintech & DeFi industries, subscribe below.

AI: Chatbots, Hallucinations, Consumer Safety and Accountability

A recent lawsuit against Air Canada has set a legal precedent for what happens when a business chatbot provides consumers with inaccurate information.

The American Consumer Bill of Rights states that every consumer has:

The right to safety

The right to choose

The right to be heard

And the right to be informed.

The Consumer Rights Directive similarly protects European consumers. In part, this means businesses are legally obliged to provide “complete and truthful product information so that consumers can make intelligent choices.”

What happens, then, when businesses deploy AI chatbots that provide consumers with inaccurate information? How should companies and regulators protect consumer rights in the emerging AI age, and where does the onus lie should AI chatbots fail to comply with consumer rights? A recent lawsuit against Air Canada has set a legal precedent.

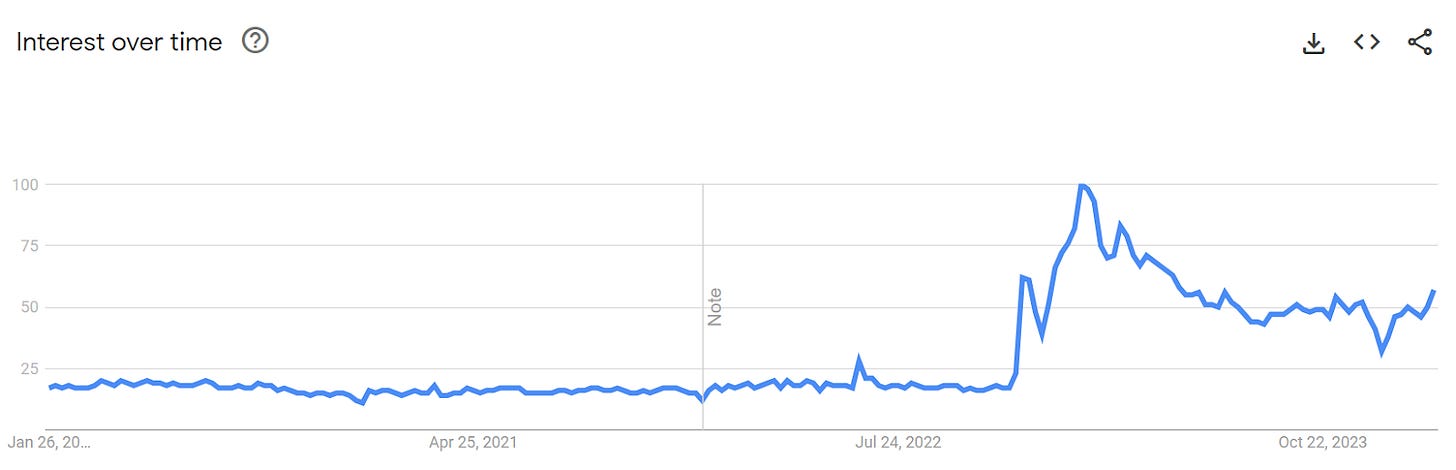

AI chatbot use has increased by 92% since 2019. The primary customer use case for AI chatbots is “getting a quick answer.” However, AI chatbots are well known to hallucinate, that is, to provide false or misleading information. According to the NYT, this can happen anywhere from 3% to 27% of the time. That is highly problematic both for organizations legally bound to provide accurate information and for consumers who want answers that are accurate as well as quick.
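To put the 3% to 27% range in perspective, here is a minimal Python sketch of the arithmetic. The volume of 10,000 conversations is a hypothetical figure chosen for illustration, and the function name is our own. The sketch also assumes the quoted rates apply uniformly to every conversation, which is a simplification.

```python
def expected_wrong_answers(conversations: int, hallucination_rate: float) -> int:
    """Expected number of conversations containing a false answer."""
    return round(conversations * hallucination_rate)

# Hypothetical volume: 10,000 chatbot conversations.
CONVERSATIONS = 10_000

low = expected_wrong_answers(CONVERSATIONS, 0.03)   # lower bound of the quoted range
high = expected_wrong_answers(CONVERSATIONS, 0.27)  # upper bound of the quoted range

print(f"Between {low} and {high} conversations may contain inaccurate information.")
```

Even at the low end of the range, a chatbot at that volume would be expected to give hundreds of customers information the company may later have to stand behind.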

AI chatbot hallucination occurs due to the inherent limitations of large language models (LLMs). Trained on vast amounts of data, chatbots leverage natural language processing and machine learning algorithms to analyze customer queries, discern intent, and formulate relevant responses.

Specifically, today's chatbots are typically built on transformer-based LLMs, the successors to earlier recurrent models such as Long Short-Term Memory (LSTM) networks. These models process sequential data (such as sentences), retain contextual information throughout a conversation, and answer user prompts by predicting the most likely next word based on context. A prediction that is statistically likely is not necessarily true, so the answers can be, and often are, incorrect.
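The idea of "predicting the most likely next word" can be shown with a toy example. The Python sketch below is not how a production chatbot works: it is a simple word-pair (bigram) counter, and the three-sentence corpus is invented for illustration. It does show how a fluent but false answer can emerge purely from word statistics.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; a real LLM is trained on vastly more text.
CORPUS = [
    "refunds are available within 90 days",
    "refunds are available within 90 days",
    "bereavement fares are not refundable after travel",
]

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def complete(counts, prompt, max_words=6):
    """Extend the prompt by repeatedly appending the most frequent follower."""
    words = prompt.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known continuation for the last word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
answer = complete(model, "bereavement fares")
print(answer)
```

The toy corpus says bereavement fares are not refundable after travel, yet the model completes the prompt with the more frequent "are available within 90 days" pattern. The output reads well and is wrong, which is the essence of a hallucination.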

An example of this recently occurred in an Air Canada lawsuit, where the company’s AI chatbot incorrectly informed a customer that he could book a full-price ticket and then apply retroactively for a bereavement fare refund.

In this instance, Air Canada’s official policy states that bereavement fares cannot be claimed retroactively once travel has been completed. However, given the evidence, which included a screenshot of the discussion, British Columbia's Civil Resolution Tribunal held Air Canada accountable for its chatbot's false promise. It ordered the airline to pay the partial refund the chatbot had promised, along with interest on the airfare and tribunal fees.

This decision reinforces the critical legal principle that corporations are directly responsible for their AI's actions under consumer protection laws. The Air Canada case is a catalyst for regulatory bodies and corporations to develop more stringent AI policies and practices. It places financial liability on the corporations that deploy chatbots, giving them a direct incentive to ensure those chatbots provide factual and accurate consumer information. In other words, treat your human and robot workers the same!

A consequent opportunity for the financial industry is to develop insurance products that protect companies from the hallucinations of their AI employees and from the financial exposure those hallucinations may create.


Curated Updates

Here are the rest of the updates hitting our radar.

Machine Models

⭐ AI for Alpha Integrates GenAI into its Investment Process - FinTech Global

AI Applications in Finance

⭐ Generative AI in Fintech Market to Reach 20M by 2032 - Data Horizon

Sora AI Frenzy Prompts Chinese Fintech Firm to Tout ‘Priority’ Access - SCMP

Infrastructure & Middleware

⭐ Tecnotree Completes 14 Simultaneous AI Transformations for Multiple Groups - FinTech Futures

Microsoft Unveils PyRIT for Red Teaming to Revolutionise AI Security - FinTech Global

🚀 Level Up

Sign up to the Premium Fintech Blueprint and in addition to receiving our free newsletters, get access to:

Wednesday’s Long Takes with a deep, comprehensive analysis.

‘Building Company Playbook’ series, offering insider tips and advice on constructing successful fintech ventures.

Enhanced Podcasts with industry leaders, accompanied by annotated transcripts for deeper learning.

Special Reports

Archive Access to an array of in-depth write-ups covering the hottest fintech and DeFi companies.

Join our Premium community and receive all the Fintech and Web3 intelligence you need to level up your career.
