Hi Fintech Architects,

In this episode, Lex interviews Scott Woody, CEO and founder of Metronome, a usage-based billing platform. Scott shares his journey from academia to entrepreneurship, detailing his experiences at UC Berkeley, D.E. Shaw, and Stanford, where he studied biophysics. His tenure at Dropbox, where he tackled billing system challenges, inspired the creation of Metronome. The discussion highlights Metronome's real-time billing data capabilities, which aim to improve business efficiency and customer experience. Scott also explores the broader implications of AI in fintech, emphasizing the shift towards usage-based business models and the importance of real-time data.

Notable discussion points:

Metronome emerged from firsthand frustrations at Dropbox, where Scott Woody experienced how rigid billing systems slowed growth, confused customers, and blocked real-time insights. He built Metronome as a flexible, real-time billing engine that merges usage data with pricing logic—powering the monetization infrastructure for top AI companies today.

Real-time billing isn’t just about invoices—it's a strategic revenue lever. For AI and SaaS businesses alike, Metronome enables teams to run dynamic experiments, optimize GPU allocation, and make last-minute decisions to hit quarterly targets—turning billing into a core growth engine.

The rise of AI is accelerating a shift to usage-based models. As AI becomes specialized labor across verticals (from loan collection to customer service), companies are rapidly replatforming, and entire industries may flip from seat-based to outcome-based pricing within quarters—Metronome is positioned as the "payment processor" for this AI economy.

For those who want to subscribe to the podcast in their app of choice, you can now find us on Apple, Spotify, or RSS.

Background

Prior to Metronome, Scott Woody was Director of Engineering at Dropbox, where he led teams focused on enterprise products and infrastructure.

Earlier in his career, Scott held engineering roles at Palantir and Clarium Capital. He studied physics at UC Berkeley and spent four years in Stanford's biophysics graduate program before founding a startup that was acquired by Dropbox.

At Metronome, Scott drives the technical vision, helping fast-growing software companies scale their billing systems to meet complex business needs.


Topics: Metronome, Dropbox, Datadog, OpenAI, AI, AGI, machine learning, pricing models, financial services, business optimization, operational frameworks, analytics, financial modeling

Timestamps

1’05: Talent Spotting and Swag Strategy: How Scott Woody Recruited the Brightest Minds at Berkeley

7’27: From Biophysics to Startups: How Stanford and Protein Folding Led Scott into Tech

9’28: Lessons from Dropbox: Why Talent Density Beats Perks in Building Scalable Tech Teams

14’09: Solving the Billing Bottleneck: How Metronome Powers Real-Time Monetization for Modern Tech

19’45: Real-Time Revenue Intelligence: How Metronome Helps Teams Optimize Pricing, Experiments, and GPU Allocation

24’55: Optimizing AI Economics: Metronome’s Real-Time Matching of Model Usage, Customer Spend, and GPU Allocation

29’03: The Rise of Usage-Based AI: Why Real-Time Monetization Is Reshaping Business Models Across Tech

34’25: Specialized AI as Labor: Why Purpose-Built Agents Are Shaping the Future of Work and Software Integration

40’46: The Tipping Point for AI Adoption: Why Clear ROI and Competitive Pressure Are Rewriting Entire Industries

48’39: The channels used to connect with Scott & learn more about Metronome

Illustrated Transcript

Lex Sokolin:

Hi, everybody, and welcome to today's conversation. We have a really interesting discussion today with Scott Woody, who is the CEO and founder of Metronome. Metronome is a fascinating company. It's a usage-based billing platform, a really interesting fintech that sits underneath some of the largest AI companies today, and we'll talk about how Scott got there and how it works. Welcome to the podcast.

Scott Woody:

Hey, nice to meet you. I'm very excited to chat.

Lex Sokolin:

So you've got a really interesting academic background as well, from before you went into entrepreneurship and company building. Tell us what you started out doing before you got to money for artificial intelligence.

Scott Woody:

So maybe I'll go way back to college. While I was in college, I was a physics major, and I loved physics, but, and it sounds weird to say, I was also looking for something a little more challenging at times. So in addition to doing physics, I had an almost full-time job working for the hedge fund D.E. Shaw. I wasn't working on the quant side of things; I think that was all headquartered in New York. This was an on-campus job, and really my job was talent spotting. This was the glory days of Facebook, when everything was completely transparent. Basically, I spent a lot of time talking to a lot of the smartest people at UC Berkeley, where I went to school, and identifying who might be good fits for the quant side of D.E. Shaw. Then my job was to get them to come to certain events on campus, and eventually to apply and, hopefully, go work at the hedge fund.

So it was a really interesting job, because in a sense I was getting paid to network. But it was also interesting because you got to study where the best people at Berkeley were, what they were doing, and what the commonalities between them were. You got to identify the hardest classes and the hardest professors, and learn what the whisper network said about who the best people at the school were. A firm like D.E. Shaw only hires the best of the best, so you really wanted to make sure you were identifying the top talent at Berkeley. I did that for two years. It was great. It paid for my entire undergrad.

Lex Sokolin:

Was that qualitative or quantitative? Were you trying to define the patterns of what makes people talented, or was it very qualitative and intuition-driven?

Scott Woody:

It's a good question. It was a lot of both. It definitely started as qualitative, and it's actually how I like to do executive searches now: I just want to go talk to the top ten people, however that's defined, and then look for commonalities between them, what makes them really top. Especially when someone's 19 or 20 years old, it's a very interesting game, because what makes someone really stand out in a class of 2,000 people at Berkeley is going to look pretty different from what makes them stand out as a senior. So I would interview the ten best people, however that was defined, and then try to extract what was common about them. One thing I noticed, maybe obvious in retrospect, was that these people were easily bored, because the classes were not designed for them.

They were designed for a different level of, you know, human. One of my friends, for example, took ten classes a semester. That was literally two and a half times the course load of any other person I've met, and he had to get special exemptions to do it. So you start to come up with these unique, singular traits. You also notice that these people tend to cluster, because they get bored and want to find other people who don't bore them. So if you can get into one part of the network, you can use that to map out the rest of it. As long as you are reasonably friendly and have something that keeps their interest, you can navigate that network relatively quickly.

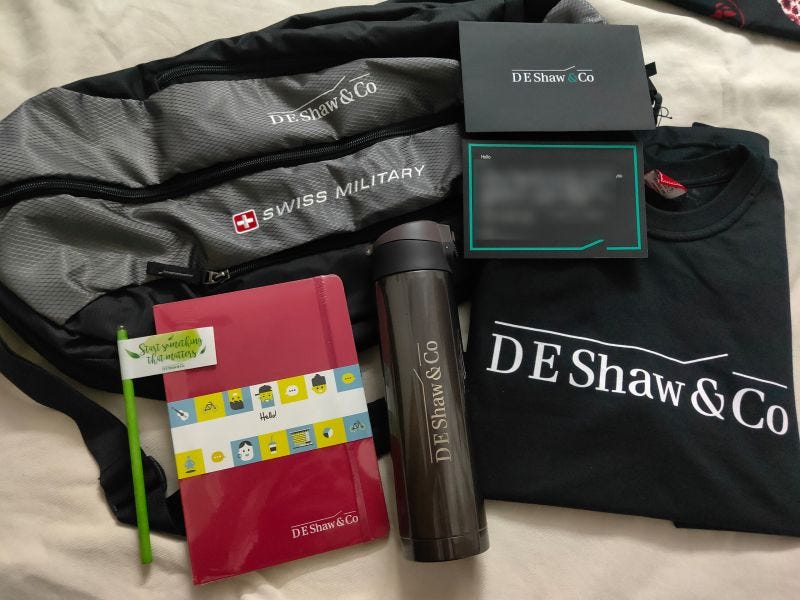

And one thing we found was that swag worked wonders on freshmen and sophomores. We would give out branded Rubik's Cubes, backpacks, and things like that, and that would get even the smartest undergrads to come to your event. What we realized is that swag was basically invented to spearfish for these kinds of people.

Lex Sokolin:

That's hilarious. It's like Pinky and the Brain, right? Even if you're the smartest mouse, you're still going to go for the cheese.

Scott Woody:

Exactly. You just have to find the thing you have that they don't, which in this case was interesting swag. And the other thing about smart people is that they don't like to spend money. On the whole, they tend to be very cheap, especially when they're young.

So if you could offer them pretty good food and some solid swag, they would show up. And it had to be a fun time: you'd come to these events and play chess with a master-level player. It wasn't, come and, I don't know, drink or whatever. So it's a very uniquely designed experience for getting smart people to come work at your company.

Lex Sokolin:

So high powered executive search advice is to have swag at your events.

Scott Woody:

Honestly, it works pretty well. We have found that really well-designed swag can do wonders. There's the very obvious stuff, but, and you can't see it, I'm wearing a Patagonia-branded fleece, which is very nice, and the Metronome logo is written on the collar in the back.

So it's tasteful and hidden, and we find that kind of swag does wonders, whereas a very obviously branded thing is going to get a lot less traction.

Lex Sokolin:

After that D.E. Shaw experience, a study in human nature and existentialism, you went on to do some fairly complex work in grad school at Stanford. Talk us through what drove you there, and then why you moved on.

Scott Woody:

I loved physics, I loved learning, and I thought I loved research, so the logical path for me after undergrad was to go to grad school. I spent four years in the biophysics program at Stanford, studying a set of proteins called motor proteins. When you flex your muscles, there are little engines that consume energy; if you remember ATP from high school biology, the engines that consume it are called myosin. I was studying those, and specifically how to use computational modelling to rewire those molecules so that they move faster, move slower, or move backwards. It was a very interesting thing to go do.

I was actually using software from a guy named David Baker, who won the Nobel Prize this year, and his software was about protein folding. So I was using protein-folding software to computationally design new versions of the motor protein. The challenge was that the theory was very interesting, but the practice was extremely grinding and did not suit my temperament. I think I was looking for something a little faster-paced. So I spent four years there, and at Stanford you can't walk two feet without getting pitched on some startup. I convinced myself that the path away from academia was going to be something in industry, and specifically in tech. So I went back to my roots and said, well, this is very interesting: I spent all this time tracking down and essentially headhunting a bunch of really talented people.

But I was doing it in spreadsheets. So I scratched my own itch and built an applicant tracking system for that kind of work. That was my exit from Stanford: I raised a little bit of money, spent two years working on it, and then got acquired into Dropbox.

Lex Sokolin:

Coming into tech, were there any shockers for you in terms of how large-scale product management works, or how systems at scale are designed? What key lessons did you take away from Dropbox?

Scott Woody:

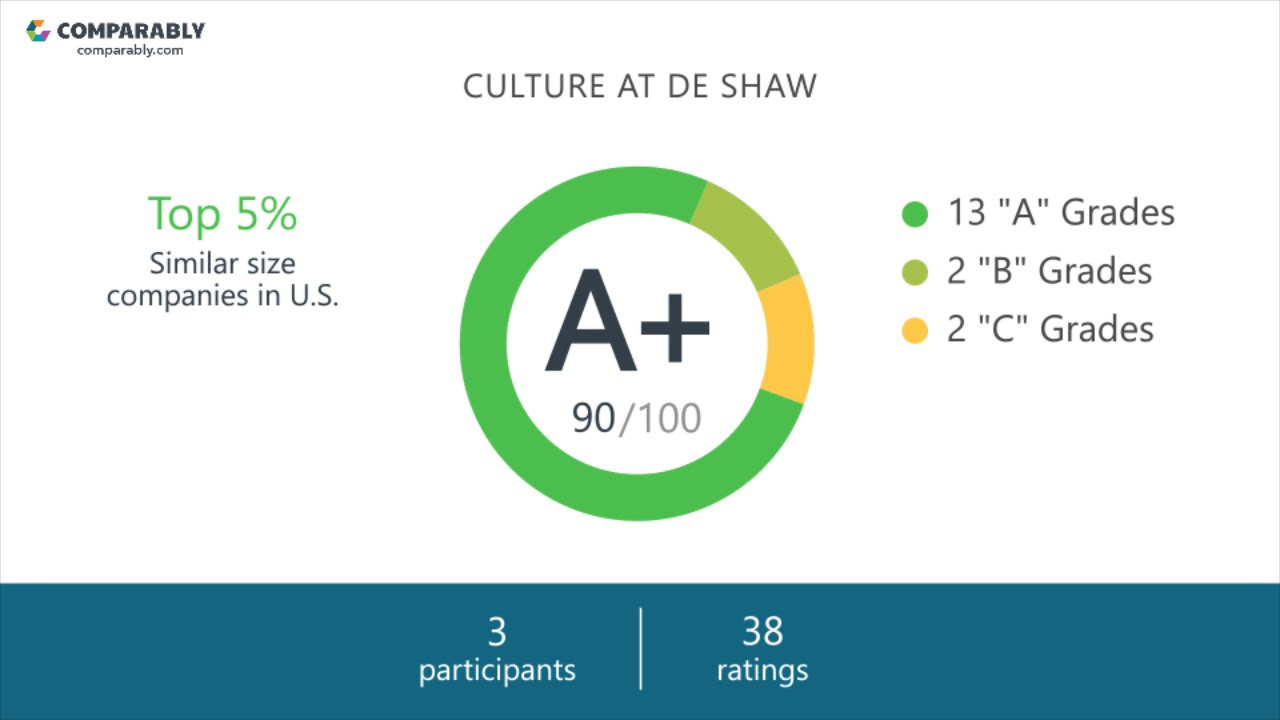

Dropbox taught me everything I know about software; it's where I cut my teeth. I was self-taught, coming from academia, and didn't know how the world worked. At the time, in 2012 and 2013, Dropbox was one of the fastest-growing startups, and getting dropped into it meant I got to see firsthand how excellent software engineering gets done. Of the lessons I took away, the first, honestly, is that density of talent trumps everything.

I like to say that the most valuable thing I got from my time at Dropbox was the network. I joined as roughly the 220th employee, and every single person I worked with or met at that company has gone on to do incredible work at other places. Dropbox had invested for years in building up super-high talent density, which meant the hiring process was brutal. People would come in, interview, get all yeses, and then get rejected because they didn't get any super-strong yes. At first I was very confused, because the person clearly met the bar. But Dropbox held the idea that what matters is hiring talent that continuously raises the bar. If you execute that algorithm successfully for dozens or hundreds of hires, you end up with a group of people who are all super-charged and can do incredible things.

So the first lesson I learned was that you really have to invest in talent to build a product that can scale at the rate Dropbox did. The second, which is a little more interesting, is that 2012 and 2013 was the heyday of companies going really hard on perks for hiring. When I joined, you could schedule a massage every day for free, and the beer in the refrigerator was really expensive, very fancy beer. It felt very over the top, especially coming from grad school, where you're living hand to mouth. What I learned there was that while these perks were nice, they weren't necessary for the people who were there.

In fact, the hardest-driving people were the ones who took advantage of the perks the least. In some sense, I think Silicon Valley mistakenly believed that you need to create a really lavish environment to retain great people, when in reality what you need is really challenging work and really great coworkers to do it with, and the perks don't help with that at all. At a certain point, Dropbox let those perks go, and honestly, retention wasn't affected at all. I think that was a very interesting lesson on the human psychology side.

Lex Sokolin:

How do you square this with the idea that giving people swag brings them in, but giving them more swag doesn't retain them? It's the challenging work that does.

Scott Woody:

It's the difference between like marketing and your actual product, right? You need something to stand out from the crowd and you need to get them to come in the door.

Using the Dropbox analogy: on my first interview day, I walk in the door and there's literally a hill of lobsters bigger than me. It was lobster dinner day, and I was like, whoa, this is a different, weird place. So it brought me in the door, but once I was there, I didn't need that to stay. My takeaway is that you want to deploy these kinds of perks in very tactical ways, but there's a hidden sickness to them. You want them to attract someone in the door, but you don't need them to be a permanent fixture of your culture. So that's how I square those two.

Lex Sokolin:

So you're now in tech, with this really interesting background. Take us through to Metronome. It would be great if you could introduce the core product idea and the current scale of the product.

Scott Woody:

Actually, Metronome starts from my time at Dropbox. While I was there, I worked on a bunch of different things, but one of the key things was what we called monetization, or commercialization. We owned the first 30 days of the Dropbox user experience, and our job was to grow monetization as fast as possible. Over the 3 or 4 years I worked on that, we grew from $300 million to roughly $1.2 billion. My team's job on the engineering side was to build the product experience that would help people understand the value of Dropbox and ultimately decide to pay for it. The work itself was A/B testing, price testing, launching new features, and so on, and there were three core challenges with it that ultimately formed the backbone of what Metronome became.

The first was that we would do pricing experiments all the time. We'd say, let's try a different price in New Zealand as distinct from Australia and see if that has any effect on growth rates. The problem was that we were built on an internally built billing system, and that experiment, even if it was just changing from 8.99 to 9.99, could easily take 6 to 8 weeks of engineering time on the billing system, even though it was a very small change. So the first core problem was that making changes to pricing and packaging at Dropbox was fundamentally slow and very risky. Every time we did it, there would be incidents: customers would end up getting misbilled, or customers would end up complaining. That was problem number one. Problem number two was that we would make a change and customers would get extremely confused. Say we launched a new product as an add-on, so you could optionally buy something. It would suddenly appear on someone's invoice, and the customer would say, I don't remember buying this.
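One common way to attack that first problem is to keep prices in data rather than in billing code, so that a regional price test becomes a configuration change. A minimal sketch, assuming a hypothetical price book with per-country experiment overrides; the plan name, country codes, and prices are illustrative (echoing the 8.99 to 9.99 example), not Dropbox's or Metronome's actual schema.

```python
from decimal import Decimal

# Hypothetical price book: base monthly price per plan (illustrative values).
BASE_PRICES = {"plus": Decimal("8.99")}

# Hypothetical experiment overrides keyed by (plan, country code).
# Testing a new price in one market is a data edit, not a billing-code change.
EXPERIMENT_OVERRIDES = {("plus", "NZ"): Decimal("9.99")}


def monthly_price(plan: str, country: str) -> Decimal:
    """Return the plan's price in a country, preferring an active experiment override."""
    return EXPERIMENT_OVERRIDES.get((plan, country), BASE_PRICES[plan])


print(monthly_price("plus", "AU"))  # 8.99
print(monthly_price("plus", "NZ"))  # 9.99
```

Ending the New Zealand test then means deleting one override entry; the code path that computes prices never changes.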

What is this? Why am I paying an extra $3 a month? And they would file customer support tickets. The problem was that the product experience, the billing dashboard the customer had access to, couldn't see the data inside the billing system, because on the back end the billing system was a set of scripts that ran once a month. So in the middle of the month, the product had no idea what the customer was going to be charged at the end of the month, because the script hadn't run yet. The second major problem Metronome was built to solve, then, was: how do you bring billing data into the product experience? How do you help customers understand the value you're charging them for? The third problem my team ran into at Dropbox was that we were a billing growth team, so we were focused on data and on how to use it to grow faster. The biggest problem was that, again, this billing system was a set of scripts that ran once a month.

That meant the data inside the billing system just wasn't available to us in the middle of the month, so we could not make decisions on it mid-month. We'd have to wait until the end of the month. Actually, it was worse than that: after the month closed, we'd have to wait for the finance team to process the data, and then sometimes 30, 60, or 90 days for it to come back into systems we could actually query. As a growth team, the data inside the billing system was completely inaccessible to us, which meant we didn't make decisions on revenue data. We made decisions based on click-through rates instead of revenue growth rates. So, tying it all together, the three problems I ran into at Dropbox were: one, the billing system wasn't flexible; two, the billing system made it really hard for the end customer to understand the value they were getting.

And three, the billing system made it really hard for my internal team to take action on revenue and usage data. Metronome is really the solution to those three problems rolled into one. Literally what we do is integrate into your product telemetry stream. If you're familiar with Datadog, we operate on a similar type of data. You stream us information, facts, or events that correspond to how your customers are using your product. We consume those events and turn them into useful metrics. We also store the pricing and packaging logic for every one of your customers individually, so that we can, in real time, marry the usage event data to the pricing and packaging data. Then we can tell you exactly how much money each customer will owe at the end of the month. And we store all of that data securely, so you can sleep easy at night knowing your customers are getting billed accurately.

And then, once a month or whenever the customer is actually being billed, we issue an invoice on your behalf. That's literally what Metronome does. By essentially being Datadog married to a pricing engine, we give you continuous, real-time access to all of the information about how your customers are using your product and exactly how much money they're spending on your platform. That's what we do in a nutshell.
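The pipeline Scott describes (ingest usage events, roll them into metrics, apply each customer's own pricing, report the amount owed at any moment) can be sketched in a few lines. This is a hypothetical illustration, not Metronome's API: the `UsageEvent` and `RunningInvoice` names, the `api_calls` metric, and the per-customer rates are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class UsageEvent:
    customer_id: str
    metric: str          # illustrative metric name, e.g. "api_calls"
    quantity: Decimal


# Hypothetical per-customer pricing: unit price for each metric.
RATE_CARDS = {
    "cust_a": {"api_calls": Decimal("0.002")},
    "cust_b": {"api_calls": Decimal("0.0015")},  # e.g. a negotiated lower rate
}


class RunningInvoice:
    """Aggregates usage events as they arrive and prices them on demand."""

    def __init__(self, rate_cards):
        self.rate_cards = rate_cards
        self.usage = defaultdict(Decimal)  # (customer_id, metric) -> total quantity

    def ingest(self, event: UsageEvent) -> None:
        # Turn the raw event stream into running per-customer metrics.
        self.usage[(event.customer_id, event.metric)] += event.quantity

    def amount_due(self, customer_id: str) -> Decimal:
        # Marry the customer's metrics to that customer's own rates, mid-period.
        rates = self.rate_cards[customer_id]
        return sum(
            (qty * rates[metric]
             for (cid, metric), qty in self.usage.items()
             if cid == customer_id),
            Decimal("0"),
        )


invoice = RunningInvoice(RATE_CARDS)
invoice.ingest(UsageEvent("cust_a", "api_calls", Decimal("1000")))
invoice.ingest(UsageEvent("cust_b", "api_calls", Decimal("1000")))
invoice.ingest(UsageEvent("cust_a", "api_calls", Decimal("500")))
print(invoice.amount_due("cust_a"))  # 3.000
print(invoice.amount_due("cust_b"))  # 1.5000
```

The point of the design is that `amount_due` can be called at any time, which is exactly what a once-a-month batch script cannot offer. A production system would also need deduplication, late-arriving events, and durable storage, none of which this sketch attempts.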

Lex Sokolin:

At a high level, it's super interesting, right? The idea that you have real-time product usage data and then value-accrual, or billing, data that can be matched up. If you look at broad fintech trends, there are lots of examples where unlocking data, like wage data for employees to underwrite credit, or financial data for businesses' treasuries, is a major value driver. Can you make it more concrete for us with an example? Say I'm a product manager, and I'm seeing something in the dashboard about usage and billing.

Do I have my moment, like when you were at Dropbox and thought, if only I could...? You mentioned experiments. Let's anchor on a couple of examples you could walk us through.

Scott Woody:

Yeah. One of the common questions we get is: what's the value of computing this in real time? I'll use a Dropbox example because it's very live, and then an existing customer example. One of the interesting things about a company like Dropbox is that every quarter you're expected to hit certain financial metrics. Because you're a public company, you need to hit certain growth rates for Wall Street to be happy. You see this in sales all the time: let's say a sales team is off target. The last two weeks of the quarter are helter-skelter; you're calling up every single prospect.

You're trying to push those last deals through so you can hit the quarter. The same thing is true for self-serve software businesses like Dropbox. There were certain experiments that we knew would drive short-term revenue. For example, we knew that if we put a credit card gate in front of certain product actions, customers would pay in the short term to access that feature. But that's a pretty hostile customer experience, right? Having to enter a credit card to get access to a feature is a pretty high gate. But if we knew we needed to make revenue in a given quarter, we could throw up that gate and generate a short-term burst of, let's say, a couple million dollars. So there's this idea of deploying an experiment that you know will make money.

You only want to do it if it's the right time in the quarter, when you know your revenue is trending lower than you wanted. Then, to keep Wall Street happy, you deploy these experiments. Having more continuous access to revenue data lets you ask: is it time to pull the lever and actually deploy the experiment we know will make us money? In some sense you want to hold those experiments in reserve, because most of the time you want to be doing a bunch of speculative things to try to generate more revenue. But if it's getting toward the end of the quarter and you know you need to make the quarter, you want to deploy them. Unless you have a real-time view into how your customers are spending money on your platform, you can't make the decision about whether or not to deploy. So at Dropbox, we'd constantly be reading the tea leaves: should we pull that lever or not? The end of the quarter was a very stressful time at that company.

For some of our current customers at Metronome, a real-time view actually offers a lot of value in a couple of different ways.
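The "pull the lever" decision can be sketched as a simple rule over real-time revenue: project the quarter from the run rate so far, and deploy the short-term revenue experiment only when the projection misses the target late in the quarter. This is a hypothetical illustration; the naive linear projection, the two-week window, and the sample figures are assumptions, not Dropbox's or Metronome's actual logic.

```python
from dataclasses import dataclass


@dataclass
class QuarterStatus:
    revenue_to_date: float  # revenue recorded so far this quarter (e.g. $M)
    days_elapsed: int
    days_in_quarter: int
    target: float


def projected_revenue(s: QuarterStatus) -> float:
    """Naive linear run-rate projection to quarter end (an assumption; real forecasts differ)."""
    return s.revenue_to_date / s.days_elapsed * s.days_in_quarter


def should_pull_lever(s: QuarterStatus, late_window_days: int = 14) -> bool:
    """Deploy the short-term revenue experiment only late in the quarter,
    and only if the projection says the target will be missed."""
    days_left = s.days_in_quarter - s.days_elapsed
    shortfall = s.target - projected_revenue(s)
    return days_left <= late_window_days and shortfall > 0


behind = QuarterStatus(revenue_to_date=75.0, days_elapsed=77, days_in_quarter=91, target=92.0)
on_track = QuarterStatus(revenue_to_date=80.0, days_elapsed=77, days_in_quarter=91, target=92.0)
early = QuarterStatus(revenue_to_date=25.0, days_elapsed=30, days_in_quarter=91, target=92.0)
print(should_pull_lever(behind), should_pull_lever(on_track), should_pull_lever(early))
# True False False
```

The rule is only as good as `revenue_to_date`: with billing data that arrives 30 to 90 days after month end, the inputs to this function simply do not exist in time, which is the gap real-time billing closes.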

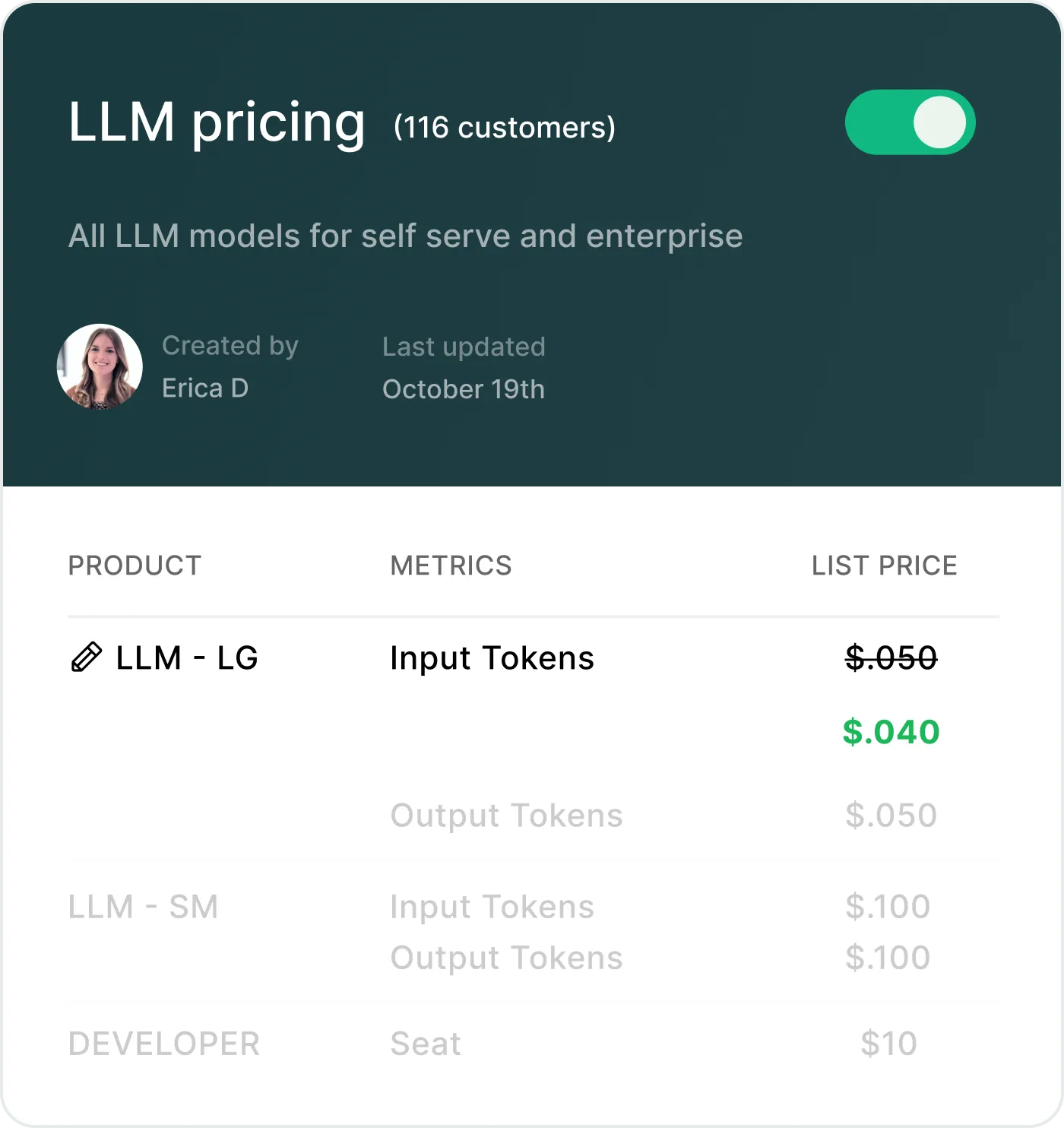

We work with a lot of the LLM providers, and they have a really interesting problem, or opportunity: they have a lot of different models on the market, and they also have huge fleets of GPUs. They need to properly match each LLM to GPUs, essentially assigning models to run on different GPUs, because they want latency to be low when customers request information from that LLM. Now, if you assign the wrong set of GPUs to an LLM, say you overprovision for an LLM that's not getting a lot of traffic, you have misallocated resources. You've given a lot of GPU capacity to an LLM that's not getting any traffic, and you're probably starving a model that does have a lot of demand. That means latency for your customers is going to be higher overall, because you misallocated GPUs across the LLMs.

So some of our customers use our data to understand which models their own customers are actually using in production, and then use that to assign GPUs to different models. That's the kind of thing a more real-time view allows you to do: it lets you properly load balance GPUs against traffic.

Lex Sokolin:

So let me pause on that use case, because it might be my favorite one ever. It's so science-fictiony and future-focused. It's amazing. If I imagine one of the large LLM companies, I don't know exactly what counts as a model in the case you're talking about, but I'd expect them to have anywhere from a dozen to thousands, or even hundreds of thousands, of models. Similarly, on the GPU side, you're dealing with potentially hundreds of thousands of devices, if not more.

So what does that look like on your end if they're trying to play this game of, you know, GPT-4 needs really heavy graphics cards, whereas GPT-3 is cheaper but fewer people are using it, and then there's all the developer stuff? What does that actually look like on the back end for you? And just to reframe the story you've told for the business: rather than a billing platform for the customer, it's a revenue platform for the business.

Scott Woody:

Exactly right. So how does it work? Let's say you're one of these LLM providers. Every time a customer requests some tokens from a given model variant, you send us a fact. It just says something like: customer 123 generated 17 tokens on model ABC. You're doing that across your entire customer base in real time, which means we have a real-time view into exactly which models are getting how much token traffic.
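The "fact" stream can be pictured as small usage events rolled up into per-model, per-minute token counts. A minimal sketch, assuming a hypothetical `TokenEvent` record; model "ABC" and customer "123" come from the example above, while model "XYZ", customer "456", and the timestamps are invented for illustration and do not reflect Metronome's actual event schema.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TokenEvent:
    customer_id: str
    model: str
    tokens: int
    timestamp: datetime


def tokens_per_model_per_minute(events) -> Counter:
    """Bucket token events by (model, minute) to show where traffic is flowing."""
    counts = Counter()
    for e in events:
        minute = e.timestamp.replace(second=0, microsecond=0)
        counts[(e.model, minute)] += e.tokens
    return counts


events = [
    TokenEvent("123", "ABC", 17, datetime(2024, 11, 1, 12, 0, 5)),
    TokenEvent("456", "ABC", 40, datetime(2024, 11, 1, 12, 0, 30)),
    TokenEvent("123", "XYZ", 5, datetime(2024, 11, 1, 12, 1, 10)),
]
counts = tokens_per_model_per_minute(events)
print(counts[("ABC", datetime(2024, 11, 1, 12, 0))])  # 57
```

On its own this is just traffic counting, which, as Scott notes, the model providers could do themselves; the pricing step that follows is what turns it into spend.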

We then give you back a real-time view across your entire customer base of how your customers are using tokens. Now, if that's all we did, the model providers could do this better than we can. The trick, though, is that the economic thing you're trying to do is match traffic to spend. Why? You want to make sure that the places where customers are spending the most money are getting the lowest latencies. So because we have the usage data, we marry it to the pricing data on a per-customer basis. There's a list price, and then customers can have discounts, maybe a credit, all of that. What that gives you is a real-time view of which models are getting traffic and how much money those models are generating in a given minute, a given hour, a given day. So the internal teams look at their usage data, but they also look at where their customers are spending money, and then they make business decisions: okay, it seems like there's been a surge on model variant ABC.
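Matching traffic to spend means pricing each usage record with that customer's own terms before totalling by model. A minimal sketch, assuming hypothetical list prices per 1,000 tokens and percentage discounts per customer; the credits Scott mentions are left out for brevity, and none of the prices or names reflect any real provider.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical list prices per 1,000 tokens, by model.
LIST_PRICE_PER_1K = {"ABC": Decimal("0.50"), "XYZ": Decimal("0.10")}

# Hypothetical negotiated discounts per customer, as a fraction off list price.
DISCOUNTS = {"123": Decimal("0.20")}


def spend_by_model(usage) -> dict:
    """usage: iterable of (customer_id, model, tokens) tuples.
    Returns total spend per model after each customer's discount."""
    totals = defaultdict(Decimal)
    for customer_id, model, tokens in usage:
        list_cost = LIST_PRICE_PER_1K[model] * tokens / 1000
        discount = DISCOUNTS.get(customer_id, Decimal("0"))
        totals[model] += list_cost * (1 - discount)
    return dict(totals)


usage = [("123", "ABC", 10_000), ("456", "ABC", 10_000), ("456", "XYZ", 50_000)]
spend = spend_by_model(usage)
print(spend)
```

Here model ABC comes out at 9 (4 after customer 123's discount, plus 5) and XYZ at 5, so ABC ranks first by spend even though XYZ carries more than twice as many tokens. That divergence between traffic and revenue is why the usage counts alone are not enough.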

Customers are now spending ten times as much money on that variant as on the prior generation, and our GPUs are not allocated optimally. Let's shift GPUs from this low-traffic, low-revenue model onto this higher-revenue model. So they can see the usage and the spend, and slice and dice it by time to see how it's varying. If they wanted to, they could even look at specific large enterprise clients, for instance which models their largest customers are using. Then they can make more sophisticated allocation decisions: okay, last week the trend was all about model ABC, and now it's all about model AB.

And so, let's shift this fleet of GPUs over here. That's the kind of stuff our customers are using our data for. And they're looking at it at a frequency that you might be surprised by: multiple times a week at least.
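The mechanism Scott describes (rating each usage event against a customer's list price, discount, and credit, then rolling the results up by model and time window) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Metronome's implementation or API: the event fields, the per-million-token price unit, the rule that prepaid credit is drawn down before cash is invoiced, and every name (`UsageEvent`, `CustomerPricing`, `rate_events`) are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal


@dataclass(frozen=True)
class UsageEvent:
    # Hypothetical event schema: who used which model, how much, and when.
    customer_id: str
    model: str
    tokens: int
    timestamp: datetime


@dataclass(frozen=True)
class CustomerPricing:
    # Hypothetical per-customer terms: list price per 1M tokens for each model,
    # a negotiated percentage discount, and a prepaid credit balance.
    list_price_per_million: dict
    discount: Decimal = Decimal("0")
    credit: Decimal = Decimal("0")


def rate_events(events, pricing):
    """Price raw usage events and aggregate them two ways.

    Returns (spend per (model, minute), cash invoiced per customer).
    """
    credits_left = {cid: p.credit for cid, p in pricing.items()}
    spend_by_model_minute = defaultdict(Decimal)
    invoiced_by_customer = defaultdict(Decimal)
    for ev in sorted(events, key=lambda e: e.timestamp):
        terms = pricing[ev.customer_id]
        list_amount = terms.list_price_per_million[ev.model] * ev.tokens / Decimal(1_000_000)
        net_amount = list_amount * (1 - terms.discount)
        # Assumption: prepaid credit is drawn down before anything is invoiced as cash.
        applied = min(credits_left[ev.customer_id], net_amount)
        credits_left[ev.customer_id] -= applied
        invoiced_by_customer[ev.customer_id] += net_amount - applied
        # Bucket discounted spend by model and minute: the view used to steer GPU allocation.
        minute = ev.timestamp.replace(second=0, microsecond=0)
        spend_by_model_minute[(ev.model, minute)] += net_amount
    return dict(spend_by_model_minute), dict(invoiced_by_customer)


# Illustrative inputs only.
pricing = {
    "acme": CustomerPricing({"abc": Decimal("10"), "ab": Decimal("2")},
                            discount=Decimal("0.20"), credit=Decimal("5")),
    "globex": CustomerPricing({"abc": Decimal("10")}),
}
events = [
    UsageEvent("acme", "abc", 1_000_000, datetime(2025, 1, 1, 12, 0, 10)),
    UsageEvent("globex", "abc", 500_000, datetime(2025, 1, 1, 12, 0, 40)),
    UsageEvent("acme", "ab", 2_000_000, datetime(2025, 1, 1, 12, 1, 5)),
]
spend, invoiced = rate_events(events, pricing)
# spend: ("abc", 12:00) -> 13, ("ab", 12:01) -> 3.2
# invoiced: acme -> 6.2 (after using its 5 of credit), globex -> 5
```

Keeping spend (what the usage is worth after discounts) separate from cash invoiced (after credits) matters for the allocation use case: a customer drawing down prepaid credit is still driving real demand on a model, even though no new cash shows up on an invoice yet.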

Lex Sokolin:

I would check it right now; I'm really interested in that data. So, that sounds like a pretty bespoke build. It doesn't sound generic, as in: you're any software-as-a-service company, you come here, plug in your billing information and some financial integrations, and we'll tell you how it's going. It sounds like you targeted the AI companies, asked them what they wanted, and then built a wrapper around their specific value chain, which is the GPUs on the manufacturing side and the users on the distribution side. Can you talk about how those relationships came about and how you were able to build the product in that direction?

Scott Woody:

I guess what I would say is that it actually is not really a specific build for them, the kind of general concept of let's take usage data and then marry it to pricing data and then spit it back out to you on a per user per like basically we've basically what we have done is built a generalized platform that allows you to self-serve.

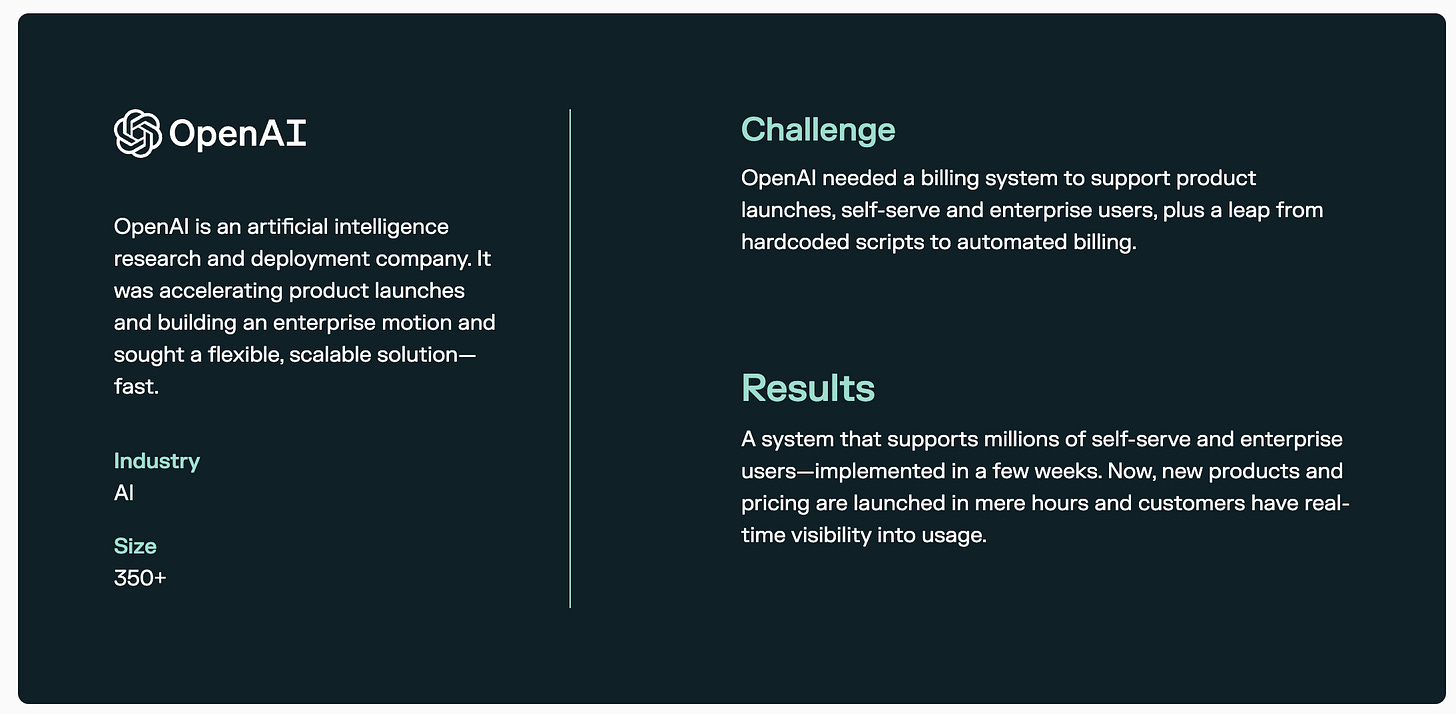

Do that kind of work yourself. And so, we were so like customers use that data. They have different flavors of it. So, like the LLM providers obviously they're using a very specific version of they're setting up Metronome in a very specific way in order to make that work. But we also have that same broad concept used by companies that do database as a service and also other fintech companies. So, it really is like we've built Metronome to be a general-purpose tool that can be used in a lot of different ways, and it just happens to be that this is one of the ways that you can use it. To answer your question about how we started working with the loan providers, I mean, you know, I think we started we've been focused on usage billing for over five years. At this point. I can tell you when we raised seed capital, it was actually really hard to raise money because most VCs were like, usage billing is not a consumption billing usage billing is not a real industry.

There were very few companies successfully doing it. At the time, it was Twilio, New Relic, Snowflake, and AWS of course, but they were the only ones, and AI wasn't really even a thing. Then, about two years in, we met this company called OpenAI. It was an interesting research lab; it wasn't that no one had heard of them, but they just weren't what they are today. We started working with them on a very specific, hard problem: accurately tracking usage in real time, marrying it to pricing data, and generating a real-time view of revenue. That was a really big pain point for them, and there was no solution on the market. So we got in very early with that company. And it turns out that what we saw very early with OpenAI was the precursor to what's happening to a huge percentage of companies in the Valley right now: when you adopt AI, when you really go all in on it, your business by nature becomes much more usage-centric.

The reason for that is that AI is a very expensive product. There are a lot of costs in training models, but there are also a lot of costs in querying them. And because of that very cost-intensive nature, and because the value of AI lies in how frequently you interact with it, usage becomes the natural thing to charge for. What we saw in the early days with the LLM providers was a glimpse into how almost every business is starting to operate, which is that they're adopting AI at their core. And because they're adopting AI, the core value they're providing back to their customers is this concept of an intelligence that's doing work on your behalf. We were helping answer: how do you monetize an intelligence? And it turns out the way you would monetize an intelligence is actually quite similar to the way you might monetize a human intelligence, which is that you pay them a salary.

Now, because AIs are replicable and can spread across many machines, you don't want to pay them a salary; that doesn't really make a lot of sense. What you do pay for is the work they're doing on your behalf, and paying for the work done on your behalf is a consumption or usage-based model. So what we got a glimpse of really early with the LLM providers was a future where, if you take this AI stuff really seriously, your business is forced to become more of a usage-based or consumption-based business. We just happened to get in very early with them and to have a product that solved a very unique pain point: doing usage-based consumption billing is really freaking hard, and doing it in a way that's 100% accurate and auditable is even harder. So that's the story of how we got started with those early guys, and obviously we've been able to parlay that into lots of growth across the entire industry.

Lex Sokolin:

That's fascinating. I want to get your intuition even more around this idea of AI as labor. One question that comes to mind is whether AI is scarce or not. When you're a consumer of a ChatGPT that has been fine-tuned in some way, or that has a special prompt, you feel like you're interacting with a very specific agent. But when you're OpenAI on the other side, you're just serving ChatGPT with lots of little wrappers, and at the end of the day it's just this one big model. So my question to you, partly to understand the economic flows, is: should we as people relate to each instance or each packaging of AI as an individual, in a sense? This is my marketing hire; they just happen to be a robot. That's my accounting hire; they just happen to be a robot.

Or is there some giant, unknowable math soup that has been created, which can scale to a billion productive identities and is still just one thing? Since you've been closer to the productivity of these things, what's your intuition?

Scott Woody:

My intuition in the short term is that there is a pretty high return on specialized versions of these intelligences. I think what you're seeing in the market is that the more specialized ones, either fine-tuned or, more commonly, base models with quite significant infrastructure built on top of them, are the ones taking off and growing really fast. Think of something like Decagon. That's really domain-specific. So the way I've thought about it is that, at least in the next couple of years, you're not going to buy some super employee that does everything. You're going to buy a purpose-built agent.

The reason I think that's going to happen is, yes, there's a lot of local context, but in reality, you're going to buy a specialized agent because the labor it's replacing is specialized labor that has specialized tooling and specialized interfaces. So even if the model is exactly the same behind the scenes, what you're really paying for is the integrations, and the places where it plugs into your organization look totally different. The customer support agent is going to need to live on your homepage or in your app, and it's going to need to talk to your ticketing solution, whatever that is, because at some point its work is going to be handed to a human, at least in the short term. So the value of the specialized agent, in some sense, is this: yes, it may have special logic that's different from the base GPT model or whatever, but in reality the integrations are going to look completely different, and the value you provide comes through how information flows through those integrations.

So in the short term, I really do think it's going to look like specialized models. Over time, you start to rework the entire tool chain from top to bottom, and then maybe it can generalize, and you don't even have a ticketing solution anymore; the AI is the ticketing solution and just does all that work for you. But at least in business in today's terms, you're still going to have your Salesforce subscription, you're still going to have your Zendesk subscription, and your AI is going to interact with those things. So I do think specialization is the short-term play. Over time, I think it really converges to: do you believe in the general part of AGI? And I'm not sure. At the root, I do think there's a really strong return to making the core intelligence better.

And I suspect what will happen is you'll have these specialized flavors that just feel different, because the toolchains they interact with and the types of interactions they have with customers look so different. A sales agent is going to do totally different things than a customer support agent. Maybe if you dig deep enough, it's OpenAI or Anthropic behind the scenes, but the value to me as a business is really in all the interaction layers, and that's a lot of hard, grinding work that companies like Decagon are putting in today.

Lex Sokolin:

Yeah. If I were to be cute about this, I would say that Anthropic and OpenAI are kind of the myosin motor protein you were studying at Stanford, right? The core production of energy from calories, or whichever chemical process it works on. And then you still need to assemble the tissues and the organs and the bodies, the life cycle, the society, the relationships, and so on, of how all this stuff exists.

So there's plenty of space for specialization, even though everything's running on the burning of some particular protein to release that energy. That's just...

Scott Woody:

Agreed. Yes, I do think the core concept holds: there are these base models doing a lot of the heavy lifting, and then there are, you can denigrate them and call them wrappers. But someone once said that everything's a wrapper on top of Postgres, and it's kind of true. That doesn't mean all the value in software accrues to Postgres; in fact, far from it. Thinking that these models will just solve all these problems by themselves seems at least a couple of years away, if not much, much longer. What I can say is that in business, especially as long as humans are buying software, there really is a strong return on building a highly specific, really great interface to the agent, and I do think there's a lot of room to run in that domain right now.

Lex Sokolin:

I'm interested in two things in terms of that specialization. I don't know how much you can share, so share whatever you can. I'm interested specifically in what kinds of integrations the AI-first players are doing in order to measure their economic output and balance the equation for something being a financially productive agent. As broad context, Anthropic has proportionally a lot more revenue on the developer side, whereas OpenAI is more consumer, right? So they must have different stacks that they're plugging in. And then there are these races to deploy new types of models, whether it's upgrading text, adding reasoning flows, or images and video and then sound and music and so on. Given your position as a kind of payment processor for this AI economy, where are you seeing notable growth that might be fresh or surprising to you? What are the trends in terms of what's actually working?

Scott Woody:

I think a lot of people expected the bubble would have popped by now. Last year and this year there's been a narrative that AI is overhyped, that these wrapper apps are going to collapse over time, and that you're going to see a culling. And I think what you're seeing is that there is a culling; it's just that real value is being created in the places where there is value. Again, the Decagons of the world, the LangChains of the world: there is real value getting created. So the thing that was most surprising to me is this: I thought that if this is a bubble, it would look like the entire ecosystem taking a hit, and it would feel like a winter. Obviously that may still come to pass, but what I am seeing is pretty significant replatforming of very major players onto AI, adopting it in a very full-throttled way.

Look at Salesforce going up at Dreamforce and committing to agents. I know that internally they are committed there. So I think the most surprising thing is just how much these large tech companies are really embracing AI in a way that does not feel like a bubble. Again, maybe it's a bubble in some economic-value terms; maybe VCs aren't going to make any money. But I do think these companies are rewriting their entire DNA from the ground up to accommodate AI. The other thing I would say, in terms of where economic value is really getting created, is that these really specialized AIs focused on a specific vertical are, in a lot of cases, measuring value by doing a run-alongside with the existing human labor. For example, I have a small investment in a company that does essentially loan recapturing for auto loans.

So, what does that mean? When you take out an auto loan, you have to pay your monthly bill. And there's a service where, if you forgot to pay or don't have the money to pay, they'll call you, text you, send you threatening letters, etc. Typically, that's outsourced to labor in some part of the world that's very cheap, and they'll call you and leave you messages. What this company does is build an AI agent that literally just calls you at certain times, talks to you, and helps you pay the bill over the phone. And the way that company proves value is a run-alongside: they'll have their AI agent call 500 customers and have the existing outsourced labor call another 500.

What they can then show mathematically is that the AI is about 20% more efficient at getting money back, and it's also orders of magnitude cheaper. So the way they prove value is simply a run-alongside test against the human labor, saying, look, it's freaking obvious. This thing never complains, never quits on you, and never fails to show up for work. And by the way, it's about 20% more efficient at recovering money. It's an economic no-brainer: you save money and you get higher loan repayment. That's the kind of thing I'm seeing with a lot of these specialized AI agents. And since we do billing for these kinds of services, the interesting thing for me to think about is how that company establishes trust with its customer that it's actually proving this value.
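The run-alongside test reduces to simple arithmetic over two cohorts. The sketch below assumes each cohort is summarized by the amount owed, the amount recovered, and the cost of running the outreach; the `Cohort` class and `relative_lift` function are hypothetical, and the figures are made up purely to mirror the roughly 20% improvement Scott mentions. They are not data from the company he describes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cohort:
    # Hypothetical summary of one arm of a run-alongside test.
    name: str
    accounts_called: int
    amount_owed: float
    amount_recovered: float
    operating_cost: float

    @property
    def recovery_rate(self) -> float:
        # Normalize by the amount owed so cohorts with different balances compare fairly.
        return self.amount_recovered / self.amount_owed

    @property
    def cost_per_dollar_recovered(self) -> float:
        return self.operating_cost / self.amount_recovered


def relative_lift(treatment: Cohort, control: Cohort) -> float:
    """Relative improvement in recovery rate of treatment over control (0.2 == 20%)."""
    return treatment.recovery_rate / control.recovery_rate - 1


# Made-up figures for illustration only.
human = Cohort("outsourced agents", 500, 500_000.0, 100_000.0, 50_000.0)
ai = Cohort("AI agent", 500, 500_000.0, 120_000.0, 500.0)

print(f"lift in recovery rate: {relative_lift(ai, human):.0%}")
print(f"cost per $ recovered: human {human.cost_per_dollar_recovered:.3f}, "
      f"AI {ai.cost_per_dollar_recovered:.3f}")
```

Comparing recovery rates rather than raw dollars keeps the test fair if the two groups of 500 happen to owe different totals. A real POC would also need the groups to be randomly assigned and the gap checked for statistical significance, which this sketch leaves out.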

In the case of that loan remittance company, they're essentially running a little case study over the course of a month and then showing the data back to the customer, and the customer is like, okay, this is obvious, we're just going to adopt. That's the kind of thing I see happening really, really quickly across the industry. It's not super visible, right? Because it's not a self-serve flow; it's a quite involved POC process. But the results are so lopsided that I think that industry is just going to turn on its head in quarters, whereas otherwise I would have expected the transition to take years. So the dynamic I would forecast, if I were to make a prediction, is that certain industries are going to look the way they've looked for the past ten years, and then within a year they will look completely different.

They will tip incredibly fast because the ROI story is so absurdly clear. But it will happen through these point deployments that do a run-alongside POC type of thing, and then suddenly, in six months, the entire industry will turn over. This also gets into one interesting thing I've observed, having studied market niches for a long time: there's a game theory for players inside a submarket. Let's pretend you're ticketing software. Zendesk and Intercom come along and say, we're going to move from a seat-based model to an outcome-based model. Suddenly, every single player in that industry has a six-month window to adopt the new pricing model or die. What you saw is that these companies announced in August of this year that they were moving to this model in September, and suddenly every single player in that market has been forced to adopt a usage-based, or essentially work-based, model within months.

So these market dynamics are playing out at a speed that is honestly flabbergasting to me. Depending on what industry you're in, you should really study your competitive landscape. If one of your competitors is doing something really novel with pricing and packaging, especially with AI, and it works, you should expect your entire industry to be rewritten over the course of quarters. The speed of these transitions is honestly quite alarming, in a positive way, but very surprising. So I would look for very fast-changing dynamics over the next couple of years.
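To make the seat-based versus outcome-based distinction concrete, here is a minimal sketch of how each model turns a month of activity into a bill, plus the volume at which the two cross over. The function names and prices are hypothetical, and "resolved tickets" stands in for whatever unit of work a given vendor actually charges for.

```python
def seat_based_bill(seats: int, price_per_seat: float) -> float:
    """Monthly bill that scales with the number of human seats licensed."""
    return seats * price_per_seat


def outcome_based_bill(resolved_tickets: int, price_per_resolution: float) -> float:
    """Monthly bill that scales with work completed, e.g. tickets an agent resolves."""
    return resolved_tickets * price_per_resolution


def break_even_resolutions(seats: int, price_per_seat: float,
                           price_per_resolution: float) -> float:
    """Monthly resolutions at which the two models produce the same bill."""
    return seat_based_bill(seats, price_per_seat) / price_per_resolution


# Hypothetical prices, chosen only to keep the arithmetic easy to follow.
print(seat_based_bill(20, 100.0))              # 2000.0
print(outcome_based_bill(1_500, 1.0))          # 1500.0
print(break_even_resolutions(20, 100.0, 1.0))  # 2000.0
```

Under seat pricing the bill depends only on headcount; under outcome pricing it moves with the work the agent completes, which is the shift Scott describes rippling through the ticketing market.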

Lex Sokolin:

That's the certainty: fast-changing dynamics. I'm absolutely with you. Well, thank you so much for a fascinating look into how you built the company and what the future looks like, though I think even understanding what the present looks like will be quite mind-blowing for many people. If our audience wants to learn more about you, Scott, or about Metronome, where should they go?

Scott Woody:

They should go to Metronome.com. We also have a LinkedIn presence, so go follow us there and send me a DM. I'm happy to talk, especially if you're looking for a revenue platform that's going to help you grow your business faster. Or if you're interested in working on anything related to Metronome, please come hit me up there.

Lex Sokolin:

Fantastic. Thank you so much for joining me today.

Scott Woody:

Awesome. Thank you.

Postscript

Sponsor the Fintech Blueprint and reach over 200,000 professionals.

👉 Reach out here. Read our Disclaimer here: this newsletter does not provide investment advice.

For access to all our premium content and archives, consider supporting us with a subscription.
