Hi Fintech Architects,

Welcome back to our podcast series! For those that want to subscribe in your app of choice, you can now find us at Apple, Spotify, or on RSS.

In this conversation, we chat with Richard Ma - CEO and co-founder of Quantstamp, a leading blockchain security company. Richard has over 10 years of experience in the financial industry, including roles as a quantitative strategist, derivatives trader, and portfolio manager.

Ma graduated from Cornell University with a degree in Electrical Engineering and Computer Science. Richard was first introduced to blockchain technology while working as a trader at Archelon Group, and soon recognized the potential for using blockchain to create a more secure and efficient financial system. In 2015, he joined Tower Research Capital as a senior quantitative strategist. Two years later, he left Tower to launch Quantstamp with co-founder Steven Stewart.

Under Ma’s leadership, Quantstamp has grown into a leading provider of security audits for blockchain protocols and smart contracts. The company’s mission is to secure the decentralized internet of value, and it has protected $100B in digital asset risk from hackers. More than 300 startups, foundations, and enterprises work with Quantstamp to keep their innovative products safe.

Richard Ma has a BS from Cornell University in Electrical and Computer Engineering. Richard has also attended the Stanford Business Leadership Series and the Stanford Executive Program for Growing Companies.

Topics: blockchain, security, audit, hacking, hacks, crypto, cryptocurrency, digital assets, Web3, DeFi

Tags: Quantstamp, Ethereum, MakerDAO, Compound, EigenLayer

Timestamp

1’30: From High-Frequency Trading to Fintech Innovator: The Journey to Creating Quantstamp

9’26: Demystifying the Audit Process: Inside Quantstamp's Approach to Securing Blockchain Projects

15’04: The Art and Challenge of Blockchain Audits: Navigating the Complex World of Code Review

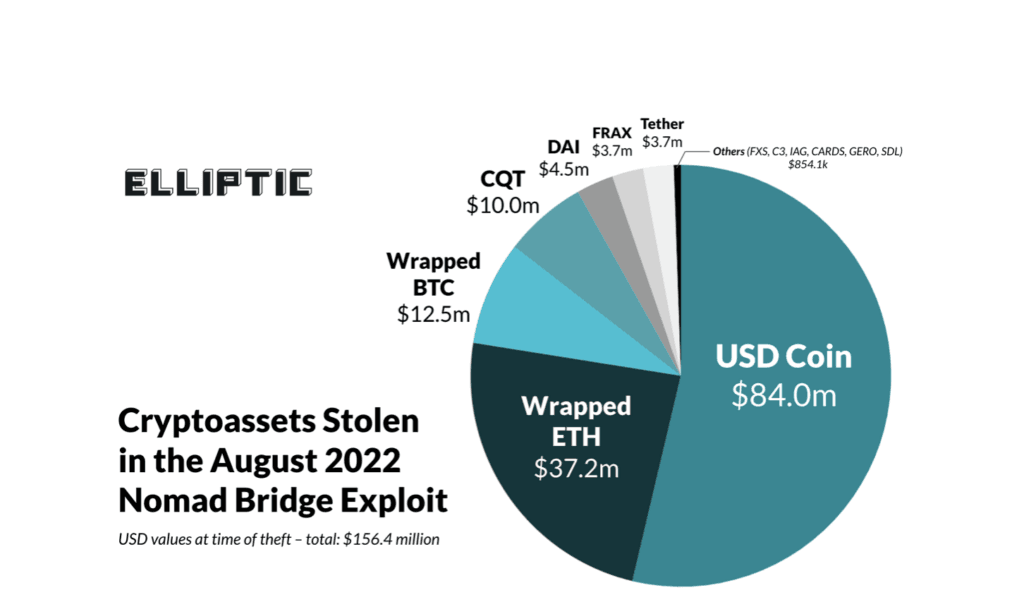

19’37: The Nomad Hack: A Case Study in Preventability and the Importance of Diligence in Code Auditing

24’39: Web3's Adversarial Landscape: Unpacking the Motivations Behind Crypto Hacks and Security Breaches

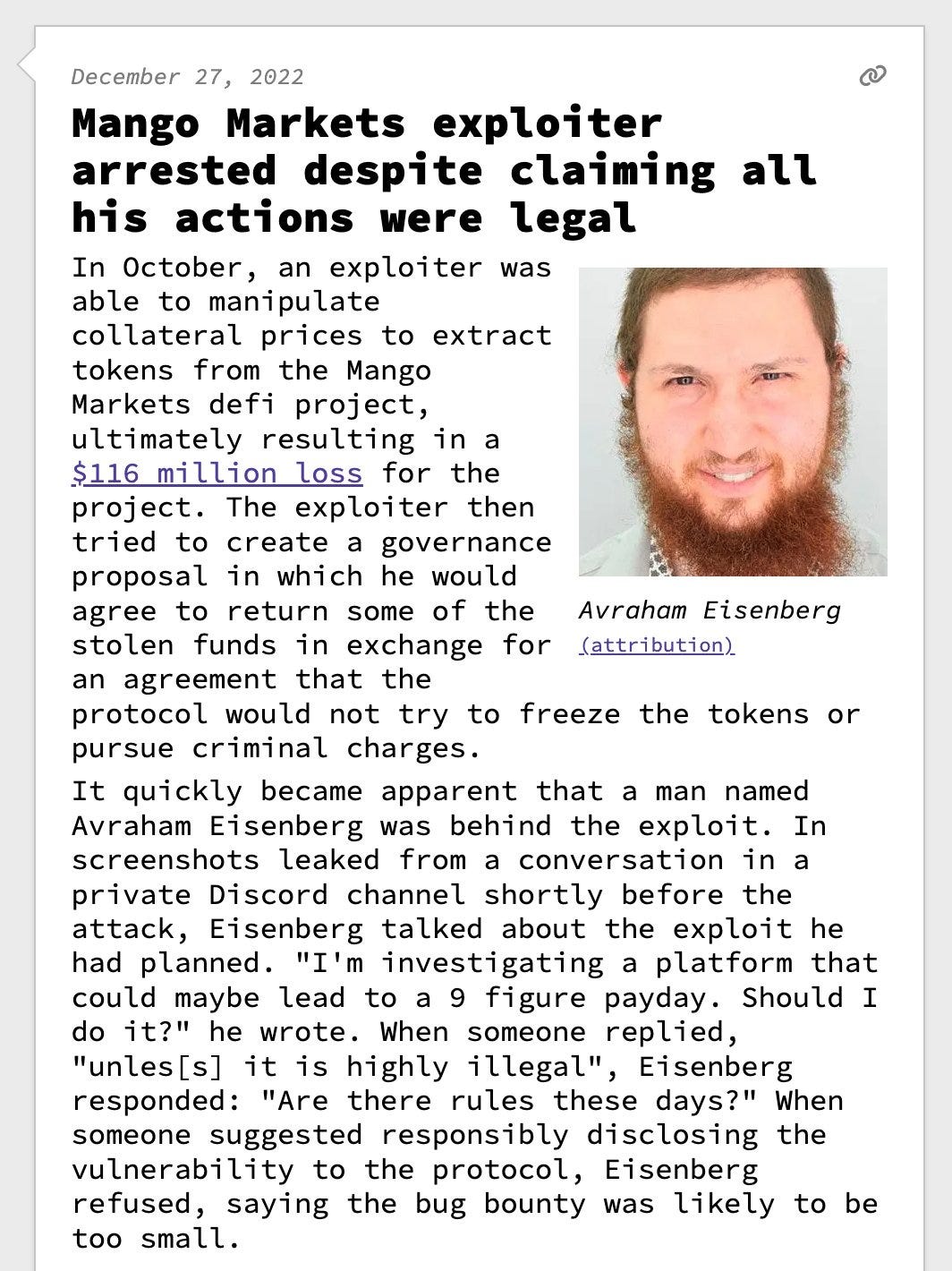

29’13: The Ethical Dilemma of Crypto Hacking: Navigating the Fine Line Between Exploitation and Market Manipulation

34’59: Exploring the Frontier: Key Innovations Shaping the Future of Blockchain in 2024 and Beyond

36’34: The channels used to connect with Richard & learn more about Quantstamp

Illustrated Transcript

Lex Sokolin:

Hi everybody, and welcome to today's conversation. I'm thrilled to have with us today, Richard Ma, who is the CEO at Quantstamp. Quantstamp is a fantastic firm, super interesting, one of the first blockchain audits and security companies in the space that has been doing great work across Web3 now for many years. And that of course gives Richard a really interesting seat to the ecosystem and everything that's been going on there. With that, Richard, welcome to the conversation.

Richard Ma:

Yeah, really appreciate you having me on here.

Lex Sokolin:

How did you end up creating Quantstamp? Where did the idea come from, and can you talk a little bit about what it felt like? What was the environment, what were the trends, and how were you thinking about the moment?

Richard Ma:

Basically, I was a high frequency trader. I had invested in Ethereum quite early on in 2016. I invested in the DAO, which was the first big project on Ethereum. About two or three weeks after investing in the DAO, it was actually hacked for over $50 million. That gave me the reason to start Quantstamp, because during the hack it was very chaotic. I started to really dig into the security side for Ethereum.

Lex Sokolin:

What does it mean when you say it was hacked? What actually happened with the DAO and its smart contracts?

Richard Ma:

Yeah, so basically in the DAO's smart contracts there was a bug, it was called a reentrancy bug, and it basically allowed the attacker to withdraw more money than they were putting in, and it's really due to how the smart contract code was ordered. So, because of this technicality and the fact that all the money in Ethereum is just in these publicly accessible contracts, the hacker was able to basically take advantage of this bug and steal the ether. So that's sort of the summary of it.
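To make the "ordering" point concrete, here is a minimal sketch of a reentrancy bug. It is a Python simulation, not the DAO's actual Solidity code, and every name in it (`VulnerableVault`, `SafeVault`, `attack`, `mallory`) is hypothetical. The assumption is simply that a contract hands control to the recipient's code while paying out, and that the recipient can call back in before the balance is updated.

```python
class VulnerableVault:
    """Toy vault that pays out BEFORE updating its books (the risky ordering)."""

    def __init__(self):
        self.balances = {}  # depositor -> credited amount
        self.pool = 0       # total funds actually held

    def deposit(self, who, amount):
        self.balances[who] = self.balances.get(who, 0) + amount
        self.pool += amount

    def withdraw(self, who, on_receive):
        amount = self.balances.get(who, 0)
        if amount == 0 or self.pool < amount:
            return
        self.pool -= amount     # 1. funds leave the vault
        on_receive(amount)      # 2. the recipient's code runs here
        self.balances[who] = 0  # 3. only now is the balance zeroed


class SafeVault(VulnerableVault):
    """Same vault, but state is updated before the external call."""

    def withdraw(self, who, on_receive):
        amount = self.balances.get(who, 0)
        if amount == 0 or self.pool < amount:
            return
        self.balances[who] = 0  # update the books first
        self.pool -= amount
        on_receive(amount)      # a re-entrant call now sees a zero balance


def attack(vault, attacker="mallory", stake=10):
    """Deposit a small stake, then re-enter withdraw() from the payout callback."""
    vault.deposit(attacker, stake)
    stolen = []

    def on_receive(amount):
        stolen.append(amount)
        vault.withdraw(attacker, on_receive)  # call back in mid-withdrawal

    vault.withdraw(attacker, on_receive)
    return sum(stolen)
```

In this toy model, an attacker who deposits 10 into a vulnerable vault that also holds 90 from another user can keep re-entering until the pool is empty, while the same attack against `SafeVault` only returns the attacker's own 10. The only difference between the two classes is the order of three lines.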

Lex Sokolin:

Yeah, so I'm going to go straight for the jugular off the bat. And I remember that moment and it led to the Ethereum fork, Ethereum Classic and the Ethereum that we have today, and was really an existential moment. And one of the things that people kept saying was, "Code is law." So, whatever the code executes is kind of holy, sacred, and should be respected, and forking the chain to remove that bug is sacrilege and should not be done, because it doesn't follow the principles of immutable financial services. Are bugs in the code law as well? Is it special and sacred, and is it a contract if somebody just writes spaghetti code that then gets manipulated?

Richard Ma:

Yeah, I would say code is not sacred in any way. People make mistakes all the time. Typically for normal software, in every 100 lines of code, there's usually a bug, and it's just very natural when you build software, that people make mistakes. So I don't think that this code is special in any way. In the same way that maybe, for example on your iPhone or your computer, you have automatic updates all the time. And actually, a lot of those updates, they're security updates, they're basically patching up code. And amongst engineers, there's the saying that basically a lot of software is literally just patches all the way down, and that's no different with even if you have smart contracts.

And it really started with the very first one, because the interesting thing about the original DAO hack is that we now know that the original hacker, his name is Toby Hoenisch, he was the CEO of TenX, which was one of the first ICOs. The interesting thing was that during that DAO hack, he actually went into the Slack channel at the time and was asking about this vulnerability. And I think partly due to people's responses to him, he wanted to basically carry it out. So, it's kind of like, even starting from this very first one, people had more, I guess, idealistic views on what it could be, compared to the fact that just whenever you build something new, there could still be bugs. And that's still true today.

Lex Sokolin:

Got it. Okay. So this was the impetus for you to try to create some sort of answer, some sort of solution. How did you narrow down on the idea, coming in from the trading space? How did you figure out the right projects to address this vulnerability?

Richard Ma:

My own background is that I was a quant, so I was a quant trader doing high-frequency trading. Every day in high-frequency trading, you're basically making hundreds of millions of dollars in trades with software. And a really interesting thing is that when I was a trader, we basically managed to never have any incidents where we had a bug that would somehow cause us to lose a lot of money. And a lot of it came down to having very extreme software testing methods, making sure, basically battle-testing all the software in a lot of different ways before putting it into production. So, when I started the company, I just gathered a lot of really smart friends and tried to apply those same software testing techniques to Ethereum smart contracts. Luckily when we started the company, all the smart contracts, they were really simple. So, we could basically apply these techniques in a very exhaustive way, and really make sure that these projects were safe. And over time these projects became more complicated, and we had time to adapt to that.

Lex Sokolin:

Gotcha. And so what were the early days of the company? Did you land on a services proposition, and who are some of the early folks that you worked with?

Richard Ma:

So early on, our very first thought was to maybe make a software online where you could charge $20 per automated audit. And what became apparent was that the projects, early on, it would be a couple of guys working on a brand-new idea. And they would have a white paper and some rough draft of what they wanted to build. And for these early projects, what I realized is that they basically wanted to talk to us as people, instead of just run our security software. Because it's their first time building a project, they had lots of questions, they had lots of design considerations that they wanted to discuss with an expert. So early on, what we did was we actually just essentially gave a lot of free advice to folks.

And then also, what we did was a lot of the projects, they weren't ready for production yet. So, I basically just invested as an angel investor into these projects and tried to offer some good advice. And then over time, some of our very first customers like Request Network and MakerDAO, and some of these projects, they started to be really well-known names and that just sort of naturally grew from there. So, at one point, MakerDAO, it was more than 50% of all the value that was in DeFi. And so, we did a lot of audits for them and sort of grew alongside them into the really broad current phase of the market.

Lex Sokolin:

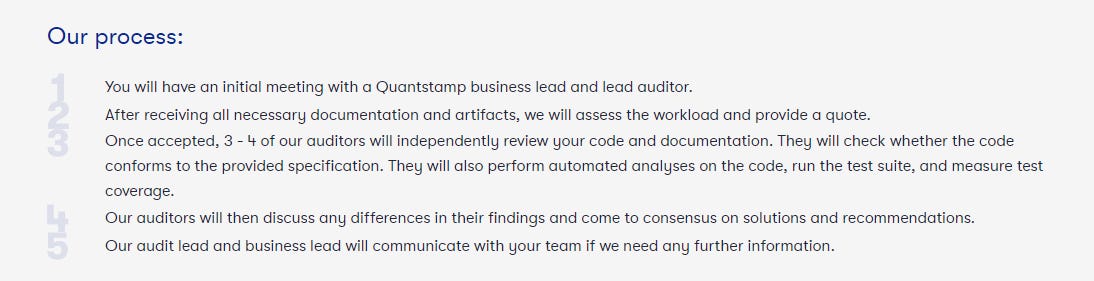

Maybe if you could flesh out what an audit is and what the product is. I think it's interesting you talk about, they just wanted to talk to a person and have a technical counterpart, and I think that's super important. But when you think about the packaging of the product, the audit product, what does that look like, and what does the project get at the end of it? And maybe we can go from there.

Richard Ma:

So today for audits, there's three main parts. So, the first part is basically the project they share with us, their GitHub, so they share with us the code they have, and we basically have a team of experts, usually three people go through the code line by line and look for vulnerabilities. So that's the first part. And then basically from the start of the project, we continuously talk with the project. Usually, we find different types of ways to hack the project, so we'll try to explain to them, here's how we would do it. And through talking to the project back and forth, usually we'll actually end up uncovering even more stuff. And also, the project, it gives them a time to understand these attack vectors.

Because for the engineers of the project, usually when they build it, they're mostly focused on the features. For example, if they're building a new decentralized perpetual exchange, the team is mostly focused on building out all the features, making it really user-friendly. And then when they bring it to us, we're looking at the security. So, we might bring a lot of considerations that they haven't thought about. And so, through this process of talking, we usually help them to uncover more stuff and then get them to think a bit more completely about the security side of the project. And then the third part is, so at the end we usually will have a final report, and for the typical project, we'll end up with anywhere from five to 20 security findings, and then they'll basically go and fix it. And then at the end we'll publish a public report, and then they'll basically at that point have fixed everything. So that's usually the process for an audit.

Lex Sokolin:

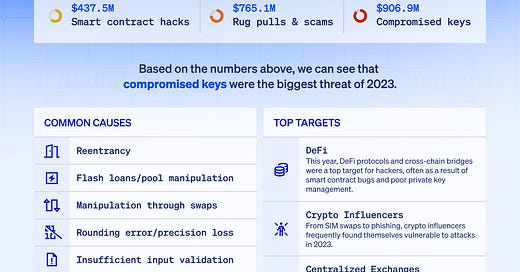

Two directions I want to go. The first is to ask, what are the things that people usually make mistakes on? Is it a series of patterns that's similar in different projects? Like the more of these that you do, the more you realize, oh, everyone's got re-entrancy bugs, or some sort of flash loan attack vulnerability. Is there five most likely to break things, or is every project different? So maybe we start with that.

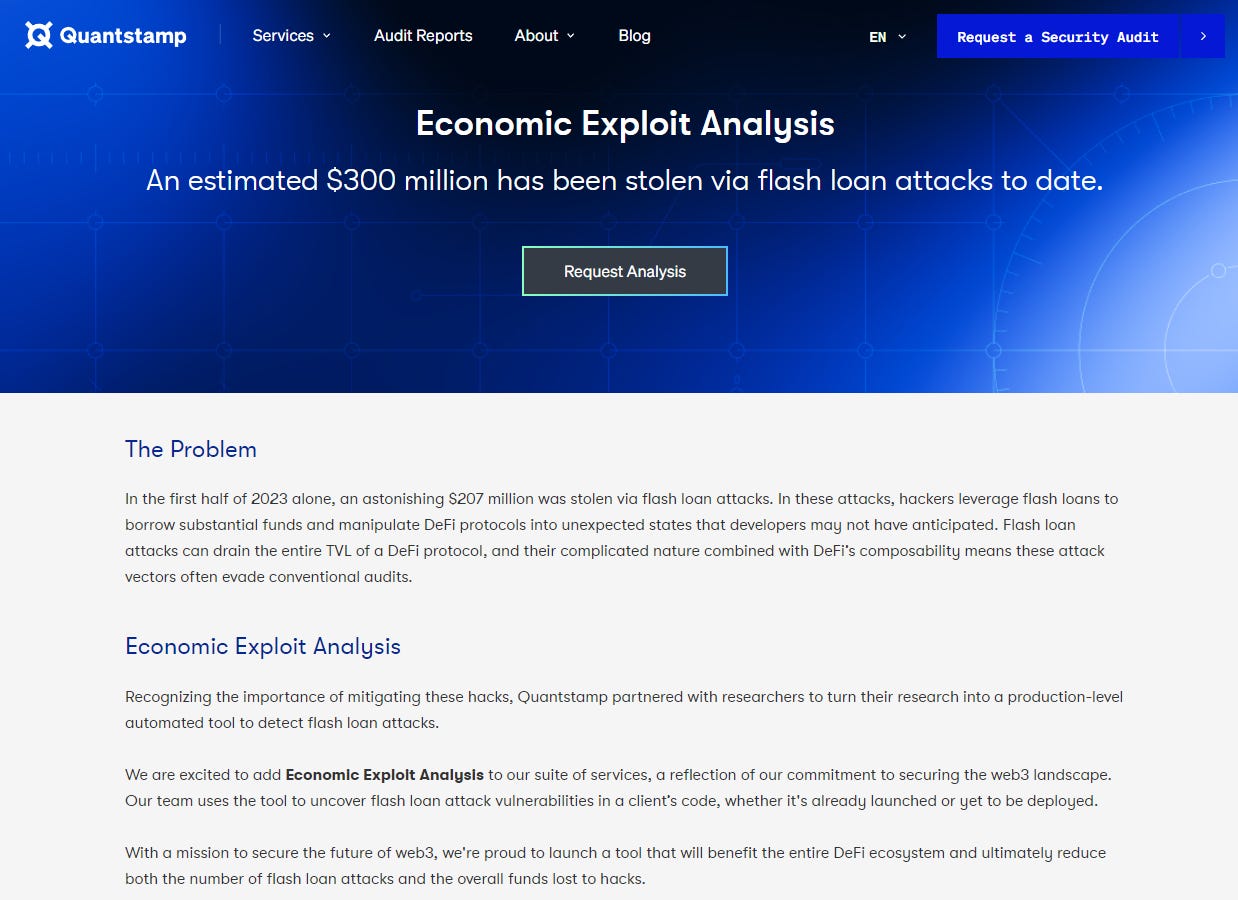

Richard Ma:

I would say in each category of project, there's usually a very common bug. So, for example, in DeFi protocols involving lending, there's often ways to basically create debts in the DeFi protocol where the amount that you put in, it's less than the debt that you take out. And these are usually done with flash loans to basically create a temporary imbalance in the protocol's accounting, and they tend to lead to insolvency events in these lending protocols. So usually for a specific type of project, we'll go in, and we'll already know, these are the most common ways where you could hack that project. And we'll take our experience from auditing similar projects and apply those things and basically try to battle test it with that project. It's very similar with bridges. So as many people know, there have been a lot of bridge hacks. The reason is that bridges tend to have assets on one protocol, and they have liabilities on a different protocol where you have these receipts that represent deposits on a different chain.

And so, there's all these different types of vulnerabilities because of mismatches in the accounting for proving that you have a certain number of deposits on a different chain. So usually, we look for those things first, where it's common errors, and then the bulk of vulnerabilities we usually find is actually in design. So, it's in how people wanted to design something versus what they specifically wrote. These take all sorts of different shapes and sizes because every project, they have very different designs. But for those, we usually try to get as much documentation as possible, talk to the team a lot, and understand what they were trying to do, and then see if the code is actually doing what they're trying to do. So that's still, I would say, the bulk of the findings we have.
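The bridge accounting mismatch described above can be sketched as a simple invariant: receipts minted on the destination chain should never exceed assets locked on the source chain. The following is a toy Python model under that assumption. `ToyBridge`, its fields, and the `verify` flag are all hypothetical, and the "proof" is just a tuple standing in for a real cross-chain proof.

```python
from dataclasses import dataclass, field


@dataclass
class ToyBridge:
    locked: int = 0   # assets held on the source chain
    minted: int = 0   # receipts issued on the destination chain
    claimed: set = field(default_factory=set)  # deposit ids already redeemed

    def lock(self, deposit_id, amount):
        """Lock assets on the source chain and return a stand-in proof."""
        self.locked += amount
        return (deposit_id, amount)

    def mint(self, proof, verify=True):
        """Mint receipts for a proof; verify=False models a broken check."""
        deposit_id, amount = proof
        if verify and deposit_id in self.claimed:
            raise ValueError("deposit already claimed")
        self.claimed.add(deposit_id)
        self.minted += amount

    def is_solvent(self):
        """The core accounting invariant: liabilities never exceed assets."""
        return self.minted <= self.locked


bridge = ToyBridge()
proof = bridge.lock("deposit-1", 100)
bridge.mint(proof)                # legitimate mint: 100 locked, 100 minted
bridge.mint(proof, verify=False)  # replayed proof slips past a broken check
print(bridge.is_solvent())        # the invariant no longer holds
```

Checking an invariant like `is_solvent()` in tests, under many sequences of calls, is one way to look for this class of mismatch; it does not cover design-level bugs where the invariant itself is wrong.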

Lex Sokolin:

Reading someone else's code seems like the hardest thing ever. I know when often developers build stuff... Let's say you have a project and you've got a tech team, and they spent a year and a half building things, and then you lose the CTO or something and a new CTO comes in, and often it's like, "Oh my goodness, I can't, I don't understand how any of this is structured. Let's rewrite everything from scratch on a different infrastructure," or whatever. And it seems like you have to be doing the hard thing, which is really understanding how the developers of some projects are structuring an idea, and you can't change it. It seems difficult and messy.

Richard Ma:

Yeah, it is very difficult. It's actually extremely dangerous and kind of a job with a very high learning curve that doesn't stop. So just to your point, most teams that get an audit, they would have worked on it already for anywhere from three months to a year and a half before they get the audit. So, the project, the team that worked on it, they're the ultimate experts. And when we come in, we have to find bugs that they missed and basically repeatably do this. And so, it's very hard, because first when we come in, we need to learn how the project works, and then we only have a certain amount of time to find it. We don't have the luxury of working on it for six months.

So, this is probably the hardest part of being an auditor. And the thing that I think gets us better at it is that we're constantly doing it. So, every year, Quantstamp does about 250 audits. Most of the people at Quantstamp, they're basically veteran auditors. So many of the people that are helping with audits, they've worked on it for anywhere from three to six years. So, it's possible to get better at it, but because also in Web3 people are inventing new stuff all the time, and sometimes inventing new languages and new paradigms, we just basically have to constantly keep learning. So yeah, as you said, it's basically the hardest part of doing the auditor's job.

Lex Sokolin:

So 250 per year is quite a lot, and often the better your brand gets at audit, the more retail consumers or users of projects think that the brand is insurance. A new project launches, you don't know anything about it, it's just some black box called Blast that you send money into that disappears. If it says, "Audited by" and it's got Quantstamp or it's got Consensys Diligence or another well-known player in the space, people feel comfortable to hand over money and put it into the black box and hope nothing on the other side goes wrong. What do you think about this dynamic of treating code auditors as almost insurance providers? Is that the right, the wrong way to go about it, and how would you advise people?

Richard Ma:

Yeah, I would say actually the number one thing is to literally open the report and read it. And this is something I actually don't see a lot of people doing. So, because for Quantstamp reports, we try to write it so that it's just readable by a non-technical person, especially the first page. So, when teams call us to get an audit, we can't change exactly what they're going to do after the audit. Some teams, they'll actually choose to not change anything, even if we find stuff. And so, the thing that we can do is basically we write it into the report. So, if we feel it's pretty risky, we'll actually just write it in print and we'll say this is really risky, it's not really ready for production. It needs way more testing based on the number of bugs we found; we think there could be more.

We'll write it there. And the number one thing I think is just if you're going to deposit your hard-earned money into something, definitely take the five minutes to open the report and actually just read the first page. Obviously, it's not really possible to catch all bugs a hundred percent, because we can only spend a limited time on it. But I think over the lifespan of Quantstamp, we've had basically greater than 99.9% success rate, at least at finding things and making sure projects aren't hacked. And most of the projects that are hacked, we actually already have internal understanding that this could happen based on the risks. So, we try to write that in the report.

Lex Sokolin:

Can you give some examples where things did go wrong? I'm interested in two aspects of it. The first is, would it have been preventable, what's the reason for why something wasn't caught? And then separately, the public perception and how to deal with that.

Richard Ma:

So I think probably the biggest incident in Quantstamp history is the Nomad hack. And for Nomad it was definitely 100% preventable. So basically, in the case of the Nomad hack, now, this happened about a year and a half ago, and so it's a bridge, and basically there were three things that could have made it preventable. So, the ultimate cause of the Nomad hack was that, after one of the Quantstamp audits, we found a lot of findings. While the project was still fixing the findings, one of the developers in Nomad, they basically pushed a new change that was not audited. It was a one-line change, it was basically sort of a fix they had for something else, and this one-line change actually caused the vulnerability that basically led to the Nomad hack. That was a case where I think it could have been very preventable.

And I think partly why they pushed the change was because it's a very small change and usually projects, they don't want to get an audit for just one line of code, for example, because the audits, you have to plan them ahead of time. So, I would say the three preventable things there were, one, getting a lot of extra code reviews even internally in the company, so that nobody can push directly to master and then get the change just deployed to production. The second part is just taking... A lot of teams in Web3, they're in a rush because whether it's bridges or DeFi protocols or layer 2s, people always feel like they're competing with other projects.

At the time, I think Nomad was competing with Wormhole and LayerZero and trying to show that... To get traction in the market. And so, a lot of times, actually probably every two to three weeks, Quantstamp, behind the scenes, we usually save a project by finding a critical bug and then trying to delay their release. And a lot of times we're able to save them because they're not in such a rush that they've already put it into the market, so we can have extra time to look at it. So, I would say this is the part where it's harder for the public to see because they just see the ultimate hack, but they don't see the environments that created the lead-up to the ultimate event.
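The interview does not say what the one-line Nomad change was. Public post-mortems of the incident describe a trusted root being initialized to the zero value, which happened to match the default value returned for messages that had never been proven. The Python sketch below assumes that description and simplifies it heavily; `ToyReplica`, `ZERO_ROOT`, and the method names are hypothetical and do not mirror the real contract's interface.

```python
ZERO_ROOT = b"\x00" * 32  # the default value of an unset 32-byte slot


class ToyReplica:
    """Toy message verifier: a message is accepted if its root is trusted."""

    def __init__(self, trusted_root):
        self.confirmed = {trusted_root}  # roots treated as trusted
        self.proven_under = {}           # message hash -> root it was proven against

    def prove(self, msg_hash, root):
        """Record that a message was proven against a given root."""
        self.proven_under[msg_hash] = root

    def process(self, msg_hash):
        """Accept a message only if the root it was proven under is trusted."""
        # Assumption: an unproven message falls back to the zero default,
        # the way an unset storage slot would.
        root = self.proven_under.get(msg_hash, ZERO_ROOT)
        return root in self.confirmed


misconfigured = ToyReplica(trusted_root=ZERO_ROOT)  # the small, unaudited change
print(misconfigured.process(b"forged message"))     # accepted without any proof

healthy = ToyReplica(trusted_root=b"\x11" * 32)
print(healthy.process(b"forged message"))           # rejected
```

The point of the sketch is how small the difference is between the two configurations: a single initialization value decides whether unproven messages are accepted, which is the kind of change that is easy to wave through without review.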

Lex Sokolin:

Do you have to deal with that blow-back, and what are the tools that you have to do it?

Richard Ma:

Yeah, I would say luckily most of the time we're basically able to help the projects. So probably on a weekly basis we might save, I would say anywhere between $1 million and $10 million in hacks, pretty much on a weekly basis. Because almost for every single audit, we'll find high or critical severity bugs in these projects. So, by basically helping them to fix it, we're preventing these disasters from happening. And so, most of the time it's pretty smooth. Most of the time, I think, for Quantstamp, we prefer to just stay in a supporting role and basically try to help out. And then over the years, that's been very satisfying, so yeah.

Lex Sokolin:

Here's a left-field question. Often Web3 is framed as an adversarial environment, where you've got potentially adversarial relationships at every part of the economic stack. So, when you've got blockchain protocols, there are people who are trying to create security, but they're trying to create security because there's attackers who want to break open the chain and direct resources to themselves and overrun it. And then when you go to the smart contracts part, you've got applications that other people are trying to hack, or bridges that people are trying to break and pull money out from. Do you have a sense for why the presumption is so adversarial, and then who the adversaries are? You mentioned that the DAO hacker was an early ICO CEO, which is like, maybe don't undermine the currency that is funding your project. But what's the nature of these financial adversaries, and are there stereotypes or archetypes that you can draw, that you could paint, that other people would understand?

Richard Ma:

I would say just at the highest level, a lot of the hacks they happen because of the experimentation. So, for the things that are really stable in the Web3 ecosystem, for example Compound or Aave or Uniswap, or stable coins like Tether, those have been battle-tested, they're extremely safe. And the hacks tend to happen more where people are experimenting. So today there's still a lot of experiments with ZK-rollups, optimistic rollups, bridges, and so that's where they tend to happen. In terms of the archetypes, I think at the present day, they tend to fall into three main categories. The number one is actually the most well-publicized and well-known one, which is that essentially for the last two years, North Korea, they've been hacking crypto projects, and they've stolen more than $2 billion at this point. So, they did some of the really big hacks like the Ronin bridge hack and also the hack for Harmony.

So, there it's a nation-state actor, they have an extremely sophisticated team. They're not only hacking smart contracts, they're also hacking websites directly. They're hijacking the DNS. They also do phishing attacks to get access to private keys. So I would say that's one archetype that exists today. And basically whenever... Actually, on a weekly basis for Quantstamp, we try to remain on very high alert, just train our staff constantly and also help to train our customers to deal with this.

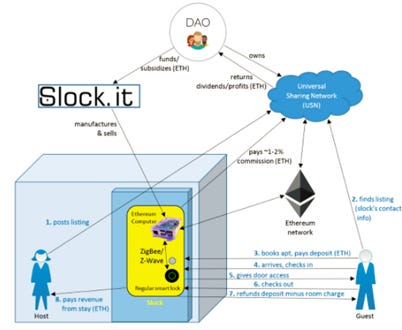

I think the second archetype is more like, I would say it's the developer that found something and feels like they're not going to get treated well by the project directly. And I would actually put Toby, who was the DAO hacker, into this category. And so basically from what I know, and from talking to people that have worked with Toby in 2016 during the DAO event, he was in the Slack channel, and he was trying to talk to the developers, this company called Slock.it that built the DAO contracts, and discussing the potential for this re-entrancy vulnerability.

And I think people didn't take him too seriously and they didn't take any immediate actions, and they weren't really humble about the fact that something like this could happen. And this archetype is basically these developers that maybe try to be the white hat and see that maybe they're not really getting heard and then they decide instead to exploit the contract. And we've actually seen that, quite a few of these through the years, where it's like when developers try to report a bounty, often the projects don't really pay them, even if it's a posted bounty on our website. And so, it leads people into the gray hat or black hat side. And then I would say the third archetype essentially is sort of these financially motivated actors. So, it happened a lot during DeFi summer, during the rise of DeFi, where there were a lot of people on crypto Twitter that were extremely public about hacking a project and calling it a complicated series of trades, basically classifying these thefts of user funds as just very profitable trades.

Lex Sokolin:

That goes back to the earlier point of code is law turned into sort of this pirate ethos of, "If I can market manipulate some oracle into helping me steal user funds and break collateral limits and stuff, then that's a good thing. It's a nice thing I've done to battle proof this code," when in reality you are market manipulating a market. And it is satisfying that at the end of the day, that clear market manipulation is getting caught and kind of flushed out.

Richard Ma:

Yeah, it's satisfying, and I am really glad that this is sort of not a thing anymore. So, a really good example is actually this guy called Avi Eisenberg. So, he basically hacked Mango Markets, and was very public about it, saying that, "Oh yeah, this is just a profitable trade." At the time, I think the hack was over $60 million. This happened about a year ago, and he was actually tried in court and found guilty, and I think he's currently in jail. So, the reason why this third category of just financially motivated actors, it's highly unethical, is just because lots of people, they've put their hard-earned money into these protocols. And so, when somebody goes and hacks them, often people lose their life savings, or a substantial part of their life savings.

And over the last six years of running Quantstamp, I've talked to a lot of these people and the stories are really sad. But when I talk to the hackers, they seem to be very disconnected from this reality that a lot of these people face. And so, I'd say, I'm glad people are not really using this as an excuse anymore, because now people are actually getting tried criminally for it. A lot of times recently what's been happening is that when there's a hack and people have caught the hacker, the hacker has basically returned the funds. This has been happening a lot in the last year, I would say, because they're basically afraid of criminal prosecution, since the code is law thing, it hasn't stood up that well in court.

Lex Sokolin:

Do you think that we're ever going to have much more secure, decentralized financial applications? And I see a couple of different paths, whether it's upgrades to the programming language, and one of the claims of the Move programming language is... It's more formal and therefore there's less room for mistakes. Or maybe you end up turning into software, the audit service in a way that lots of people can actually adopt. What are the paths to getting out of this fairly janky period where everything is kind of scotch-taped together, is there a path for software-based anti-hacking tooling?

Richard Ma:

So I would say the parts that are going to be really safe are the battle tested stuff like stable coins and Zend, Compound, these types of products that have been really battle tested through the years. So those are just going to get even more safe over time. And the parts that I think will pretty much stay always risky are the new experimental projects. And obviously because I deal with these projects every day and I see what happens under the hood, I say to my friends that I'm basically working inside of the sausage factory and watching how the sausage gets made on a daily basis. And from watching how the sausage gets made by founders that have good intentions, but they're not very experienced, they just started the company. I think that part will basically always be risky.

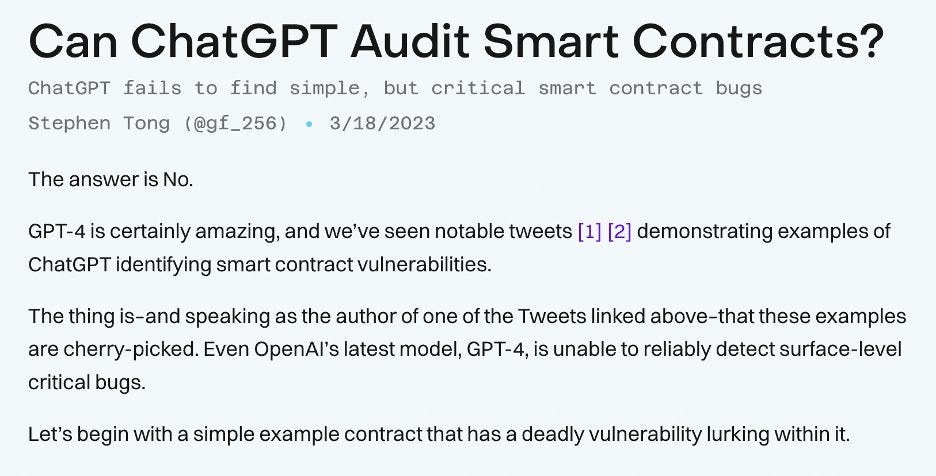

And the reason is that a lot of the bugs, they're kind of these design bugs. There's no way to perfectly cover them. And you can see it from the parallels with some of the biggest companies in the world like Apple and Google, Netflix, they still have bugs all the time. They just constantly patch them, and you're getting constant updates to your MacBook. A lot of those are security updates. And so even there, there's constantly new zero-days that are discovered, like mistakes that developers have made. The thing I think that will improve things is probably AI-based debuggers. So, for Quantstamp, we've been building a tool called AuditGPT, and we've started to use it in all of our audits. And basically, what it does is that it translates the code into human-readable language and then helps to match it against previous vulnerabilities, and basically flag it to the auditors to look more into. So, I think this part of the security auditing stack and coding stack, it'll just get better over time. It's not a perfect solution, but it definitely, I think, helps the people that are writing the software.

Lex Sokolin:

Like you say, there's a reason that risk capital is risk capital, and that's because it's at risk. And so, when you are building something super novel and the very ground in which you're trying to build it is changing every day, then there's no way but to be hands-on and be exposed to novel software dangers. Last question for you. You have fantastic access to all the projects that are launching in the space, because you're auditing them before they go live. If you look forward at 2024 and 2025, what kinds of things are you seeing that you think are the most exciting sectors or most exciting names for others to focus on?

Richard Ma:

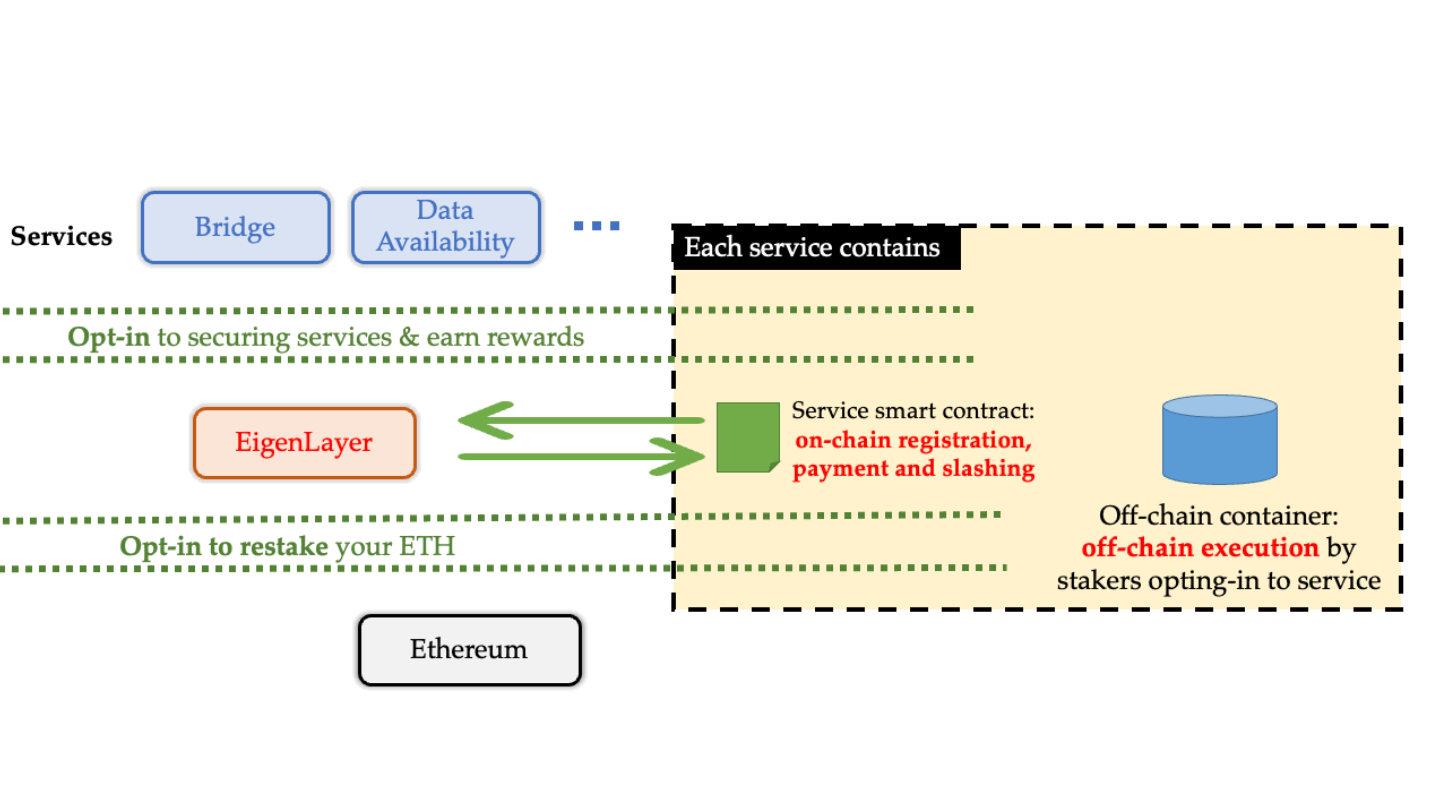

Yeah, I would say probably the three top ones is, one is definitely EigenLayer. So, it's a project that is allowing you to restake your staked ether, and basically get more yield and contribute security to other protocols, bootstrapping on top of Ethereum's security. It's kind of a new frontier in terms of, there's so many projects building on top of EigenLayer, and we've been auditing a lot of them. It's attracting a lot of VC capital as well. So, this is definitely going to be a theme for 2024.

The second one is the rise of ZK-rollups. So currently the biggest two rollups are Optimism and Arbitrum, which are both optimistic rollups. And for optimistic rollups, you have to wait up to seven days to withdraw your money. And basically, for ZK-rollups, you can get instant proofs, and so that's a lot better. So, I would say that's a second theme. And then third, there's a lot of exciting development happening on Solana, so it's having a huge resurgence. There's a lot of projects that were building during the bear market, which are now launching, and so we've been auditing a lot of those, and I think those are going to be pretty big for 2024.
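The withdrawal difference Richard mentions can be sketched as two finality rules. This is an illustrative Python model only: the seven-day figure comes from the interview, while the function name `can_withdraw`, its parameters, and the idea of a single boolean `proof_verified` are simplifying assumptions rather than any rollup's real interface.

```python
from datetime import datetime, timedelta

# Challenge window for optimistic rollups, per the "up to seven days" in the interview.
CHALLENGE_WINDOW = timedelta(days=7)


def can_withdraw(rollup_kind, requested_at, now, proof_verified=False):
    """Return True once a withdrawal could be finalized in this toy model."""
    if rollup_kind == "optimistic":
        # Assumed valid unless challenged, so users wait out the full window.
        return now - requested_at >= CHALLENGE_WINDOW
    if rollup_kind == "zk":
        # Finality depends on a validity proof being verified, not on waiting.
        return proof_verified
    raise ValueError(f"unknown rollup kind: {rollup_kind}")


requested = datetime(2024, 1, 1)
print(can_withdraw("optimistic", requested, requested + timedelta(days=3)))
print(can_withdraw("zk", requested, requested + timedelta(minutes=30), proof_verified=True))
```

In practice, generating and verifying a validity proof also takes some time, so "instant" here is best read as "not gated by a fixed challenge period".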

Lex Sokolin:

Amazing. If our listeners want to learn more about you or about Quantstamp, where should they go?

Richard Ma:

Probably the easiest place is just quantstamp.com, or our Twitter @quantstamp, and yeah.

Lex Sokolin:

Fantastic. Richard, thank you so much for joining me today.

Richard Ma:

Appreciate it. Thanks a lot.

Disclaimer here — this newsletter does not provide investment advice and represents solely the views and opinions of FINTECH BLUEPRINT LTD.