Hi Fintech Architects,

Welcome back to our podcast series! If you want to subscribe in your app of choice, you can now find us on Apple, Spotify, or RSS.

In this conversation, we chat with Jason Morton, CEO at EZKL, Founder at Zkonduit, and an Associate Professor at Penn State. EZKL aims to simplify the implementation of ZK cryptography: it takes a high-level program description and generates the necessary components for ZK verification, so that developers can focus on building applications instead of delving into the complex underlying mathematics.

At Penn State University, Jason focuses on applied algebraic geometry and tensor networks in fields like statistics, computer science, and quantum information. His work explores the connections between various information-processing systems, such as Bayesian networks and quantum circuits, through the lens of tensor networks and monoidal categories, aiming to uncover shared mathematical foundations across these domains. His research includes studies on dimensions of marginals of Kronecker product models and computational methods in theoretical computer science (Penn State Science).

Topics: fintech, machine learning, ai, artificial intelligence, llm, zk-proofs, zero-knowledge proofs, blockchain, web3

Tags: EZKL, Ethereum, Bitcoin, DARPA

👑See related coverage👑

DeFi: $225MM raise for Monad, Ethereum competitor with parallel execution

[PREMIUM]: Trusting Artificial Intelligence based on ZK Proofs, and the $10B fraud market

[PREMIUM]: Web3: EY & Polygon enterprise rollups; new NFT smart contracts features; $22MM for Nil Foundation ZK Proofs

Timestamps

1’18: From Mathematics to Machine Learning: Tracing the Journey of an AI Researcher

3’47: Putting Theory Into Practice: Navigating the Evolution of Neural Networks and Blockchain

7’18: Understanding Zero-Knowledge Proofs: The Evolution and Impact of Cryptographic Privacy

11’02: Digital Trust and Privacy: How Zero-Knowledge Proofs Enhance Security and Authentication Online

16’15: From Consensus to Cryptography: Exploring the Transformative Impact of Zero-Knowledge Proofs on Blockchain Technology

22’30: Revolutionizing AI with Blockchain: The Strategic Focus of EZKL on Verifiable and Secure Machine Learning

28’18: Connecting Worlds: Bridging Advanced AI and Blockchain Technology for Economic and Computational Efficiency

31’21: Strategic Transparency: Integrating Proofs in Smart Contracts for Trustworthy Investment Strategies

35’44: EZKL Defined: Navigating the Blend of Product, Library, and Framework in Blockchain Proofs

38’48: Blurring Lines: Will Web3 Innovations or Traditional Web2 Enterprises Drive the Future Demand for EZKL?

40’28: The channels used to connect with Jason & learn more about EZKL

Illustrated Transcript

Lex Sokolin:

Hi, everybody, and welcome to today's conversation. We have an absolutely fascinating topic today with Jason Morton, who is the founder and CEO of EZKL. EZKL is a technology that helps people build around artificial intelligence using ZK proofs and the latest in technology. So, we'll open all of that up. Jason, I'm so excited to have you on the podcast.

Jason Morton:

Good to be here, Lex.

Lex Sokolin:

So maybe just to get us into the mood, how did you enter the industry or how did you enter this space? What is your background? Where do you come from, and what do you focus on?

Jason Morton:

Yeah, thanks. So, it's a very long road. You can think about what we do today as taking an AI model or a statistical model, turning it into a system of polynomial equations, and turning a crank to get a cryptographic proof. I have a PhD in math and I was a math professor. My area of research was something called algebraic statistics, and we did exactly that: we took a model, whether an AI model or a statistical model, and thought about it in terms of algebraic geometry, in terms of systems of polynomial equations.
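To make "turning a model into a system of polynomial equations" concrete, here is a minimal sketch, assuming a toy two-input linear layer followed by a squaring activation (chosen because squaring is already a polynomial). The field modulus and the encoding are illustrative assumptions, not EZKL's actual encoding. A proof system would then prove that someone knows values making every equation zero, without necessarily revealing them; that proving step is not shown here.

```python
# A toy "circuit": a two-feature linear layer followed by a squaring activation,
# written as polynomial equations over a prime field. Hypothetical; not EZKL's encoding.
P = 2**61 - 1  # Mersenne prime used as a toy field modulus


def constraints(x1: int, x2: int, w1: int, w2: int, b: int, h: int, y: int) -> list[int]:
    """Return one residue per equation; a valid witness makes every residue 0."""
    return [
        (w1 * x1 + w2 * x2 + b - h) % P,  # hidden value h = w1*x1 + w2*x2 + b
        (h * h - y) % P,                  # output y = h^2 (a polynomial activation)
    ]


def is_satisfied(**witness: int) -> bool:
    """True when every polynomial equation holds for the given assignment."""
    return all(residue == 0 for residue in constraints(**witness))


# Honest witness: h = 2*3 + 5*4 + 1 = 27 and y = 27^2 = 729
print(is_satisfied(x1=3, x2=4, w1=2, w2=5, b=1, h=27, y=729))  # True
print(is_satisfied(x1=3, x2=4, w1=2, w2=5, b=1, h=27, y=730))  # False
```

A real model compiler has to handle far more than this (non-polynomial activations, many layers, real-valued weights), but the shape of the object is the same: a list of equations plus a witness that satisfies them.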

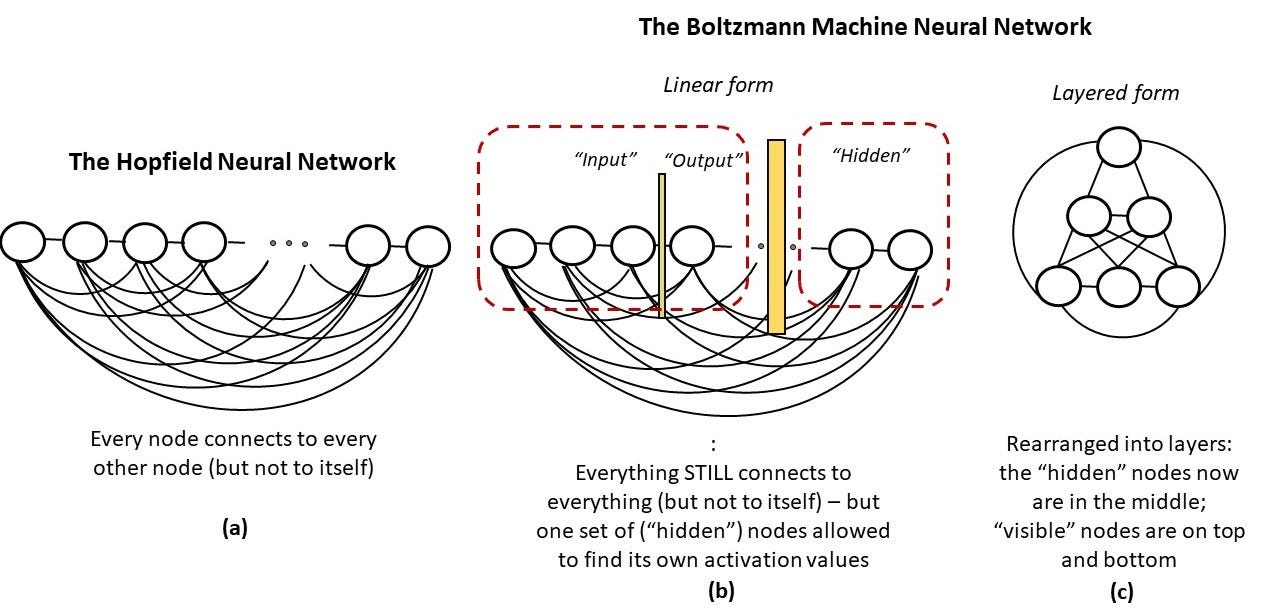

And actually, the first time I tried to do that was around 2008, when we wrote a paper about Boltzmann machines. I had this meeting with Andrew Ng and he told me that deep learning was the new thing and people were thinking about putting it on GPUs and all this stuff, so I got started working on it back then. It was more of an academic exercise in some sense at that point, because we didn't have a really great reason to turn a model into a system of polynomial equations except to study it. And so, it's been exciting recently, because suddenly that kind of thinking lets us do a lot more with models in terms of verifiability and privacy.

Lex Sokolin:

At that moment, for example, what was your motivation? Was it the difficulty of the problem, the unexplored research areas, or some greater aesthetic pleasure from being able to do this? What drove you to pursue these topics in the first place?

Jason Morton:

Yeah. So back then, it was some combination of really liking the math itself, having studied algebraic geometry and this way of thinking about things, and some measure of conviction that automated decision-making, automated reasoning, AI would be really important in the long term, and wanting to make some kind of contribution to it. At least at that time, I realized that I was not a great engineer of these systems in terms of solving the machine psychology problems or the soft problems, but I wanted to contribute in a way that someone coming from a more mathematical point of view could.

Lex Sokolin:

That's 2008, probably six years before any neural networks were hitting production in the large tech companies. What was your path like between then and the first instances of seeing the magic that machine learning systems started to bring forward?

Jason Morton:

So, as you mentioned, way before it was happening on GPUs, I think there were a lot of windows into it being really important. As part of a DARPA program in 2011, a deep learning program with Yann LeCun, Andrew Ng, Yoshua Bengio and folks like that, my job as the mathematician was: these systems seem to work in practice, but do they work in theory? So, we were trying to get some theoretical results that showed that they were doing something new, because at that time they were very promising but they weren't quite to the point where they could outcompete specialized solutions. They could do face recognition, but not quite as well as a Haar cascade or something like that, right?

So not quite as well as a specialized solution, but they had this glimmer of generalizability, and some people were excited about them. I worked on that, again from the mathematical point of view, proving some properties of these things, and then kind of wandered off a bit just as it got to be commercial, because at this point I'm still an academic and it became very difficult for anybody in academia to compete with big tech.

I think my last NeurIPS was the one before Zuckerberg showed up and money started getting thrown around, right? At that point, I think there was a big divergence between what could happen in the university and what could happen in industry. And I ended up focusing on some other related problems, such as quantum information and studying circuits at a high level using category theory and so on, and that was well into the late 2010s.

Lex Sokolin:

How do you pick the problems that you focus on? You're describing it very casually.

Jason Morton:

No, it's like a search. It's the "isn't this cool?" and then something pulls you down the path. It's very much a random walk, to be honest.

Lex Sokolin:

Is it a novelty search?

Jason Morton:

Yeah, it's a novelty search. Sometimes you have a hammer and you look for a nail, and sometimes you have a problem and you look for the right hammer to solve it. It sort of bounces back and forth. And at the same time, in the background, there was the cryptocurrency and blockchain stuff happening. I think my first attempt at that was trying, and ultimately failing, to buy some Bitcoin on Mt. Gox around 2011, and then kind of losing track of it for a little bit until Ethereum came out, and then I got really excited about that space again. But these strands didn't really come together for me until ZK started to become important in Ethereum and in blockchain in general as a way to make things more private and more scalable.

And at that point, this problem of how you mathematically decompose statistical models and AI models into their constituent polynomial systems, and how you get them to work with blockchain, converged again. And so that was actually when I stepped away from academia and put my energy here.

Lex Sokolin:

So, let's start with the basics, which is ZK. What is a zero-knowledge proof and why are they important and useful?

Jason Morton:

Yeah, okay. So, there have been lots of different ways of presenting zero-knowledge proofs. When I first heard about them at an Air Force meeting in 2015, I didn't get it at all, because the scenario was something like: you want to run a computation on the Amazon cloud, but you don't trust Amazon. And so, you ask Amazon to do this thing with a million times overhead and you get the result. And oh, by the way, it's probabilistic: there's a chance that it won't actually be correct. And that felt kind of crazy, because if we don't trust Amazon, we might as well turn off the internet, right? Everything runs on these cloud servers. So that was hard to get, and that was sort of the publicly checkable proof era. My favorite way to explain it now is that the best way to think about a zero-knowledge proof is as a generalization of a digital signature.

So, if you know what a digital signature is, and not everybody does, that's a good starting point. Digital signatures underlie, of course, blockchain transactions, but also things like how you know, when you go to a website like Genventures.xyz, that it's really the right site. That's the lock symbol: a hierarchy of digital signatures, TLS and everything else. Okay, so take a digital signature scheme like ECDSA. You have as inputs some message that you're signing, really the hash of that message, and a private key. You have a signature algorithm, which is generally very simple: modular exponentiation for RSA, or elliptic curve operations. You run this algorithm, it outputs the signature, and anyone can take that signature together with the message hash and believe that you really knew the secret.

The secret identifies and authenticates you. So, there's a belief, or proof, that you really knew that secret and really ran the algorithm combining that secret and the message hash to produce the signature. The weakness here is that the algorithm itself is fixed once and for all, and the private input is limited to this high-entropy private key. The way I think about a zero-knowledge proof is as a generalization of this. It lets you take that signature algorithm and replace it with any program in the world, any program you might ever want to run. And it lets you take the private input, the secret key, 256 bits or whatever, and replace it with an arbitrary, large private input. It can be your tax documents, it can be your face, it can be a movie, it can be whatever you like, and the same goes for the public information.

And it combines those things and produces, instead of a signature, what we call a proof, which has the same property: anyone can look at that proof and believe that you really ran the algorithm we agreed upon ahead of time on these inputs, and that it showed that this was a video of a cat taking a bath, or something more consequential. So yeah, that's how I think of a zero-knowledge proof. It's very much like a digital signature. It's a programmable digital signature, and it gives us a lot more power.
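The framing of a signature as a proof that you know a secret is literally true of Schnorr signatures: a Schnorr signature is a zero-knowledge proof of knowledge of a discrete logarithm, made non-interactive by hashing the transcript (the Fiat-Shamir transform). The sketch below uses Schnorr rather than the ECDSA mentioned above, over a toy multiplicative group modulo the Mersenne prime 2^127 - 1 with base 3. Those parameters are assumptions chosen for readability and are not secure.

```python
import hashlib
import secrets

# Toy parameters (assumption, NOT secure): modulus 2^127 - 1 (a Mersenne prime), base 3.
P = 2**127 - 1
G = 3
ORDER = P - 1  # exponents may be reduced mod P - 1 because G**(P - 1) == 1 (mod P)


def challenge(commitment: int, public_key: int, message: bytes) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the transcript."""
    data = commitment.to_bytes(16, "big") + public_key.to_bytes(16, "big") + message
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def keygen() -> tuple[int, int]:
    """Secret exponent x and public key y = G^x mod P."""
    secret = secrets.randbelow(ORDER - 1) + 1
    return secret, pow(G, secret, P)


def sign(secret: int, message: bytes) -> tuple[int, int]:
    """Prove knowledge of `secret`, bound to `message`."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(ORDER - 1) + 1
    commitment = pow(G, nonce, P)
    e = challenge(commitment, public_key, message)
    response = (nonce + e * secret) % ORDER
    return commitment, response


def verify(public_key: int, message: bytes, signature: tuple[int, int]) -> bool:
    """Check G^response == commitment * public_key^e (mod P)."""
    commitment, response = signature
    e = challenge(commitment, public_key, message)
    return pow(G, response, P) == (commitment * pow(public_key, e, P)) % P
```

In the generalized picture described above, the fixed relation "y = G^x" is replaced by an arbitrary program, and the secret exponent by an arbitrary private input.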

Lex Sokolin:

One question, again from a very naive point of view: what does this give us, just on the digital signature side? Or, the flip side, if we didn't have this, what would be missing from the internet and our digital experiences?

Jason Morton:

If we didn't have digital signatures or if we didn't have this proof?

Lex Sokolin:

If we weren't able to have the SSL lock next to the URL and if we weren't able to use zero-knowledge proofs, what is the thing that people would not have in their day-to-day experience?

Jason Morton:

Digital signatures alone mostly give us authentication: this person said that such and such was true. Without that, there would be no way of knowing that the site you're going to is the real site. So it'd be very easy to send you to a fake Google or a fake copy of your bank and trick you, and there would be much less security online. The entire blockchain system as we know it wouldn't work, because there'd be no way to sign transactions. There'd be no notion of a wallet. And even things like credit card payment systems would, I think, struggle to operate. There are a lot of signatures happening deep in the bowels of your computer to make authentications and handshakes work, and those would all stop working, basically similar to what happens if someone comes up with a quantum computer and all these things break.

And what's kind of interesting is that we've built all of that, this whole security infrastructure of the internet, and enabled transactions and so much commerce over the internet. We can have Amazon, we can do all this stuff, and we've done it all with signatures, non-programmable signatures, by building these complex hierarchies of operations, trust, and revocation.

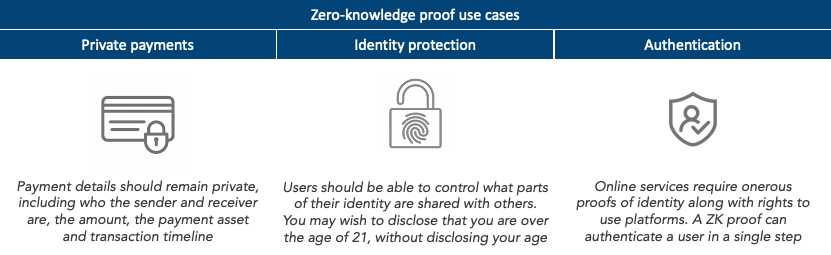

I authorize this key. I can revoke this key. You have this whole complex system, and we're sort of programming with signatures instead of programming in signatures. What zero-knowledge proofs really unlock is the ability to program in signatures. So you can take one of these big complex systems built up from a primitive and, in theory at least, potentially replace the entire system with just a program. If you want to do a new protocol, instead of hiring cryptographers and spending a couple of years, you just write the code, compile it, and it's done. I think we're only in the very, very early stages of seeing what happens when we change that. So, I mentioned this affordance of authentication. They also give you two more things. Privacy is sort of the new element. In a typical digital signature, you get to keep exactly one thing private, which is your private key, right?

Everything else about the message is not private, or at least the hash of the message is not private, so there's no way to keep the rest of it hidden. What these let you do is choose parts of the message to reveal and parts to keep private. So you can, for example, go through a KYC process and say, well, I'm not a member of a group that's not allowed to do this, or I'm a member of a group that is allowed to do this, and that's all the other side gets to learn. The other piece that it gives you is validity. One way to think about it: one person ran a program and produced a proof, and now everyone in the world can believe that that program ran successfully.
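One common way to express "I'm a member of an allowed group" as a program is a Merkle-tree membership check: the group is published as a single root hash, and a member shows a path from their leaf up to that root. The sketch below is only the plain check, using SHA-256 and no leaf/node domain separation (a simplification); it is an illustrative assumption, not the KYC scheme discussed in the episode. In a ZK version the leaf and path would be private inputs and only the root public, so the verifier learns membership and nothing else.

```python
import hashlib


def _hash(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Hash the leaves, then pairwise-hash upward; an odd last node is paired with itself."""
    level = [_hash(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        level = [_hash(padded[i] + padded[i + 1]) for i in range(0, len(padded), 2)]
        levels.append(level)
    return levels


def membership_path(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root, each tagged with whether our node is the left child."""
    path = []
    for level in levels[:-1]:
        sibling = index ^ 1
        sibling_hash = level[sibling] if sibling < len(level) else level[index]
        path.append((sibling_hash, index % 2 == 0))
        index //= 2
    return path


def verify_membership(root: bytes, leaf: bytes, path: list[tuple[bytes, bool]]) -> bool:
    """The check a ZK circuit would run with `leaf` and `path` kept private."""
    node = _hash(leaf)
    for sibling_hash, node_is_left in path:
        node = _hash(node + sibling_hash) if node_is_left else _hash(sibling_hash + node)
    return node == root


allowed = [b"alice", b"bob", b"carol"]  # hypothetical allow-list
levels = build_levels(allowed)
root = levels[-1][0]  # the only value that needs to be public
```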

So even if there's a high overhead, if many people are relying on that program having run correctly, the overhead gets amortized across all of them. So it's kind of an alternative to consensus, right? In consensus, we run the same program many times. Here we run the program once, and everyone else can trust that it's true, or rather verify that it's true. Yeah.
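The amortization argument can be put as back-of-the-envelope arithmetic. In the sketch below all numbers are in hypothetical cost units: consensus re-executes a program of cost C on every one of n nodes, while the proof approach pays a prover overhead once and then a small verification cost per node.

```python
from typing import Optional


def consensus_cost(n_nodes: int, program_cost: int) -> int:
    """Every node re-executes the program."""
    return n_nodes * program_cost


def proof_cost(n_nodes: int, program_cost: int, prover_overhead: int, verify_cost: int) -> int:
    """One prover pays the overhead once; every node only runs the cheap verifier."""
    return prover_overhead * program_cost + n_nodes * verify_cost


def break_even_nodes(program_cost: int, prover_overhead: int, verify_cost: int) -> Optional[int]:
    """Smallest n for which proving once is cheaper in total than re-executing n times."""
    if verify_cost >= program_cost:
        return None  # verification is no cheaper than re-execution, so proving never pays off
    # proof_cost < consensus_cost  <=>  n * (C - v) > overhead * C
    return prover_overhead * program_cost // (program_cost - verify_cost) + 1
```

For example, with a hypothetical program cost of 100, a 1000x prover overhead, and a verification cost of 1, `break_even_nodes(100, 1000, 1)` returns 1011: past roughly a thousand verifiers, proving once beats everyone re-executing, and the gap widens as the overhead comes down.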

Lex Sokolin:

That is the punchline, a hundred percent. Just playing it back the way I understand it, and correct me if I'm wrong: the first bit, which is essentially cryptography, is the authentication and identity math that the entire internet is built on. Whether it's directing traffic to a website, knowing that you're inside a shopping cart, or sending your credit card information securely, that's all built on digital signatures. And the next step, with zero-knowledge proofs, is that they can be made multidimensional and programmable. Take the example you gave of proving that you're a member of a group: you want to get into a bar and they want to card you, but instead of having to show them your whole passport or driver's license, you're able to show a proof that says, yes, this person is over 21, or over 18 if you're lucky enough to be in Europe.

You don't have to reveal everything about yourself. You can just reveal the one attribute that the counterparty is interested in. And if that's right, my next question to you was going to be: what do zero-knowledge proofs do for blockchains? Because before we even get to ZK machine learning, there's been a ton of work building rollups that use ZK as part of their process. And you had mentioned that it's potentially a better mechanism than consensus for achieving programmable truth and trustlessness. So, can you spend more time opening that up, in the sense of: option A is game theory plus money equals consensus, and what that does, versus the zero-knowledge approach and what that does, and how you're seeing these things play out in real time?

Jason Morton:

Yeah. So, there's a bunch of directions we can go here. One way to think about it is that the kind of validity you get from ZK is almost orthogonal to the kind you get from a consensus mechanism. So you can use it to scale a blockchain, so that you can have new and more complex operations that effectively run as though they ran on chain. You want to run your AI model on chain, or you want to run a rollup, or you want to access history: all those things become possible. But it's also interesting that you brought up game theory, because this is a way of securing things that doesn't rely on fraud proofs or on game theory at all, right? Let's step back in time for a second to the 2017 to 2018 period, when people were really excited about enterprise blockchain.

"Let's try to use this new idea we have around impossible-to-forge transactions and contracts and consensus to replace some of the systems we currently use for things like settlement and ERP or whatever." Part of the problem with that, I think, was that if you rely on game theoretic incentives, you really limit your audience. There are economic issues: you can't secure something of much greater value with something of much lower value. And there are some things that are just inconceivable. For example, and this is skipping forward a little bit past the question, there are optimistic approaches to machine learning where you use fraud proofs instead of proving the execution of the algorithm. That of course requires a game theoretic aspect, but there are many adversarial settings, or settings in general, where you don't really have the ability to rely on that.

So, suppose you're talking about a drone or something, and the drone is analyzing an image and trying to decide whether or not to take an action. That's not an economic decision the thing is making, if that makes sense, and it's sort of unthinkable that you would delegate it to a game theoretic mechanism. That's a bit dramatic, but I think something similar happens in corporations, or in what are currently off-chain corporate activities. You might have a need for confidentiality, you may have a need for validity, and the people involved are not comfortable delegating it to a game theoretic mechanism outside the traditional legal system. In those cases, you can use a cryptographic mechanism.

Lex Sokolin:

Got it. So if I were to characterize it: with the consensus mechanism, the game theoretic mechanism, you've got some sort of economic incentive or exposure to be honest, and if you're dishonest, you get massively punished. Usually, the way it works with Bitcoin or Ethereum and other networks is that the more people are playing the game, the more secure the consensus mechanism is. So the bigger the chain, the more likely that the stuff on it is actually true. But with ZK, you don't need to avail yourself of the guessing game and this economic competition; you can just derive whether something happened or didn't.

Jason Morton:

One of the other things that follows from that is speed. I also did some work on MPC systems, and with those, and of course with blockchain systems, anything that requires consensus or communication has this fundamental limit around the speed of light. If you have agents that need to communicate with each other, and those agents live on a network 100 or 300 milliseconds away from each other and have to do some round trips, you can only ever go so fast. You have to wait for block times or fraud proof periods.

That's a further limitation. In the beginning, it looks like those things will be faster than ZK, because the ZK overhead is significant, although it's declining at a really fast rate, much faster than Moore's Law. But the fact that you don't have to communicate means there's kind of no speed limit. If you can parallelize it or improve your algorithms, you can get to finality using a zero-knowledge proof as fast as you can compute the proof. Whereas in the other case, if you're always going to have to do some communication, you always have to wait for that exchange to happen.
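The speed-of-light argument can be sketched numerically. This assumes signals in optical fiber travel at roughly 200,000 km/s (about two thirds of c, a common rule of thumb) and ignores processing and queuing delays, so it gives only a lower bound for a protocol that needs a given number of round trips. Proving time, by contrast, is local and shrinks with parallelism (idealized here as perfect scaling, which is an assumption).

```python
FIBER_KM_PER_S = 200_000  # approximate signal speed in optical fiber


def min_round_trip_ms(distance_km: float) -> float:
    """Physical lower bound on one round trip between two parties."""
    return 2 * distance_km * 1000 / FIBER_KM_PER_S


def communication_floor_ms(rounds: int, distance_km: float) -> float:
    """No protocol needing `rounds` round trips can finish faster than this."""
    return rounds * min_round_trip_ms(distance_km)


def proving_time_ms(sequential_work_ms: float, workers: int) -> float:
    """Idealized proving time assuming perfect parallel speedup."""
    return sequential_work_ms / workers
```

For two parties 10,000 km apart, `min_round_trip_ms(10_000)` is 100 ms, so three round trips can never take less than 300 ms no matter how much hardware is added; the proving term keeps falling as workers or better algorithms are added.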

Lex Sokolin:

Why is it hard to compute the proof? Why does it take a long time today?

Jason Morton:

So, there are fundamental reasons and then there are practical reasons. I think we've sped up our system by, I don't know, something like 20,000 or 30,000 times since we started. Most of that is honestly because these things are magic and a bit of a miracle, and they only just barely started working. Only in the last couple of years did we get to a system that would do anything non-trivial. Whenever you're doing an engineering problem, it starts out with high unit costs, it's slow, it's very manual, and eventually it gets faster and faster and cheaper and cheaper. The same thing happens here. Most of the ZK systems that are in production today, I would say including ours, just kind of barely work.

And so, a lot of the work that we're all doing is going back to a system that works, finding all the bottlenecks, and removing them. It's piece-by-piece engineering. I think a lot of times people are searching for a silver bullet, looking for the new proof system or a new idea that will solve the problems, but I think it's largely a lead-bullet discipline. It's just tuning, tuning, tuning. If you're trying to make a car go fast or a rocket go up, you're removing pieces, making things thinner. That's the process.

Lex Sokolin:

So, let's move to machine learning and what brought you back to looking at the AI space and then what is the current thing that EZKL is focused on?

Jason Morton:

Yeah. So, there's a couple of affordances that we get here. One of the things you might want to do, in the context of blockchain, is to run an AI model as if it ran on chain. Increasingly there are more and more projects in the area that introduce some sort of economic incentive there, a token incentive or the ability to incentivize the creation and execution of models. One of the most fundamental things you need to be able to do there is to check whether the promised model was run correctly: can we make it as though it ran on chain? The way you do that is to commit to the pieces you need to commit to and then verify it. We're thinking about that a lot, of course, and working with folks on it, but in general we're also trying to think about the broad areas in which verifiable AI makes sense.
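Committing to the pieces you need to commit to can be illustrated with the simplest kind of commitment: a salted hash of the model weights, published before the model is used. This is a sketch under assumptions (SHA-256 over packed float64 weights, a random 16-byte salt). A ZK pipeline would more likely use a circuit-friendly commitment, and the proof would additionally show that the committed weights produced a given output; that part is not shown.

```python
import hashlib
import secrets
import struct


def commit_model(weights: list[float], salt: bytes) -> str:
    """Salted SHA-256 commitment to a model's weights (illustrative encoding)."""
    packed = struct.pack(f"<{len(weights)}d", *weights)  # little-endian float64s
    return hashlib.sha256(salt + packed).hexdigest()


def opens_to(commitment: str, weights: list[float], salt: bytes) -> bool:
    """Does the revealed (weights, salt) pair match the published commitment?"""
    return commit_model(weights, salt) == commitment


salt = secrets.token_bytes(16)
published = commit_model([0.5, -1.25, 3.0], salt)  # posted before the model is used
```

The salt keeps a small or guessable model from being recovered by brute-forcing the hash, while the hash binds the publisher to exactly one set of weights.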

And there is an overhead. As I said, I think that overhead is coming down very fast. In 10 or 20 years maybe, we might be able to say the overhead has come down to less than two times, maybe even something like 30%; who knows, it could be sublinear. I think in the long term it'll be a little bit like ECC RAM, right? There's an overhead for ECC RAM: it costs a little bit more, maybe it's not quite as fast for the same price, but what you get is a higher degree of certainty. Long term, it may be almost a cost you can ignore. So we're asking: what are the scenarios? Today, before we get there, what are the scenarios in which we want to run a statistical model, an aggregation, a query, or an AI model, and there's enough of an adversarial environment that it's worth it?

People are motivated to have this kind of cryptographic security. So yeah, those are the explorations we're doing with various customers in different areas: both who needs that kind of security today, and how we provide it. One of the other questions this brings up, which we find is really, really important in moving toward production with these systems, is that you are designing a cryptosystem. You have some things that are secret, some things that are public, some pieces that are checked, some pieces that are not checked. Figuring out how all those things should plug together to produce a system that is trustworthy and economically useful is a really difficult design problem, a kind of Cambrian explosion of possibilities.

It's like someone gives you the internet and you're trying to figure out what the internet is for. Is it for sharing cat pictures? Sometimes I think what we're doing in ZK, us but other people too, is almost like trying to invent a web framework. Go back to the early days of the web. We had C. We had all the pieces: we had Java, early JavaScript. We could have made web applications. You could have made Gmail or all these other web applications that we've seen, but people didn't yet, partly for performance reasons, but also partly because we lacked the abstractions: MVC, twelve-factor, where data goes, stateless design, React and components and all this stuff, right? We just lacked all those abstractions that made developing that kind of application easier.

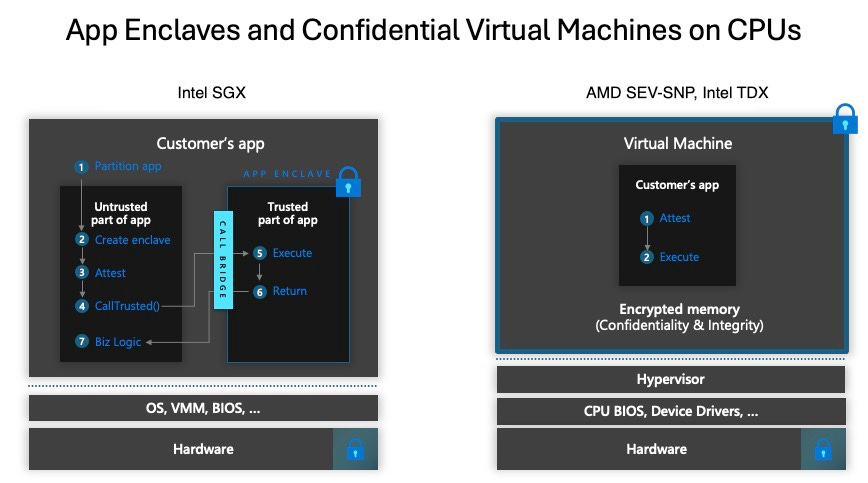

And we're definitely in this stage where we're trying to figure out the right abstractions that people can understand and build on. One extreme is that all you get is signatures, and you can use that to build really impressive systems. The other extreme is: here, I'm going to hand you a verifiable computer, a VM or a trusted enclave, do whatever you want. I feel like neither of those extremes is the right path forward. We have to figure out the thing in between that has the right abstractions and the right interfaces to let people build economically valuable things with it.

Lex Sokolin:

The way that I understand it, or at least my cartoon version of it, is that we have two separate technology trends that are both mathematically driven, but from very different parts of mathematics. One is the blockchain as a computer, and at least in its current form, only a tiny amount of computational bandwidth can go in there. It's like everybody wants to have The Mandalorian rendered in the Unreal Engine, but right now, we have a Nintendo with maybe four colors. And on the other side, we've got these unbelievably hungry statistical models, as you say, trained for an absurd hundreds of millions of dollars on the entire internet, running on supercomputer clusters, incredibly resource intensive, but able to produce labor and economic activity.

And so, we have the Nintendo version of economic architecture and the alien version of intelligence, and trying to connect them is really, really difficult. So how do you transform and compress the stuff that's happening off chain so that it's non-corrupting and true on chain as well? And what kinds of models, machine learning or generative AI, have you seen that you think may be the first use cases for EZKL, the framework for doing this? Where are you seeing demand and thinking, okay, that makes sense, that AI should live on these blockchains, and this is at least a glimmer of what's coming?

Jason Morton:

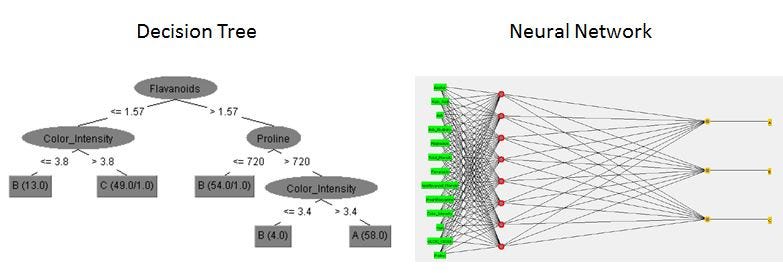

In terms of the scope of the model, I think everybody wants LLMs, right? Big LLMs. That's just because it's the exciting thing at the moment. I think those are reliably coming, because how many cycles we get, or how much proving we can do, doubles at a much faster rate than Moore's Law, and faster than the size of the models, even though those are also getting big quickly. Those will all be possible. But I think it's more interesting today to look at the statistical ML decision models that are deployed in the world today and making economic decisions. That might be something as simple as a regression, a decision tree, or a small neural network that's producing prices or making classifications. Those kinds of things are eminently practical.
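Small models like a regression are practical partly because they need only a little arithmetic. Proof systems generally work over integers modulo a prime, so a common approach is to quantize real-valued weights to fixed-point integers first. The sketch below assumes a scale of 2^8 for weights and inputs and 2^16 for the bias (so it matches the scale of the products); these choices are illustrative, not EZKL's settings.

```python
def quantize(value: float, bits: int) -> int:
    """Fixed-point encoding: value * 2^bits, rounded to the nearest integer."""
    return round(value * (1 << bits))


def fixed_point_linear(weights: list[float], bias: float, x: list[float], bits: int = 8) -> float:
    """Evaluate w.x + b using only integer arithmetic, then decode the result."""
    qw = [quantize(w, bits) for w in weights]
    qx = [quantize(v, bits) for v in x]
    qb = quantize(bias, 2 * bits)  # products of two 2^bits-scaled values carry scale 2^(2*bits)
    acc = sum(a * b for a, b in zip(qw, qx)) + qb  # integer-only, circuit-friendly
    return acc / (1 << (2 * bits))  # decode back to a real number (outside the circuit)
```

The price of working in integers is rounding error: with 8 fractional bits, `fixed_point_linear([0.3], 0.0, [0.7])` lands within about 0.001 of the true 0.21, and more bits shrink the error at the cost of larger numbers inside the circuit.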

Those small models can be proved in a few seconds for the most part, and I think they're a great way to reveal the kinds of problems that make sense. For example, we have seen some LLM stuff, but we're also looking at people who are doing things like credit ratings or aggregation. We're working on a project which is a simple aggregation of votes. It's with Optimism, which periodically gives away a certain amount of money, 120 million last time. What they do is collect ballots from badge holders, from voters, privately, and then produce an aggregate from that: they run it through an algorithm, and that decides how much money each project gets. So we put the algorithm into EZKL, and that lets you preserve the privacy of the voters in two directions. One is that who you voted for doesn't become public, and the other is that you have no way to prove you voted for a certain person or project, which would subject you to the possibility of bribery.
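A vote aggregation like this is the kind of simple function that can be compiled into a circuit. As a hypothetical example (not Optimism's actual allocation rule, which the episode doesn't describe), the sketch below takes each project's median amount across ballots, treating an omitted project as 0, and scales the medians to a fixed budget. In a ZK setting the ballots would be private inputs and only the allocation public.

```python
from statistics import median


def allocate(ballots: list[dict[str, float]], budget: float) -> dict[str, float]:
    """Hypothetical rule: per-project median of ballot amounts, scaled to the budget."""
    projects = sorted({project for ballot in ballots for project in ballot})
    # A voter who left a project off their ballot counts as allocating 0 to it.
    scores = {p: median([ballot.get(p, 0.0) for ballot in ballots]) for p in projects}
    total = sum(scores.values())
    if total == 0:
        return {p: 0.0 for p in projects}
    return {p: budget * score / total for p, score in scores.items()}


ballots = [  # private inputs in the ZK setting
    {"project-a": 10, "project-b": 30},
    {"project-a": 20, "project-b": 10},
    {"project-a": 30},
]
allocation = allocate(ballots, budget=90)  # public output
```

A median is a common choice for this kind of aggregation because a single extreme ballot can't move it much, but it is only an illustration here.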

So that's a very simple algorithm, just an aggregation. It's almost like something you could do with data frames or a SQL query, but it's nevertheless economically important. So we look for those kinds of examples right now to get a sense of where the demand is. We also see a fair amount of stuff in DeFi and games. In DeFi, for example, you can commit to a strategy, put a vault around that strategy, let it run for a while, and show some performance. Anyone who puts money into that same vault can be convinced that future executions will follow the same strategy, with no risk of strategy drift or rugs that aren't built into the original model.

Lex Sokolin:

If you could open that up a little bit more: let's say there is an investment strategy, and there's a box, and the box says, put money in here and I will do X, Y, Z. I will go and invest in NFTs with the color red, and that's the only thing I do. How does lacing in proofs work in that case? At which part of the smart contract does that integration happen?

Jason Morton:

Yeah, the high-level view is basically that you submit to the smart contract what you can think of as a trade or a state transition. You say: here are the inputs, here are the outputs, here's the proof. Then the smart contract runs the verifier. It checks that the inputs are still true, and if they are, it changes them to the outputs. Maybe the outputs are just portfolio weights. In that case you don't really need the inputs, but maybe you have as input something like which block we're in, or some prices. So it's really almost like making a lookup table, or proving the execution of a function: proving a state transition, any kind of state transition.
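The flow described above (submit inputs, outputs, and a proof; the contract verifies, confirms the inputs are still current, then applies the outputs) can be sketched as ordinary control flow. This is a Python model of the contract logic, not Solidity, and every name in it is hypothetical. In particular, `verify_proof` is a placeholder that simply recomputes the model, which is not zero-knowledge; a real contract would call a generated ZK verifier at that step.

```python
from dataclasses import dataclass, field

def strategy(price: int) -> dict[str, int]:
    """Hypothetical committed model: portfolio weights in basis points."""
    red = 7_000 if price < 100 else 3_000
    return {"red_nfts": red, "cash": 10_000 - red}

def verify_proof(inputs: dict, outputs: dict, proof: bytes) -> bool:
    """PLACEHOLDER verifier: ignores `proof` and re-runs the model.

    Not zero-knowledge. A real contract would check a succinct proof here.
    """
    return strategy(inputs["price"]) == outputs

@dataclass
class Vault:
    block: int = 0
    price: int = 0
    weights: dict = field(default_factory=lambda: {"red_nfts": 0, "cash": 10_000})

    def submit(self, inputs: dict, outputs: dict, proof: bytes) -> bool:
        # 1. The proof must verify against the claimed inputs and outputs.
        if not verify_proof(inputs, outputs, proof):
            return False
        # 2. The inputs must still be true on-chain (no stale block or price).
        if inputs["block"] != self.block or inputs["price"] != self.price:
            return False
        # 3. Only then apply the state transition.
        self.weights = dict(outputs)
        return True

vault = Vault(block=42, price=80)
ok = vault.submit({"block": 42, "price": 80}, strategy(80), b"")
bad = vault.submit({"block": 41, "price": 80}, strategy(80), b"")  # stale block
print(ok, bad, vault.weights)  # True False {'red_nfts': 7000, 'cash': 3000}
```

Checking the proof before touching state, and rejecting stale inputs, is what lets anyone submit transitions while the contract only ever moves to states the committed model allows.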

Lex Sokolin:

For people who are not as versed in blockchain, I go back to the example of Ray Dalio and Bridgewater. The Ray Dalio claim is: we use a gigantic global macro model with thousands of inputs to figure out what decisions we're making. There's a recent book on it, as well as a New York Times exposé, which basically says there are a hundred rules in an Excel file that Ray Dalio picks from, and that's how it works. So in that case you'd be able to say: okay, if you do have this enormous global macro model, plug the wires into the prover, and it would actually validate for you whether that thing is being used or whether it's a dude with a spreadsheet.

Jason Morton:

Yeah, yeah, absolutely. That's a really interesting direction that we would like to explore, specifically around asset management and aspects of due diligence. You have an asset manager who says they have a sophisticated quantitative model. You can prove whatever properties you like about that model: how many factors are being included, or what the leverage on each of them is. Is it really a hundred? An even more stark version of this: there are many quantitative strategies where, as an LP, you ask yourself, is this alpha real, or are they just selling puts? Because selling puts looks fantastic until it doesn't. Not literally selling puts, but implicitly selling puts, having a strategy that's equivalent to that. It's really interesting to explore that kind of thing. It doesn't necessarily have to have anything to do with blockchain, of course, because that need to prove some capabilities you have, or some facts about your company, without revealing all the details about your company is a very common situation.
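To see why "selling puts looks fantastic until it doesn't," here is a toy simulation of writing one put per period. The strike, premium, and price path are made-up numbers for illustration, with no pricing model, fees, or margin: the strategy books small steady gains while prices hold and then gives them all back in a single drawdown. This hidden tail exposure is the kind of property an LP might want proved about a strategy without seeing its code.

```python
def short_put_pnl(spot_path: list[float], strike_pct: float = 0.9,
                  premium_pct: float = 0.01) -> list[float]:
    """Per-period P&L (as a fraction of spot) from selling one put each period.

    Each period we sell a put struck at strike_pct * current spot and collect
    premium_pct * spot. At the next period, if spot ends below the strike we
    pay the shortfall. Illustrative only.
    """
    pnl = []
    for start, end in zip(spot_path, spot_path[1:]):
        strike = strike_pct * start
        payout = max(strike - end, 0.0)          # what the put buyer collects
        pnl.append((premium_pct * start - payout) / start)
    return pnl

# Hypothetical path: eleven calm periods, then a 40% crash.
path = [100.0] * 12 + [60.0]
returns = short_put_pnl(path)
print(sum(returns[:-1]), returns[-1], sum(returns))
```

The first eleven periods each earn 1%, which looks like steady alpha; the crash period loses 29%, wiping out the accumulated gains and more.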

I think that's also really relevant when we think about privacy. Usually when people think about ZK and privacy, they're thinking about individual privacy: my PII or my transactions, wanting to hide my transactions. And of course those things tend to be controversial, because governments aren't crazy about them, and also because, economically, people seem rarely willing to pay for privacy.

I think the right place to look is actually more on the corporate side. While an individual may not be willing, or even legally allowed, to maintain their privacy, at the level of the corporation, companies often have an obligation to maintain the confidentiality of other people's private information, or they have their own internal trade secrets, or they want to convince the financial markets that their sales were X and their profit was Y without revealing all the details. So they hire human auditors to check those things, but we could do the same thing with ZK. One way to think about ZK, and the kind of stuff we're doing with EZKL, is that it's a way to remove trusted intermediaries and do something that's a lot cheaper.

Lex Sokolin:

It's a way to actually know the truth as long as there is some sort of digital substrate in which it floats.

Jason Morton:

Right, you have to have a source of truth there. That's part of the reason this whole thing takes time: like HTTPS spreading throughout the internet, if nobody else is doing it, it doesn't necessarily add that much value when you do it. But I think it does have natural network effects once it gets rolling.

Lex Sokolin:

So what is EZKL itself? I know it's a company, and I know that you work with other developers and they pick up pieces of it. How do you think about it? Is it a product? Is it a set of libraries? Is it a framework? And how do people engage with it?

Jason Morton:

So it's all those things. I think the best way to think about it is that if you're very technical, you can use it as a library or as a compiler. As time goes on, we move more and more toward complete solutions, where you have a scenario you want to accomplish and we've thought through the components of that ahead of time. Beyond that, I think about it as moving toward new ideas around sources of truth, analogous to something like a database engine: it enables you to transform one type of truth into another, which is a generally useful thing. But to be more concrete:

Okay, so how do you interact with it today? You have the following options. You can download the command-line tool and create and manage proofs. We have a backend proving service where we keep track of the artifacts and execute proofs for you, which is designed to be very fast and is tuned based on what we've learned about these systems. And we have Python. For a lot of people, the first point of contact is that they have a model they've built in PyTorch, TensorFlow, or scikit-learn, they would like to prove that model, and they can just pip install ezkl and do that.

Lex Sokolin:

What's the economic or commercial model for what you're building? Are you thinking about this as a product, as a protocol, as a giant open-source layer?

Jason Morton:

So right now, the strategy is that this is a company. This is not a token-based protocol. The way we make money is either licensing components of the system or running these backend services for folks.

Lex Sokolin:

And then when it becomes internet scale, what does that company look like?

Jason Morton:

As we grow, we'll be doing more and more of the work. Early on, our earliest users were in some sense our most technically sophisticated. We just handed them something that barely worked and they could build with it. As time goes on, what we have is more and more complete. So I think over time it's going to look more like the kind of experience you'd have interacting with a hosted database system, a hosted accounting system, or an inference service, depending on the application.

Lex Sokolin:

Have we seen companies like this before? If you were to compare it to something else out there, what does it look like?

Jason Morton:

Yeah, I sometimes think of it as a bit like Mongo or Kafka or Confluent; that's where the database analogy comes in. Or potentially something that's a sidecar to your AI workflow, like an ops company such as Weights & Biases, or, in the most ambitious version, something like an ERP.

Lex Sokolin:

Do you think most of the demand will come from (this is a bit of a rhetorical question) Web3 companies or Web2 companies, or does this distinction not even make sense once everything has grown up?

Jason Morton:

Long term, the demand has to come from real economic activity. Sometimes we get a little bit ahead of ourselves there on the Web3 side. So I think, long term, the demand for what we're doing comes from enterprise and Web2, or from Web3 growing into those economic roles. Take a company like Uber: it's hard to predict today whether it will be Uber running this kind of stuff somewhere in its infrastructure, or whether someone coming from a pure Web3 background will end up disrupting it. Same with social or anything else. I expect it will be some mix of the two. I do think it's a bit like an internet-type technology, a verifiable internet or verifiable exchange of information, and over time more and more of the economy will touch it. You can almost think of it as a vector by which Web3 ideas expand into parts of the larger economy they have not been able to reach yet.

Lex Sokolin:

Super interesting. I agree with you on that. It's almost impossible to predict where demand will come from first, and there's just so much growth to be had in the AI space, as well as the Web3 AI space, that I think it'd be much better for our whole niche to be open to each other and not start drawing religious lines in the sand before anyone's even come to the party. You do have to go really broad and open, and I very much appreciate that point of view. If our listeners want to learn more about you or about EZKL, where should they go?

Jason Morton:

The best place, I guess, is the website, Ezkl.xyz.

Lex Sokolin:

Fantastic. Jason, thank you so much for joining me today.

Jason Morton:

Great to talk with you.

Shape Your Future

Wondering what’s shaping the future of Fintech and DeFi?

At the Fintech Blueprint, we go down the rabbit hole in the DeFi and Fintech world to help you make better investment decisions, innovate and compete in the industry.

Sign up to the Premium Fintech Blueprint newsletter and get access to:

Blueprint Short Takes, with weekly coverage of the latest Fintech and DeFi news via expert curation and in-depth analysis

Web3 Short Takes, with weekly analysis of developments in the crypto space, including digital assets, DAOs, NFTs, and institutional adoption

Full Library of Long Takes on Fintech and Web3 topics with a deep, comprehensive, and insightful analysis without shilling or marketing narratives

Digital Wealth, a weekly aggregation of digital investing, asset management, and wealthtech news

Access to Podcasts, with industry insiders along with annotated transcripts

Full Access to the Fintech Blueprint Archive, covering consumer fintech, institutional fintech, crypto/blockchain, artificial intelligence, and AR/VR

Read our Disclaimer here — this newsletter does not provide investment advice and represents solely the views and opinions of FINTECH BLUEPRINT LTD.

Want to discuss? Stop by our Discord and reach out here with questions