Hi Fintech Architects,

Welcome back to our podcast series! If you'd like to subscribe in your app of choice, you can now find us on Apple, Spotify, or via RSS.

In this conversation, we chat with Malcolm deMayo, global head of NVIDIA’s Financial Services Industry (FSI), a vertical business unit that is working to accelerate the adoption of AI. NVIDIA FSI is the leader in accelerating and modernizing critical industry use cases such as fraud detection, document and process automation, customer service, risk management, and much more.

Mr. deMayo has held a range of leadership positions within the technology industry. Prior to his current role, he was group vice president, Oracle FSI Strategic Clients, where he was responsible for growing existing and establishing new client relationships across Oracle's entire portfolio of solutions, such as Enterprise Resource Planning, Human Capital Management SaaS, AI & Analytics, OCI, and FS vertical applications in Banking, Capital Markets, and Insurance. Prior to Oracle, he was Head of Sales at TCS Digital Software & Solutions (DSS), a leading provider of machine learning powered analytic solutions for Retail Banking. In addition, Mr. deMayo has held various leadership roles at EMC, Teradata, and NCR.

Mr. deMayo received a Bachelor of Science degree in Marketing and Computer Science from CCSU.

Topics: AI, Artificial intelligence, Machine learning, accelerated compute, compute, generative AI, genAI, financial services, Neural networks

Tags: Nvidia, OpenAI, Google, Microsoft, EvidentAI, AlexNet

👑See related coverage👑

Fintech: New York Community Bancorp halts trading, raises $1B capital injection

[PREMIUM]: Long Take: Carta's misstep and exit from secondary markets point to a bigger problem

[PREMIUM]: Long Take: Can we be optimistic about 2Q2023 Equities, Crypto and Venture markets?

Timestamps

1’35: Beyond Moore's Law: Nvidia's Pioneering Role in Financial Services Innovation

6’56: Revolutionizing Efficiency: The Transformative Impact of GPU-Accelerated Computing in Finance

14’12: Unlocking Efficiency: How Nvidia's Accelerated Compute Platform Revolutionizes Machine Learning in Finance

19’52: Transforming Finance: The Power of Machine Learning in Fraud Detection and Market Analysis

29’15: From Data Custodianship to AI Revolution: Nvidia's Pivotal Role in Transforming Finance with Generative AI

35’03: Beyond Classification: Nvidia's Role in Fueling Generative AI's Impact on Financial Services Innovation

42’04: Shaping the AI Landscape: The Dynamic Between Open Source Innovation and Proprietary Models in Financial Services

47’01: The channels used to connect with Malcolm & learn more about Nvidia

Illustrated Transcript

Lex Sokolin:

Hi, everybody, and welcome to today's conversation. I'm really excited to have with us Malcolm deMayo, who is the global vice president for financial services at Nvidia. You probably know Nvidia as the most important artificial intelligence company in the world right now, so I'm excited to talk with Malcolm and learn how AI and finance intersect. With that, Malcolm, welcome to the conversation.

Malcolm deMayo:

Pleasure to be here. I've been looking forward to this conversation with you for a number of days now, so excited to be here with you and your audience.

Lex Sokolin:

Excited to have you. There's, of course, so much going on with AI and the hardware and the software elements of it, and we'll take the time to get there. But I wanted to just set the ground and understand, what does your role entail? What does it mean to cover financial services at a company like Nvidia?

Malcolm deMayo:

It's a really, really important question and thank you for it, Lex. Most companies prior to ChatGPT would've been very surprised to learn that Nvidia has been working with financial services firms for over 15 years, actually really now more like 17-plus years, and probably even more surprised to learn that a company that is thought of as a chip company has been innovating together with financial services firms for that long. What it means, back to your question, is that Nvidia goes to market by industry, by vertical. It's very important to us to understand the challenges that industries have in the context of the kinds of solutions we build. We're an accelerated compute platform, so we're looking for compute-intensive challenges and to help solve them.

When you think of that in financial services, you have challenges in trading, in banking, and in segments like insurance, where extreme weather and climate change have to be predicted accurately. In trading, there's the never-ending hunt for alpha, and in banking, there are thousands of opportunities to improve, and we can talk specifically about them.

My role starts with making sure we have the right focus, that we are focusing our resources on solving the right problems, because we don't go to market the way the typical technology company does. Our platform is available through all our partners; we do not sell it directly. It's really important that we work closely with companies in each of those segments, with enterprises, to really understand their workflows and how we can bring value to them without disrupting too much of what they do.

Lex Sokolin:

Let's open up the key verticals in which Nvidia does this work. First, what does compute mean, and then what are the key verticals in which it is required?

Malcolm deMayo:

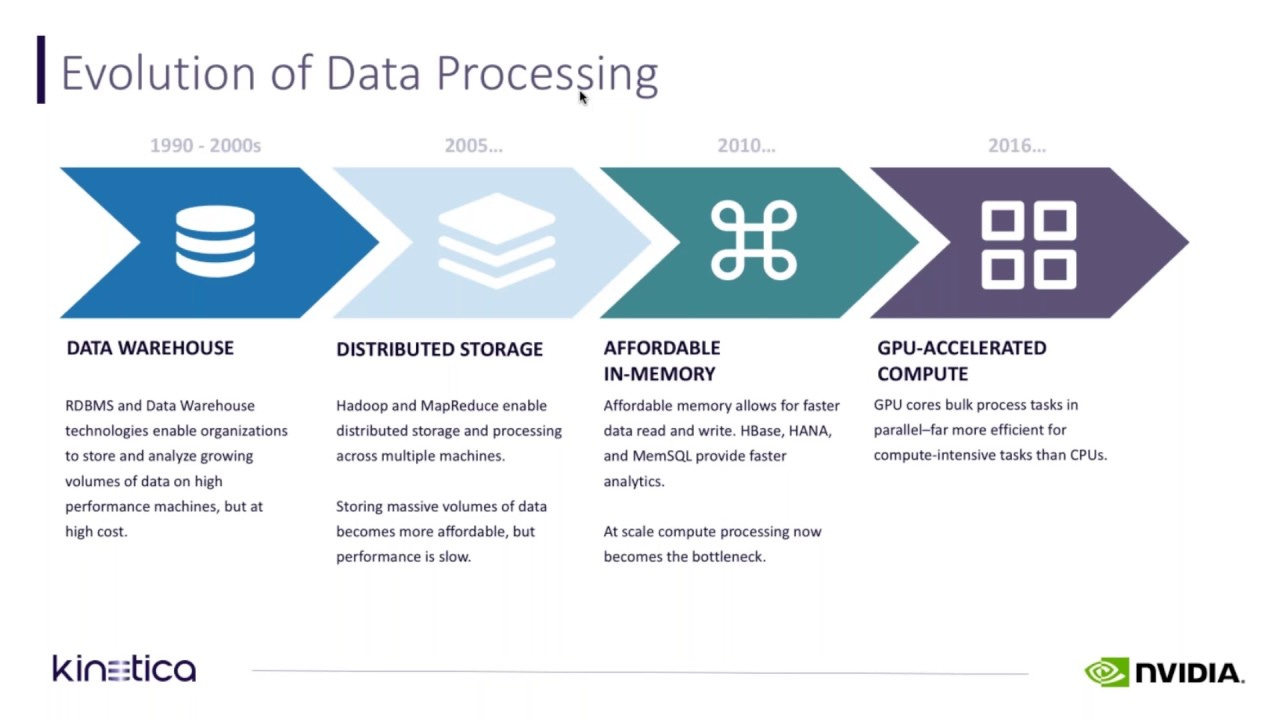

Compute is exactly what you would think it is. Every industry, not just financial services, and every segment within financial services, has been leveraging CPUs for the last 40 or 50 years. We've gotten very comfortable with an informal law postulated by Gordon Moore, the co-founder of Intel Corporation, called Moore's Law. Moore's Law simply said that compute will double every CPU generation while power, in other words energy, and cost stay the same. You'll get twice the performance with every new CPU generation, with power, energy consumption, and cost remaining the same. For decades, financial services firms have met the explosion in transactions and the explosion in data by incorporating the latest compute from companies like Intel and AMD.
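
Written as a formula, the version of Moore's Law paraphrased above compounds geometrically. This is a sketch of that paraphrase only: Moore's original observation concerned the number of transistors per chip, and the symbols (performance P, energy E, cost C, CPU generation n) are introduced here purely for illustration.

```latex
P_n = 2^{n} P_0,\qquad E_n = E_0,\qquad C_n = C_0
\;\;\Longrightarrow\;\;
\frac{P_n}{C_n} = 2^{n}\,\frac{P_0}{C_0},
\qquad\text{e.g. } n = 5:\ 2^{5} = 32
```

Under that assumption, five generations would deliver 32 times the performance for the same power and cost, which is why growing transaction and data volumes could be absorbed simply by buying the latest CPUs while the trend held.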

But about 10 years ago, Moore's Law stopped working. Today it's relative; for the most part, Moore's Law is dead. At the same time, in the last 10 years, we've had an explosion in transactions from millions to hundreds of millions to billions, and an explosion in data from gigabytes to petabytes and soon exabytes. You hear companies in the trading space talk about their 200- to 300-petabyte data sets. You hear large banking organizations like JPMorgan talk about their 500-petabyte data store.

When compute power isn't keeping up, isn't staying on that Moore's Law curve, what happens is you have to buy more compute. Most firms have moved to cloud to take advantage of the horizontal scalability and elasticity that cloud delivers. What's happened, hidden in plain sight, is that data centers now consume 3% of the world's energy, and that's expected to double by 2030. At the same time, the CapEx cost of compute has gone through the roof. Compute is the engine we need to process transactions and data in order to satisfy our customers in financial services.

Lex Sokolin:

That shouldn't be confused with GPUs, right? There's lots of hunger right now for GPUs, a different kind of chip from the CPU cloud stuff. Can you delineate the difference?

Malcolm deMayo:

The simplest way to think about CPU, or traditional or legacy compute, versus GPU-accelerated compute is that a legacy CPU executes one instruction at a time and a GPU executes thousands of instructions at a time, and so we generate this massive speedup. In fact, NVIDIA has delivered a million-x speedup in the last 10 years with our accelerated compute platform.

Lex Sokolin:

Are the tasks the same or are they different in some manner?

Malcolm deMayo:

The tasks are the same, Lex. What we do is pair a GPU with a CPU, so you're not getting rid of the CPU. But the tasks the CPU was tackling required you to scale out and add servers, so data centers are bursting at the seams, whether they're owned by enterprises or in cloud. When we apply GPU acceleration and generate a 50x to 100x speedup, we're essentially allowing you to do the same work with a lot less compute and a lot less physical footprint. As an example, the work of 10,000 CPU-only servers could be done by 200 GPU-accelerated compute servers. It's the same work, but instead of spending a hundred million dollars on compute, you're spending $8 million. Instead of consuming 5 megawatts of power, you're consuming 0.26 megawatts. That's a 12.5x reduction in CapEx, x not percent, and roughly a 20x reduction, again x not percent, in power consumption.
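
The consolidation figures in this example can be checked with a few lines of arithmetic. This is a minimal sketch that only restates the numbers quoted in the conversation; the variable and function names are illustrative. Note that 5 MW divided by 0.26 MW is about 19.2x, which rounds to the 20x figure quoted, and going from 10,000 servers to 200 is a 50x consolidation, in line with the low end of the 50x to 100x speedup range.

```python
# Figures quoted in the conversation for one illustrative workload.
cpu_servers, gpu_servers = 10_000, 200
cpu_capex_musd, gpu_capex_musd = 100.0, 8.0   # millions of US dollars
cpu_power_mw, gpu_power_mw = 5.0, 0.26        # megawatts


def reduction(before: float, after: float) -> float:
    """Return the 'x' reduction factor, i.e. before / after (not a percentage)."""
    return before / after


server_x = reduction(cpu_servers, gpu_servers)
capex_x = reduction(cpu_capex_musd, gpu_capex_musd)
power_x = reduction(cpu_power_mw, gpu_power_mw)

print(f"servers: {server_x:.1f}x fewer")
print(f"capex:   {capex_x:.1f}x lower")
print(f"power:   {power_x:.1f}x lower")
```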

Lex Sokolin:

We'll jump into generative AI and the kinds of resourcing it needs in a little bit, but let's first spend the time to open up what it is that financial companies purchase from Nvidia. What verticals do you serve? Then, what are the real needs in those? What are the biggest customer problems that you see?

Malcolm deMayo:

There are a lot of great challenges in our business. One thing that is consistent is that the minute you knock down a problem, you discover another big problem just around the corner. When you think about trading, historically, we've helped accelerate the options trading business, which is very complicated and very computationally intensive. You're required to run lots of simulations to determine the most efficient price for an option and the risk of holding that option. Where is that option going to end up at its expiration date? Is it going to be in the money? Am I better off selling this option or holding it?
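
To make the simulation idea concrete, here is a minimal Monte Carlo sketch for pricing a European call option, assuming the underlying follows geometric Brownian motion with a constant interest rate and volatility. The parameters are hypothetical, and real desks also price early-exercise and path-dependent options with more elaborate models. Each simulated path is independent, which is what makes this kind of workload a natural fit for a GPU: here the whole batch of paths is computed as one NumPy array expression on the CPU, and a NumPy-compatible GPU array library such as CuPy could evaluate the same expression on a GPU (not shown). The Black-Scholes closed form is included only as a sanity check.

```python
import math

import numpy as np


def mc_european_call(s0, k, r, sigma, t, n_paths=1_000_000, seed=0):
    """Monte Carlo price of a European call under geometric Brownian motion."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(n_paths)  # one standard normal draw per simulated path
    # Terminal prices for every path at once (data-parallel, no Python loop).
    st = s0 * np.exp((r - 0.5 * sigma**2) * t + sigma * math.sqrt(t) * z)
    payoff = np.maximum(st - k, 0.0)  # call payoff at expiration
    return math.exp(-r * t) * payoff.mean()  # discounted average payoff


def bs_european_call(s0, k, r, sigma, t):
    """Black-Scholes closed-form price, used here only to check the simulation."""

    def norm_cdf(x):
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

    d1 = (math.log(s0 / k) + (r + 0.5 * sigma**2) * t) / (sigma * math.sqrt(t))
    d2 = d1 - sigma * math.sqrt(t)
    return s0 * norm_cdf(d1) - k * math.exp(-r * t) * norm_cdf(d2)


mc = mc_european_call(100, 100, 0.05, 0.2, 1.0)
bs = bs_european_call(100, 100, 0.05, 0.2, 1.0)
print(f"Monte Carlo: {mc:.3f}  Black-Scholes: {bs:.3f}")
```

With a million paths the simulated price typically lands within a few cents of the closed form; answering the other questions raised here (risk of holding, probability of finishing in the money) means running many more such simulations across scenarios, which is where the compute cost comes from.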

We've been doing all of those calculations for 15 years together with large sell-side banks and with buy-side hedge funds and asset managers. That work has started to evolve as they think about how they want to leverage generative AI to process vast quantities of different types of data: not just market data, but text data, sentiment data, social media data, et cetera. That is a very interesting and large challenge that they're leveraging our platform to tackle.

In banking, banks have to be very, very careful in the adoption of new technology. They're heavily regulated, their customer data is heavily regulated, they're the custodians of their customers' information, and it's a big responsibility. But there are thousands of use cases they can be pursuing that predate GenAI. A great example: large banks have to produce a daily credit loss report and deliver it to the Fed in the US, and to other regulatory bodies in other jurisdictions. In one case, we took a workload that runs 20 hours. In those 20 hours, the bank moves data into its models, runs risk and credit loss scenarios, and then delivers the results to the Fed.

That 20-hour workflow ran in under four hours on our platform. That's about $20 million in savings across the year on just one workload that, instead of taking 20 hours, now takes just under four hours. Lex, when you think about that, as a bank, no pun intended, you can either bank the savings and just run the same workload in four hours, or you can start to think about all the compromises you make, the attributes you're not using, the scenarios you're not running, that would give you a more accurate view of your credit loss and put you in a better position with your compliance team and your regulators.

In the payments industry, transaction fraud has soared since COVID. It's now estimated to be close to a $40 billion annual problem, and here we're talking about credit card transactions. Working together with the large networks to understand how to leverage our platform to better identify fraudulent patterns is helping to take a bite out of fraud. In fact, we helped American Express reduce their annual fraud by 6%, which is a very large sum of money.

Lex Sokolin:

Let me frame a little bit where I'd like to go next. In the current moment, artificial intelligence is the primary thing that has all the attention on it. I'm going to draw a line between machine learning, the types of AI already deployed in most financial institutions for underwriting, lending, insurance, fraud, decisioning models, and so on, and Generative AI, which is being used largely to automate skilled knowledge work, content creation, and so on. Before we get to Generative AI, can I double-click on machine learning across your clients? NVIDIA, through its graphics cards, is obviously an enabler of machine learning, and that technical architecture is specifically the reason we are even able to have neural networks that perform efficiently on financial data. Can you talk about the most common machine learning applications you see across the client base, and maybe give some color and examples?

Malcolm deMayo:

Maybe I should take a quick step back, Lex, and differentiate between what we build, which is an accelerated compute platform, and hardware accelerators. There are a lot of hardware accelerators on the market: things like FPGAs, GPUs, and ASICs, available from cloud providers, chip makers, et cetera. That's a hardware accelerator. An accelerated compute platform is hardware, software, and networking, a full system built to enable amazing speedups. At the end of the day, it takes a running workload and speeds it up 50x to 100x by embedding libraries into the workflow that are optimized to run on the GPU, CPU, and DPU. That's what differentiates Nvidia.

Back to your question. The accelerated compute platform is a three-layer cake: hardware at the base, Nvidia AI Enterprise as the software layer, and our AI developer frameworks on top. Let's start with data processing.

The hardware layer is pretty straightforward. It's a family of GPUs; data processing units (DPUs), which accelerate work that CPUs are doing but were never intended to do, like virtualization and network management; and, obviously, a CPU. The software layer is where this gets really interesting. We accelerate the ETL, or data processing, function of machine learning. By the way, under the covers, most enterprises don't realize they spend most of their money moving data around. Or they probably do realize it, but have raised the white flag and said, "There's really not a lot we can do about this. We have to move data to our machine learning models in order to execute them."

But we accelerate that process. In fact, in the Federal Reserve example I spoke about a moment ago, where we took the process from 20 hours to less than four hours, half of the time savings came from accelerating data movement. Data processing is a big application in every segment we're talking about. It's a challenge that keeps growing as we create new types of data and find new technologies like Generative AI that allow us to expand beyond tabular or spreadsheet data to incorporate text, images, video, you name it.

The first horizontal application is data processing. We've built libraries: RAPIDS is a suite of libraries inside Nvidia AI Enterprise that accelerates Apache Spark without a single line of code change. It can also compute SHAP values, so that you can start to understand and explain how a machine learning model is making its decisions. If you're dealing with a tree-based model like an XGBoost model, we're going to accelerate the ETL, or data processing, portion of that end-to-end process. Then we're going to accelerate the training process, where you're teaching the model to do a task, and then the inference portion, where you expose the model to new data so it can make a prediction, identify patterns, et cetera.
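
SHAP (SHapley Additive exPlanations) values, the explanation technique referred to here, are based on Shapley values from game theory: each feature's attribution is its weighted average marginal contribution to the prediction across all coalitions of the other features. As an illustration of what is being computed, here is a brute-force sketch straight from that definition, assuming features left out of a coalition are replaced by a single baseline value (SHAP implementations typically average over a background dataset instead). The fraud-scoring function and its inputs are hypothetical. Enumeration grows exponentially with the number of features, which is why tree-specific algorithms, such as the one behind XGBoost's `pred_contribs=True` prediction option, are used in practice.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley attributions of f(x) relative to f(baseline).

    Features outside a coalition take their baseline value. The loops visit
    every subset of the other features, so cost grows as 2**n_features.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0..n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                present = set(subset)
                without_i = [x[j] if j in present else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]  # add feature i to the coalition
                phi[i] += weight * (f(with_i) - f(without_i))
    return phi


# Hypothetical fraud score over [amount, distance from home in km, new-merchant flag].
def score(v):
    amount, distance, new_merchant = v
    far_away = 1.0 if distance > 100 else 0.0
    return 0.002 * amount + 0.01 * distance + 0.5 * new_merchant * far_away


x = [500.0, 800.0, 1.0]
baseline = [50.0, 5.0, 0.0]
phi = shapley_values(score, x, baseline)
print([round(p, 3) for p in phi])  # [0.9, 8.2, 0.25]
```

The attributions add up to the gap between the explained prediction and the baseline prediction (9.5 - 0.15 = 9.35). The amount feature gets only its linear term, while distance and the new-merchant flag split their 0.5 interaction equally.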

We accelerate all three stages, and we particularly excel at inference of machine learning models. We've built what we call Triton, which is also part of Nvidia AI Enterprise. Triton simplifies inference by allowing you to run a machine learning model on a CPU-only server or on a GPU-accelerated server with the same code base, so your development team doesn't have to support multiple code bases.

In addition, because we built the entire platform and know it end to end, we have a few tricks that let you optimize performance in each phase of execution, whether that's ETL or data processing, training, or inference. As for the actual use cases machine learning is tackling today, you name them: money laundering fraud in banks, transaction fraud in payments firms and payment networks. In trading, it's looking at patterns in tabular data using machine learning algorithms to determine sentiment and find opportunities for alpha, alpha being returns above a market benchmark.

Lex Sokolin:

It's a fascinating look at what people actually have to think about in deploying the technology. I'd love to get a bit more detail on how the use cases work. What does it mean to apply machine learning to fraud? What does it mean to apply it within a capital markets environment, and by what mechanism does it add value? Then, are there any trends in adoption around the industry? How widespread is the usage?

Malcolm deMayo:

Machine learning is very prevalent in all segments. It's being used in production by just about everyone and has been for several years. What does that mean in a transaction fraud environment? What are we actually doing? Think about a typical credit card transaction, Lex. You've got a consumer, whether in-store or online, who is swiping, tapping, or clicking at the point of sale. Then you have about a second and a half to two seconds to authorize that transaction. Think about that: that's 1,500 to 2,000 milliseconds. That's the envelope you're dealing with.

In that period of time, the data record, meaning the credit card, the name on the card, the card number, and the authorization number, gets sent across to determine whether the transaction is approved. In that process, they're trying to determine: is Lex good for it? Is Lex going to be able to pay this back? To do that, the transaction has to go to your bank. They have to check: does Lex have a sizable enough account? Does he have a good payment history? All of that gets done in those 1,500 to 2,000 milliseconds.

In about 20 to 30 milliseconds of that window, we're developing a score, essentially determining, based on a bunch of different attributes that depend on the model: is this a store you frequently buy at? Human beings are very habitual. We have a lot of the same patterns. We usually get coffee at the same place or the same couple of places. We tend to buy clothing and so on from the same places, and to shop in the same geographic areas. So you can start to ask: does Lex buy sports tickets in Puerto Rico? Has he ever done that before? If not, this might not be Lex.
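
The habit-based signals described here can be sketched as simple features computed from a cardholder's history. This is a minimal illustration with a hypothetical record schema (`merchant`, `country`, `amount`) and made-up transactions; a production system would precompute such aggregates so that scoring fits inside the roughly 20 to 30 millisecond slice of the authorization window, and would feed them to a trained model rather than inspect them by hand.

```python
from collections import Counter
from statistics import mean, pstdev


def behavioral_features(history, txn):
    """Compare a new card transaction with the cardholder's past behavior.

    history: list of dicts with 'merchant', 'country', 'amount' (hypothetical schema)
    txn:     dict with the same keys for the transaction being authorized
    """
    merchants = Counter(h["merchant"] for h in history)
    countries = {h["country"] for h in history}
    amounts = [h["amount"] for h in history]
    sd = pstdev(amounts) or 1.0  # avoid dividing by zero for a flat history
    return {
        "merchant_visits": merchants[txn["merchant"]],  # how habitual is this store?
        "new_country": txn["country"] not in countries,  # never shopped here before?
        "amount_z": (txn["amount"] - mean(amounts)) / sd,  # how unusual is the amount?
    }


history = [
    {"merchant": "corner_cafe", "country": "US", "amount": 5.0},
    {"merchant": "corner_cafe", "country": "US", "amount": 4.5},
    {"merchant": "grocer", "country": "US", "amount": 62.0},
    {"merchant": "corner_cafe", "country": "US", "amount": 5.5},
]
usual = {"merchant": "corner_cafe", "country": "US", "amount": 5.0}
odd = {"merchant": "stadium_tickets", "country": "PR", "amount": 900.0}

f_usual = behavioral_features(history, usual)
f_odd = behavioral_features(history, odd)
print(f_usual)
print(f_odd)
```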

Lex Sokolin:

By which mechanism does this happen? Because there are billions of these decisioning points, and it's not like somebody starting out writing software saying, "If outside of this country, therefore flag."

Malcolm deMayo:

What you just described would be more of a rules-based approach, not a machine learning approach. You define some rules and you're constantly trying to stay ahead of the bad guys by creating new rules, versus having AI that can identify patterns and flag things that are outside of the norm.
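
The contrast between a hand-written rule and a learned model can be shown with a toy example. Below, the rule flags every transaction made abroad, while a logistic regression, fit by plain gradient descent on a handful of labeled transactions, learns from the data which feature combinations actually separate fraud from normal behavior. The feature vectors [amount z-score, new country, new merchant] and their labels are hypothetical, and real fraud models (often gradient-boosted trees or neural networks) are trained on far larger datasets.

```python
import math


def predict(w, x):
    """Logistic model: probability of fraud for feature vector x (bias is w[-1])."""
    z = w[-1] + sum(wj * xj for wj, xj in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, lr=0.1, epochs=10_000):
    """Fit weights by batch gradient descent on the average log-loss."""
    w = [0.0] * (len(rows[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(rows, labels):
            err = predict(w, x) - y  # derivative of the log-loss with respect to z
            for j, xj in enumerate(x):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * g / len(rows) for wj, g in zip(w, grad)]
    return w


def rule_flag(x):
    """Hand-written rule: flag anything outside the cardholder's home country."""
    return x[1] == 1


# Features: [amount z-score, new country (0/1), new merchant (0/1)].
rows = [
    [0.1, 0, 0], [-0.3, 0, 0], [0.5, 0, 1], [0.2, 1, 0], [-0.1, 1, 1], [0.0, 0, 1],  # legitimate
    [4.0, 1, 1], [3.5, 0, 1], [5.0, 1, 1], [3.0, 1, 0],  # fraud
]
labels = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
w = train_logistic(rows, labels)

traveler = [0.2, 1, 0]  # normal-sized purchase while abroad
takeover = [4.0, 1, 1]  # large purchase, new country, new merchant
print("rule flags traveler:", rule_flag(traveler))
print(f"model: traveler {predict(w, traveler):.2f}, takeover {predict(w, takeover):.2f}")
```

The rule produces a false positive for the cardholder who is simply traveling, while the learned model scores that transaction low and the account-takeover pattern high, because in this toy data the unusual amount, not the location alone, is what distinguishes fraud.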

Lex Sokolin:

Do any examples come to mind of the primary use case in the other verticals you mentioned?

Malcolm deMayo:

I think the biggest use of AI in trading, even with just machine learning, has been natural language processing: using it to identify the sentiment of, say, a social media post or breaking news. As an observer of the market, you'll see breaking news and you'll see the market move, so you know the algorithms are reading and understanding some of these things.

Let's take the example of a social media post. By the way, it's fair, I think, to say that trading firms protect their IP. It's their differentiation. We're not really sure exactly all of the things they're doing, if that makes sense. But we provide our platform to them. When they flag the challenges they have to us, we work really hard to improve what we're offering. But back to that social media example, it's not enough to just be able to determine the sentiment, positive, neutral, negative. It really doesn't tell the algorithm much.

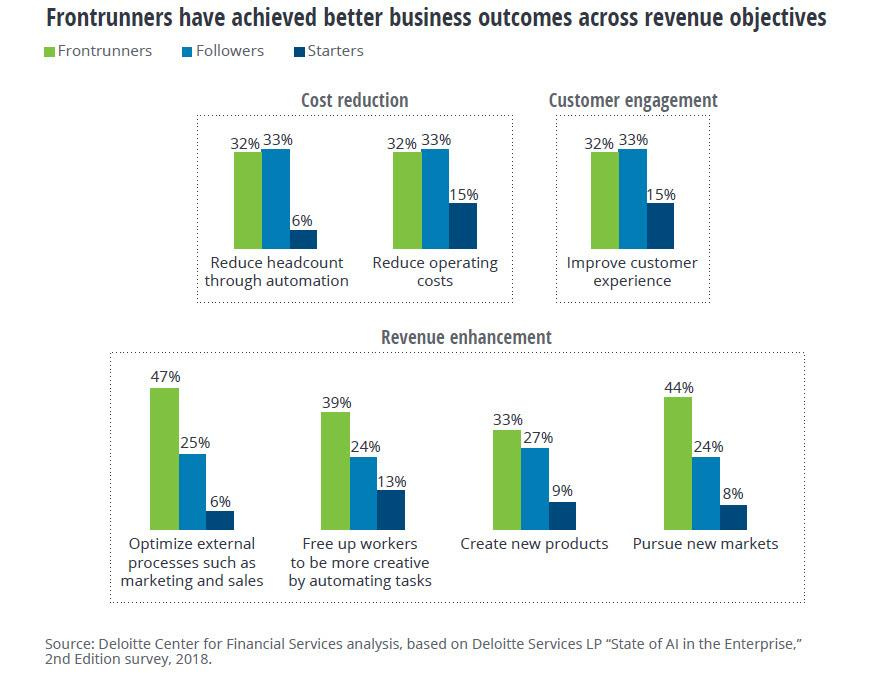

It also has to understand: is Lex an influencer? Are a lot of people going to pay attention to what he posts? Should we weight this post higher or lower than others? That's an example of how natural language processing is being used in trading. In banking, there are so many opportunities to automate processes that have grown up over the last six decades through acquisitions, or processes created because of a regulation that has since changed or been amended, yet still exist, buried in a workflow. Using first principles and rethinking how we do things, machine learning gives banks the opportunity to reimagine, if you will, how they work, and to streamline and automate. The simplest way to think about it is leveraging machine learning to improve productivity, to improve efficiency, and to look for ways to grow revenue, and some uses will do all three.
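
Weighting sentiment by an author's reach can be sketched as a weighted average. This is a minimal illustration under stated assumptions: the sentiment scores in [-1, 1] are taken as already produced by some NLP model, the posts are made up, and weighting by log(1 + followers) is a hypothetical choice that gives large accounts more say without letting them drown out everything else.

```python
import math


def weighted_sentiment(posts):
    """Aggregate post sentiment, weighting each post by its author's reach.

    posts: iterable of (sentiment, followers) pairs, sentiment in [-1, 1].
    The log(1 + followers) weight is an illustrative assumption.
    """
    total = weight_sum = 0.0
    for sentiment, followers in posts:
        w = math.log1p(followers)
        total += w * sentiment
        weight_sum += w
    return total / weight_sum if weight_sum else 0.0


posts = [
    (-1.0, 2_000_000),  # strongly negative post from a very large account
    (0.6, 150),
    (0.8, 300),
    (0.5, 90),
]
unweighted = sum(s for s, _ in posts) / len(posts)
weighted = weighted_sentiment(posts)
print(f"unweighted: {unweighted:+.3f}, influence-weighted: {weighted:+.3f}")
```

Here a single negative post from an influential account outweighs several mildly positive posts from small accounts, flipping the aggregate signal that a plain average would report.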

Lex Sokolin:

Do the use cases get implemented by integration partners and consultants, or do you usually see the banks doing the work themselves? I guess, in terms of the commercial relationships that you formed with the industry, is it often that you're providing the broadly speaking cloud infrastructure and then somebody like EY or McKinsey, does the machine learning project on the substance, or do you see Goldman Sachs showing up and rolling up their sleeves and doing it themselves?

Malcolm deMayo:

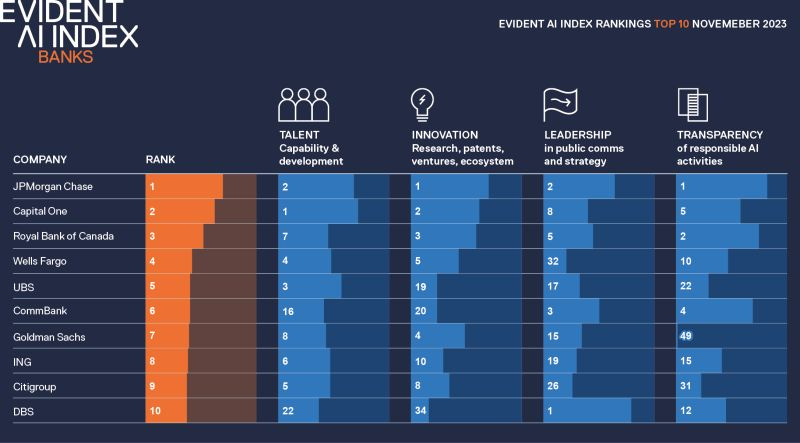

I guess the answer is it depends. It depends on the bank: do they have the skill sets, do they have the team in place to do the work? That isn't always dictated by size. It really comes down to whether they're a frontrunner, and in fact, there's an index published by a research firm called Evident AI. I'm not sure if you're familiar with it, Lex, but you can check it out. Evident AI takes an outside-in view of banking. They've measured the AI maturity of banks based on four pillars: innovation, leadership, transparency, and talent. When you think about how a financial firm is deploying AI, it really depends on: do they have the talent? Are they innovating? Do they want to take a leadership position? And are we learning about this because they're transparent? How would you find these things out? Are they publishing research papers? Are they issuing patents? Do they have a lot of open reqs for AI skill sets, for data engineers and data scientists, et cetera?

I think the answer to the question from an NVIDIA perspective is that we believe it's really important to build a powerful ecosystem. The way I like to think about it is that we want to partner with the ecosystem players the end customers want to partner with, in a way that the customer wins, the partner wins, and Nvidia wins. The question is how we can embed our AI accelerated compute platform, or GPU-accelerated compute platform, into an ecosystem partner's go-to-market in such a way that it provides value to the enterprise using it and to the ecosystem partner as well.

Lex Sokolin:

I think it's a pretty controversial question for the AI industry whether finance firms are able to keep up by making their data open and available, or whether they keep it for proprietary advantage and, as a result, don't avail themselves of some of the more modern technologies. But maybe we can move on to the second part. We've covered some of the ideas around machine learning. Can you talk about Generative AI, the difference between Generative AI and machine learning, and in particular the role NVIDIA has taken in the industry? Can you reflect on the last few years of the company? I don't know what it is anymore, tripling, 10x-ing, 100x-ing in value. What is it about the architecture and function of Generative AI that has had such an impact on Nvidia? Then we'll talk about how that applies to finance.

Malcolm deMayo:

I think it's really important that banks in particular are the custodians of their customer data. While in some jurisdictions it's the law of the land that they open that data up, they still have to make sure customers have opted in. It's really important to be a responsible custodian of customer data, and also to make sure that AI is being used responsibly and safely.

The thing to keep in mind is that you have to go back to 2012, when three researchers at the University of Toronto won an AI contest called ImageNet with a model called AlexNet. NVIDIA was doing a lot of R&D around computer vision, for obvious reasons. What AlexNet did was classify images, for example telling an image of a cat from an image of a dog. Classification is pretty important: we segment everything, and we classify everything, in financial services.

This was what Jensen likes to call AI's big bang moment. He took very close notice because AlexNet was trained on Nvidia GPUs. AlexNet was a deep neural network. What most people miss is what happened between AlexNet in 2012, AI's big bang, and ChatGPT, AI's iPhone moment, when the world awakened to the capabilities of Generative AI.

From AI's big bang moment in 2012 to the iPhone moment in 2022, AI models grew 3,600x. They grew from 60 million parameters to, we think, 1.8 trillion parameters for ChatGPT today. I've already commented that transactions have exploded and the amount of data has grown massively. All of that happened in plain sight, but most people didn't pay much attention to it. They just kept scaling out their servers, whether on-prem or in the cloud, griping about the high cost of compute and the high cost of storage, and along comes Nvidia.

In 2012, Jensen took notice of the strong correlation between AI and compute. I doubt he would say that in 2012 he knew what you just described, the doubling, the tripling, whatever, would happen, but he took very strong note of it and started investing heavily in R&D to build what is today an accelerated compute platform. Really, the only accelerated compute platform, as I've already defined it. When you start creating models that can understand human language and are starting to automate some of the most tedious functions, the potential is enormous. But we'll leave it there for a moment. It's really important that financial firms understand how to use the technology, and the way they do that is by experimenting, by creating teams that have the ability to experiment.

They're all organized a little bit differently. Some firms have AI teams that report directly to the CEO, and some firms have AI teams embedded in their lines of business. The common denominator is that, for AI to be successfully deployed, the business and the creators of AI need to collaborate. That collaboration is an area that we focus on, helping the developers, helping the data scientists by creating a standard approach, a standard platform that's available in every cloud, in every cloud marketplace, making it available through server vendors, having key software ISVs embedded into their offering, so that it just simplifies the adoption. It starts to simplify the adoption of AI to improve developer productivity. You're not having to learn how to work in multiple frameworks, you have a single framework that's available everywhere. That's how we're making a huge difference. As I mentioned, we delivered a million x speed up over the last 10 years. We think we can do that again. We continue to evolve Nvidia AI enterprise. We're retooling it right now to be microservices based, to simplify the adoption and deployment and configuration.

Lex Sokolin:

You brought up ImageNet and seeing the opportunity to become a vendor for this much larger category, not just a vendor, but sort of the core infrastructure piece on which the whole thing rests. Going back to that distinction, the ImageNet stuff and machine vision, all of that is neural networks doing classification, whereas more recently, in the last two years with ChatGPT, it's a different technical architecture and, as a result, very, very different use cases. You're not trying to classify stuff; you're trying to hallucinate stuff probabilistically, generating artifacts that people believe are almost human quality. Can we talk about that distinction and, in particular, its implications for the use cases that financial services companies have to explore or have started exploring?

Malcolm deMayo:

When you think about what Generative AI is enabling and how it's different from its predecessor, it's not replacing machine learning. These technologies are going to coexist. Machine learning is still going to work really well, and financial firms are going to continue to use it. But the ability to understand human language more accurately, and to understand images and videos, opens up a whole new universe of uses that machine learning wouldn't be used for.

One of the areas we're working on very closely: I mentioned that the accelerated compute platform is a three-layer cake, and the top layer is a number of AI development frameworks. One of them lets you build a human avatar, and another does speech-to-text and text-to-speech conversion. This is underpinned by a large language model, so you have Generative AI working with conversational AI and a digital avatar. Think about that for a minute. You can start to attack one of the biggest opportunities for financial services firms, in particular those that deal with consumers: offloading the pressure on their call centers with a human-like engagement that can address any number of questions a customer might have.
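
Architecturally, the digital-human flow described here is a chain of stages around a large language model. The sketch below wires hypothetical stage functions together for one conversational turn; the class and field names are illustrative and are not NVIDIA APIs, and the lambdas are stubs standing in for a speech-recognition model, a large language model, a speech-synthesis model, and an avatar renderer.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class DigitalHumanPipeline:
    """One conversational turn: speech -> text -> LLM reply -> speech -> avatar.

    Each stage is a plain callable so real models can be swapped in; the
    names here are hypothetical, not a specific vendor API.
    """
    speech_to_text: Callable[[bytes], str]
    llm: Callable[[List[str]], str]  # receives the running transcript
    text_to_speech: Callable[[str], bytes]
    animate: Callable[[bytes], str]  # returns a handle to the rendered avatar output
    transcript: List[str] = field(default_factory=list)

    def turn(self, audio: bytes) -> str:
        question = self.speech_to_text(audio)
        self.transcript.append(f"customer: {question}")
        answer = self.llm(self.transcript)
        self.transcript.append(f"agent: {answer}")
        return self.animate(self.text_to_speech(answer))


# Stub stages so the sketch runs without any models.
bot = DigitalHumanPipeline(
    speech_to_text=lambda audio: audio.decode("utf-8"),
    llm=lambda history: "Your card replacement has shipped.",
    text_to_speech=lambda text: text.encode("utf-8"),
    animate=lambda speech: f"<avatar says {len(speech)} bytes>",
)
result = bot.turn(b"Where is my new card?")
print(result)
print(bot.transcript)
```

Passing the running transcript to the language-model stage is what lets the agent handle follow-up questions in context, and keeping every stage a plain callable makes it straightforward to replace a stub with a real model.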

In fact, when we had one of the largest wealth managers in our briefing center, the statement was made that 7 out of 10 questions aren't advice questions, they're complaints. They're questions looking for answers to a problem. All of that can be handled very elegantly by a Generative AI digital human. The idea that Generative AI can generate answers, create content is what's different. It's not just being able to understand the human language much more accurately, but also the ability to then generate new and unique content is one of the things that makes it different from the previous generation of AI.

If you go back to around this time last year, as customers were experimenting, everyone in the business felt like everyone was going to have to create their own models from scratch, and in fact, Bloomberg did. They created BloombergGPT. In the research paper they published, they acknowledged the help they got from Nvidia in building it. If you turn to page 15 of the research paper, you'll see that BloombergGPT was Megatron-enabled. Megatron is code that we developed with Microsoft; it underpins our Nvidia AI Enterprise framework today and is used by many of the model builders out there. There's a parent-child relationship there.

When you think about hallucination, what Bloomberg showed the world last year was that by using a smaller model, a 50-billion-parameter model, and training it on more data, you could achieve more accuracy. By varying the data, not just scraping the internet but also using their proprietary financial data, you could create a model that outperformed community models.

One of the takeaways was that you don't necessarily need the largest model in the world; you can use a blend of data and train a model to be more accurate. Fast forward about three or four months, and the focus shifted to fine-tuning: the idea that we take a trained model, a community model, and then train it on our business. The way we think about it, a community model is like a smart university graduate: very smart, but knows nothing about your business. If you want this model to generate answers that are relevant to your customers, and not just generic answers or recipes, you're going to have to train it on your data. Then fast forward another three or four months last year, and leveraging prompt engineering and retrieval augmented generation as techniques alongside training became very popular.
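To make the retrieval augmented generation idea concrete, here is a minimal Python sketch of its retrieval step, under stated assumptions: it ranks documents by simple word overlap rather than the embedding models and vector databases real RAG systems typically use, and it stops at assembling the prompt instead of calling a model. The function names (`tokenize`, `retrieve`, `build_prompt`) and the sample documents are hypothetical, not part of NeMo or any NVIDIA API.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    # Score each document by how many query tokens it shares (a stand-in
    # for embedding similarity), then return the k highest-scoring ones.
    query_counts = Counter(tokenize(query))

    def score(doc: str) -> int:
        doc_counts = Counter(tokenize(doc))
        return sum(min(count, doc_counts[token]) for token, count in query_counts.items())

    return sorted(documents, key=score, reverse=True)[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    # Prepend the retrieved passages so the model answers from firm-specific
    # data instead of only what it learned during pretraining.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Wire transfers over $10,000 require a second approval.",
        "The branch opens at 9am on weekdays.",
        "Card disputes must be filed within 60 days of the statement.",
    ]
    print(build_prompt("How many days do I have to file a card dispute?", docs, k=1))
```

Note that the model's weights are untouched here; only the prompt changes, which is the key contrast with the fine-tuning approach described above.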

Things are moving very quickly. Our NeMo framework, which we've built as part of Nvidia AI Enterprise for training AI, supports all of those techniques and is used by a lot of model builders today to create their models. To answer your question, from an Nvidia perspective, we think our customers are going to want to take advantage of the latest innovations in model creation. New models seem to be released on a daily basis now. When a new model drops, Lex, we take that model and optimize it to run on our platform, so that you get a 5 to 10x inference benefit.

What does that mean? It means that your model will perform the task you want it to perform 5 to 10 times faster or, said differently, 5 to 10 times less expensively. From an NVIDIA perspective, we want to make sure that models are optimized, that customers have access to a framework to train using any of the techniques I just talked about, in any hyperscaler, on-premises, or in a colo, and that we continue to evolve as the market evolves.
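The equivalence between "faster" and "less expensive" holds when hardware is paid for by the hour: if the same instance handles N times more queries per hour, each query costs 1/N as much. A minimal Python sketch of that arithmetic, assuming a fixed hourly price; the $4.00/hour price and the 10 queries/second baseline are hypothetical placeholders, not NVIDIA figures.

```python
def cost_per_million_queries(gpu_cost_per_hour: float, queries_per_second: float) -> float:
    """Serving cost for one million queries on hardware billed by the hour."""
    queries_per_hour = queries_per_second * 3600
    return gpu_cost_per_hour / queries_per_hour * 1_000_000


if __name__ == "__main__":
    hourly_cost = 4.00       # hypothetical GPU instance price, USD per hour
    baseline_qps = 10.0      # hypothetical throughput before optimization
    for speedup in (5, 10):  # the 5 to 10x range quoted above
        before = cost_per_million_queries(hourly_cost, baseline_qps)
        after = cost_per_million_queries(hourly_cost, baseline_qps * speedup)
        print(f"{speedup}x faster: ${before:,.2f} -> ${after:,.2f} per million queries")
```

If the optimized setup also changes the hourly price (for example, a different GPU tier), the saving is the speedup divided by the price ratio rather than the speedup alone.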

Lex Sokolin:

Last question for you, and it's about the development of open-source models. We have a parallel track between the large technology companies that are spending millions of dollars training on gigantic data sets and the open-source side of the world, which is now lagging in performance by maybe six to nine months but is still doing quite magical things. Do you see the future having more local models on people's devices, specifically on hardware that they control and own? Or do you expect the world to be much more focused on pulling from third-party services, where we delegate our data to other people?

Malcolm deMayo:

There are a couple of different ways to answer that. First and foremost, think of OpenAI and ChatGPT: I think you can still Google Jensen Huang delivering a supercomputer to them in 2016. He hand-delivered it. It was an earlier version of our DGX platform, which is our reference architecture for building AI. We built that for ourselves and then thought, "Geez, you know what? We should make this available to the marketplace." Today, we're putting DGX in all the hyperscalers, so that any size company has the ability to build AI the way we do.

The way we think about it is, if you can solve the problem you're trying to solve with an API service like ChatGPT, then do it. Just keep an eye on the cost, and the cost is going to be a moving target. Say a session is a cascading series of three, four, or five questions or prompts, and a session costs 30 cents. An average enterprise might have 50,000 employees. 50,000 employees times five sessions a day times 260 days a year: at scale, that's millions of dollars. You just have to do the back-of-the-napkin math, because think about how often people use search today. This could get to that level.
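The napkin math above works out to roughly $19.5 million a year. A small Python helper makes it easy to rerun with your own numbers; the function name and the assumption that cost scales linearly per session (no volume discounts or price changes) are ours, not the speaker's.

```python
def annual_api_cost(employees: int, sessions_per_day: float,
                    cost_per_session: float, workdays_per_year: int = 260) -> float:
    """Back-of-the-napkin yearly spend on a pay-per-session API service."""
    return employees * sessions_per_day * workdays_per_year * cost_per_session


if __name__ == "__main__":
    # Figures from the conversation: 50,000 employees, five sessions a day,
    # 260 working days, $0.30 per session.
    total = annual_api_cost(50_000, 5, 0.30)
    print(f"${total:,.0f} per year")  # prints "$19,500,000 per year"
```

Because the result is linear in every input, halving either the per-session price or the usage halves the bill, which is why it pays to track cost as a moving target.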

The first thing is, if you can answer the questions you want to answer with an API service, you should do that. If you want your AIs to be smart and to know how to answer questions about your customers or your data, then you're going to have to use one of the techniques I previously discussed, and you're going to want to use a community model, or an open-source model to use your term.

One of the reasons financial services firms might want to do that is that they may want to own the weights and biases. They want to own the derivative model they create. They want control over their data, the data they're using to train the model, and so they would want to have that model in their own possession. I think we see all of those. We see that the vast majority of questions can probably be answered by an API service, but the minute you want to deliver specific, improved service to your customers, you have to start using your data, and because financial services is heavily regulated, you're probably going to want to use an open-source model to do that.

On the question of the edge: for healthcare and financial services, we've built inside of Nvidia AI Enterprise what we call FLARE, which is federated learning. When you think about where this is going, firms may want to move models to their customers. They may want to train on their customers' data in their customers' data centers and pass the weights and biases back to a central model. To do that, the model has to be secured, and that's what federated learning enables.
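The pattern described here, where training happens where the data lives and only weights come back to a central model, is commonly implemented with federated averaging (FedAvg). The Python sketch below shows that generic algorithm on a toy one-feature linear model. It does not use NVIDIA FLARE's actual API, it omits the security layer the speaker mentions, and the function names and client data are hypothetical.

```python
from typing import List, Sequence, Tuple

Weights = List[float]
Example = Tuple[Sequence[float], float]  # (features, target)


def local_update(weights: Weights, data: List[Example], lr: float = 0.1) -> Weights:
    # One pass of stochastic gradient descent on squared error for y ~ w.x.
    # This runs at the client site; the raw examples never leave it.
    w = list(weights)
    for x, y in data:
        error = sum(wi * xi for wi, xi in zip(w, x)) - y
        w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    # Central server: average client weights, weighted by local sample count.
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def run_round(global_weights: Weights, client_datasets: List[List[Example]]) -> Weights:
    # Send the current model out, train locally, collect only (weights, count).
    updates = [(local_update(global_weights, d), len(d)) for d in client_datasets]
    return federated_average(updates)


if __name__ == "__main__":
    # Two hypothetical client sites whose private data both follow y = 2x.
    site_a = [([1.0], 2.0), ([2.0], 4.0)]
    site_b = [([0.5], 1.0), ([1.5], 3.0), ([3.0], 6.0)]
    w = [0.0]
    for _ in range(50):
        w = run_round(w, [site_a, site_b])
    print(f"learned weight: {w[0]:.4f}")
```

Only `(weights, count)` pairs cross the boundary between the sites and the server, which is the property the speaker highlights; a production deployment would add encryption and authentication on top of that exchange.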

In addition to that, in our latest GPUs, we're encrypting in the GPU. Homomorphic encryption is performance-intensive and not really pragmatic in a lot of use cases, so we've built something called confidential computing on the GPU. We're enabling our customers to essentially move AI where they need to: to where the data is, and to meet their customers where they are, whether that's in their data center, in the cloud, in a colo, or wherever it may be. In other industries, you're seeing GPUs in cars now, and a lot of uses in edge devices and robotics. Our family of GPUs starts at around $50 and ramps up to $250,000 in terms of price points. We think we understand where the market is going and where the uses will be, and we're building all of the capabilities needed to get there. I hope that answered your question.

Lex Sokolin:

It absolutely did. Thank you so much for giving us this wonderful overview of how finance can interact with Nvidia and how it can avail itself of all the opportunities across machine learning, Generative AI, and things emerging on the edge. Malcolm, if our audience wants to reach out to you, how should they do that?

Malcolm deMayo:

They can reach out to me at mdemayo@nvidia.com. MdeMayo, D-E-M-A-Y-O, MdeMayo@nvidia.com.

Lex Sokolin:

Fantastic. Thank you so much for joining me today.

Malcolm deMayo:

Hey, you're welcome. It's great to be here with you, Lex.

Shape Your Future

Wondering what’s shaping the future of Fintech and DeFi?

At the Fintech Blueprint, we go down the rabbit hole in the DeFi and Fintech world to help you make better investment decisions, innovate and compete in the industry.

Sign up to the Premium Fintech Blueprint newsletter and get access to:

Blueprint Short Takes, with weekly coverage of the latest Fintech and DeFi news via expert curation and in-depth analysis

Web3 Short Takes, with weekly analysis of developments in the crypto space, including digital assets, DAOs, NFTs, and institutional adoption

Full Library of Long Takes on Fintech and Web3 topics with a deep, comprehensive, and insightful analysis without shilling or marketing narratives

Digital Wealth, a weekly aggregation of digital investing, asset management, and wealthtech news

Access to Podcasts, with industry insiders along with annotated transcripts

Full Access to the Fintech Blueprint Archive, covering consumer fintech, institutional fintech, crypto/blockchain, artificial intelligence, and AR/VR

Read our Disclaimer here — this newsletter does not provide investment advice and represents solely the views and opinions of FINTECH BLUEPRINT LTD.

Want to discuss? Stop by our Discord and reach out here with questions